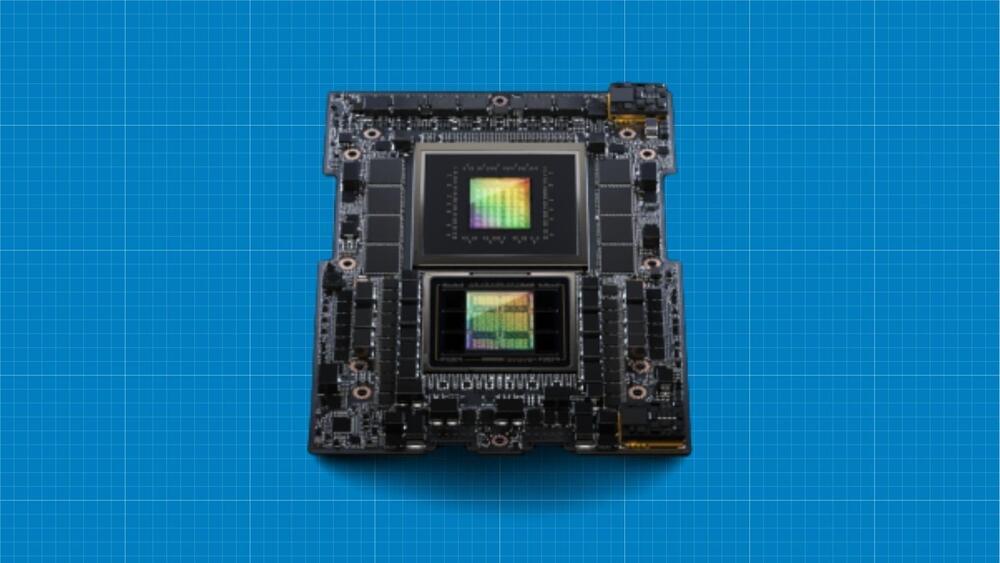

Advances in robotics and artificial intelligence (AI) are opening a new era in warfare. Crewed ships and traditional naval operations are not disappearing, but they are increasingly supplemented by uncrewed systems. The US Navy's Task Force 59 is at the forefront of this shift, integrating AI and robotics into naval operations. With a fleet of autonomous robot ships, the Navy aims to change the way wars are fought at sea.

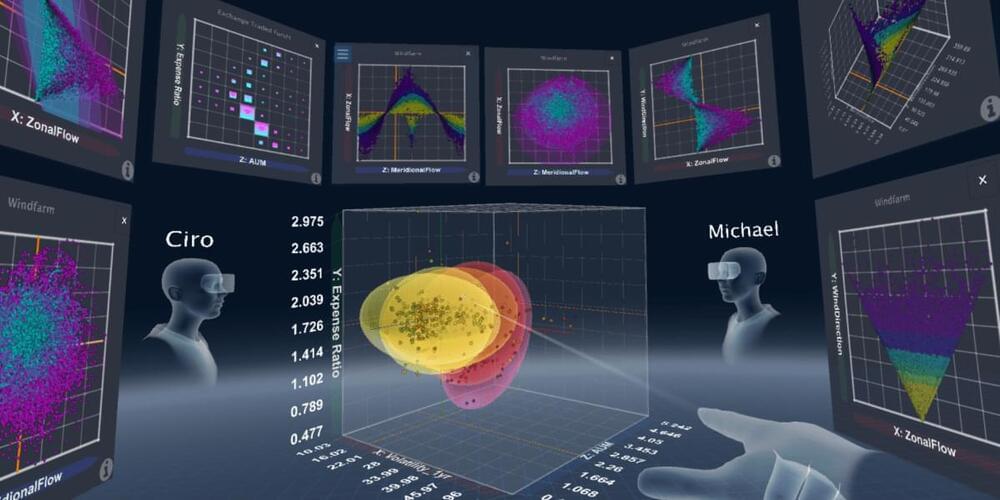

The Persian Gulf serves as a testing ground for Task Force 59's fleet of robot ships. These uncrewed vessels range from solar-powered kayaks to surfboard-style boats. They act as the eyes and ears of the Navy, collecting data with cameras, radar, and hydrophones. Pattern-matching algorithms then help distinguish routine traffic, such as oil tankers, from potential threats, such as smugglers.

One vessel, the Triton, stands out for its ability to submerge for extended periods, which helps it evade enemy detection and resurface only when it is safe to do so. The Triton can stay underwater for up to five days before surfacing to recharge its batteries and transmit the data it has gathered back to base.