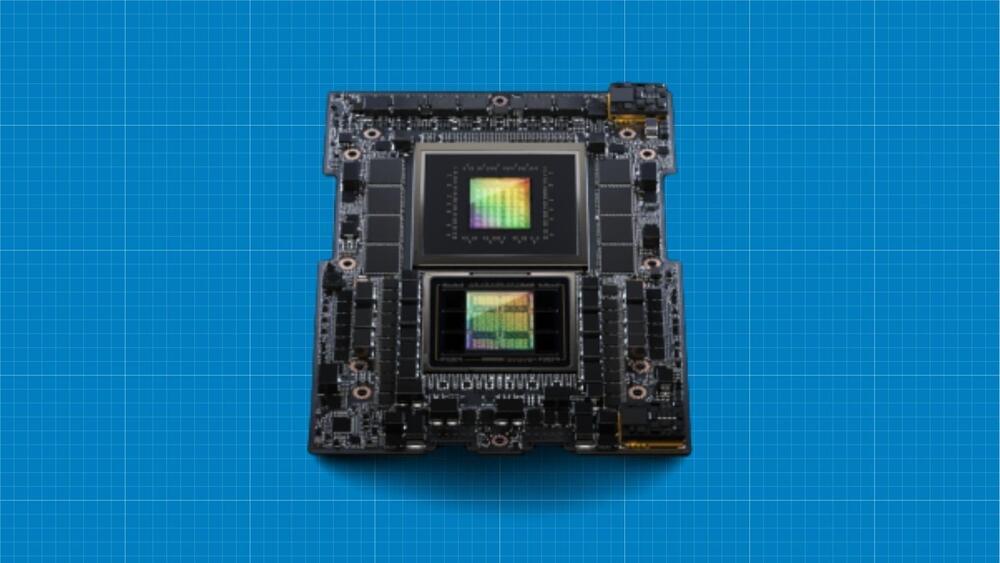

In its dual configuration, the superchips will offer 3.5x more memory capacity and 3x more bandwidth than the current generation chips.

The world’s leading supplier of chips for artificial intelligence (AI) applications, Nvidia, has unveiled its next generation of superchips created to handle the “most complex generative AI workloads,” the company said in a press release. Dubbed GH200 GraceHopper, the platform also features the world’s first HBM3e processor.

The new superchip has been designed by combining Nvidia’s Hopper platform, which houses the graphic processing unit (GPU), with the Grace CPU platform, which handles the processing needs. Both these platforms were named in honor of Grace… More.

Nvidia.