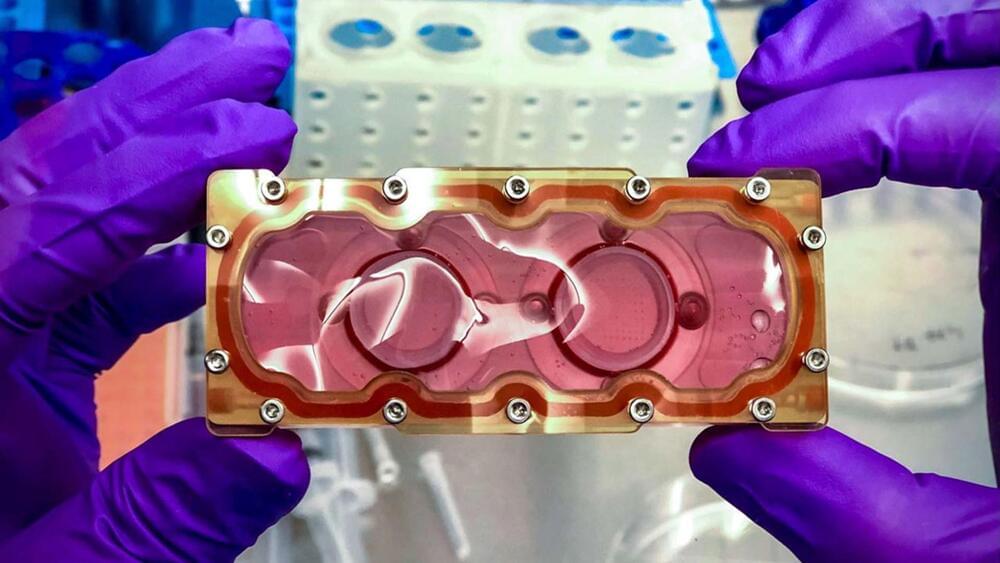

OpenAI announced its latest language model, GPT-4, but many in the AI community were disappointed by the lack of public information. Their complaints reflect growing tensions in the AI world over safety.

Yesterday, OpenAI announced GPT-4, its long-awaited next-generation AI language model.


Many in the AI community have criticized OpenAI's decision to withhold key details about the model, noting that it undermines the company's founding ethos as a research organization and makes it harder for others to replicate its work. Perhaps more significantly, some say it also makes it harder to develop safeguards against the kinds of threats posed by AI systems like GPT-4. These complaints come at a time of rising tension and rapid progress in the AI world.

“I think we can call it shut on ‘Open’ AI: the 98 page paper introducing GPT-4 proudly declares that they’re disclosing *nothing* about the contents of their training set,” tweeted Ben Schmidt, VP of information design at Nomic AI, in a thread on the topic.