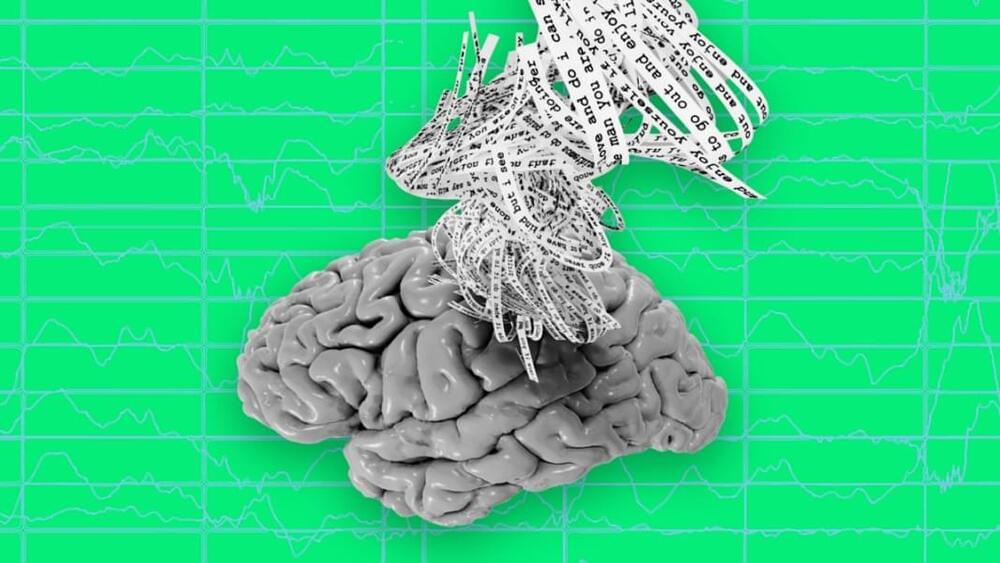

Language and speech are how we express our inner thoughts. But neuroscientists just bypassed the need for audible speech, at least in the lab. Instead, they directly tapped into the biological machine that generates language and ideas: the brain.

Using brain scans and a hefty dose of machine learning, a team from the University of Texas at Austin developed a “language decoder” that captures the gist of what a person hears based on their brain activation patterns alone. Far from a one-trick pony, the decoder can also translate imagined speech, and even generate descriptive subtitles for silent movies a person watches, using only their neural activity.

Here’s the kicker: the method doesn’t require surgery. Rather than relying on implanted electrodes, which listen in on electrical bursts directly from neurons, the neurotechnology uses functional magnetic resonance imaging (fMRI), a completely non-invasive procedure that tracks changes in blood flow as a proxy for neural activity, to generate brain maps that correspond to language.