AI can also help develop objective risk stratification scores, predict the course of disease or treatment outcomes in chronic liver disease (CLD) or liver cancer, make liver transplantation easier and more successful, and develop quality metrics for hepatology.

Artificial Intelligence (AI) is an umbrella term that covers all computational processes aimed at mimicking and extending human intelligence for problem-solving and decision-making. It is based on algorithms, or sets of mathematical formulae, that make up specific computational learning methods. Machine learning (ML) and its subset, deep learning (DL), use such algorithms in more complex ways, learning from data in order to predict both known outcomes and new ones.

Hepatology relies heavily on imaging, an area where AI is particularly well suited. Machine learning is being used to extract rich information from imaging and clinical data, supporting accurate, non-invasive diagnosis of multiple liver conditions.

While ChatGPT and its associated AI models are clearly not human (despite the hype surrounding their marketing), if the updates perform as shown, they could represent a significant expansion in capabilities for OpenAI’s computer assistant.

On Monday, OpenAI announced a significant update to ChatGPT that enables its GPT-3.5 and GPT-4 AI models to analyze images and react to them as part of a text conversation. Also, the ChatGPT mobile app will add speech synthesis options that, when paired with its existing speech recognition features, will enable fully verbal conversations with the AI assistant, OpenAI says.

OpenAI says the new image recognition feature in ChatGPT lets users upload one or more images for conversation, using either the GPT-3.5 or GPT-4 models. In its promotional blog post, the company claims the feature can be used for a variety of everyday applications: from figuring out what’s for dinner by taking pictures of the fridge and pantry, to troubleshooting why your grill won’t start.

Insilico Medicine, a clinical-stage, generative-AI-driven drug discovery company, has announced that it used Microsoft BioGPT to identify targets against both the aging process and major age-related diseases.

Longevity.Technology: ChatGPT, the AI chatbot, can craft poems, write web code and plan holidays. Large language models (LLMs) are the cornerstone of chatbots like GPT-4. Trained on vast amounts of text data, they have contributed to advances in diverse fields including literature, art and science, but their potential in the complex realms of biology and genomics has yet to be fully unlocked.

Forward-looking: While AI has been at the forefront of most tech industry conversations this year, the new wave of generative AI is still far from the concept of an artificial general intelligence (AGI). However, legendary developer John Carmack believes such a technology will be shown off sometime around 2030.

Carmack, of course, is best known as the co-founder of id Software and lead programmer of Wolfenstein 3D, Doom, and Quake. He left Oculus in December last year to focus on Keen Technologies, his new AGI startup.

In an announcement video (via The Reg) revealing that Keen has hired Richard Sutton, chief scientific advisor at the Alberta Machine Intelligence Institute, Carmack said the new hire was ideally positioned to work on AGI.

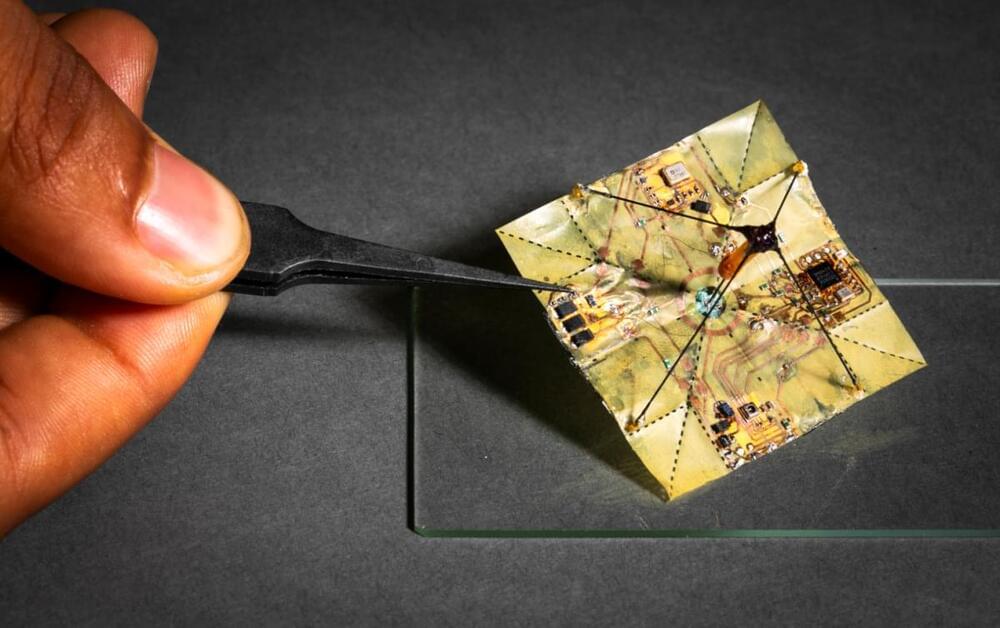

Scientists at the University of Washington have developed flying robots that change shape in mid-air without batteries, as reported in the research journal Science Robotics. These miniature Transformers snap into a folded position during flight to stabilize their descent. They weigh just 400 milligrams and carry an onboard, battery-free actuator with a solar power-harvesting circuit.

Here’s how they work. Once dropped from a drone at a height of about 130 feet (roughly 40 meters), the robots mimic the way different types of leaves fall. Their origami-inspired design lets them switch from an unfolded to a folded state in just 25 milliseconds. The two states produce different descent trajectories: unfolded, the robot floats around on the breeze, while folded, it falls more directly. Small robots are nothing new, but this is the first solar-powered microflier that can control its descent, using an onboard pressure sensor to estimate altitude, an onboard timer and a simple Bluetooth receiver.
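The article does not describe the robots' control logic. As an illustration only, the minimal Python sketch below shows how a pressure reading and a timer could together decide when to fold. The function names, the thresholds and the use of the international barometric formula are all assumptions, not the University of Washington team's design.

```python
# Hypothetical descent controller for a folding microflier (illustrative only).
# The thresholds and the decision rule are assumptions, not the published design.

SEA_LEVEL_HPA = 1013.25  # standard-atmosphere reference pressure, in hPa


def altitude_m(pressure_hpa: float, p0_hpa: float = SEA_LEVEL_HPA) -> float:
    """Estimate altitude (meters) from static pressure using the
    international barometric formula for the lower atmosphere."""
    return 44330.0 * (1.0 - (pressure_hpa / p0_hpa) ** (1.0 / 5.255))


def should_fold(
    pressure_hpa: float,
    ground_pressure_hpa: float,
    elapsed_s: float,
    fold_below_m: float = 10.0,  # hypothetical height at which to switch to the folded state
    fold_after_s: float = 5.0,   # hypothetical timer fallback, in seconds
) -> bool:
    """Return True when the flier should snap into its folded (faster-falling) state."""
    height_above_ground = altitude_m(pressure_hpa) - altitude_m(ground_pressure_hpa)
    return height_above_ground <= fold_below_m or elapsed_s >= fold_after_s


if __name__ == "__main__":
    ground = 1013.25
    for pressure, t in [(1008.45, 0.5), (1012.30, 2.0), (1008.45, 6.0)]:
        h = altitude_m(pressure) - altitude_m(ground)
        print(f"p={pressure} hPa, t={t} s, height ~{h:.1f} m, fold={should_fold(pressure, ground, t)}")
```

The timer acts as a fallback, so the flier still changes state if the altitude estimate is noisy. Near sea level, pressure drops by roughly 0.12 hPa per meter of height, so a 130-foot drop spans only a few hectopascals.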

The instrumental title track “I Robot” and the successful single “I Wouldn’t Want To Be Like You” open “I Robot”, a progressive rock album recorded by The Alan Parsons Project and engineered by Alan Parsons and Eric Woolfson in 1977. It was released by Arista Records in 1977 and re-released on CD in 1984 and 2007. The album was intended to be based on the “I, Robot” stories written by Isaac Asimov, and Woolfson in fact spoke with Asimov, who was enthusiastic about the concept. However, because the rights had already been granted to a TV/movie company, the album’s title was altered slightly by removing the comma, and the theme and lyrics were made more generically about robots rather than specific to the Asimov universe. The cover inlay reads: “I ROBOT… THE STORY OF THE RISE OF THE MACHINE AND THE DECLINE OF MAN, WHICH PARADOXICALLY COINCIDED WITH HIS DISCOVERY OF THE WHEEL… AND A WARNING THAT HIS BRIEF DOMINANCE OF THIS PLANET WILL PROBABLY END, BECAUSE MAN TRIED TO CREATE ROBOT IN HIS OWN IMAGE.”

The Alan Parsons Project were a British progressive rock band, active between 1975 and 1990, founded by Eric Woolfson and Alan Parsons. Englishman Alan Parsons (born 20 December 1948) met Scotsman Eric Norman Woolfson (18 March 1945 – 2 December 2009) in the canteen of Abbey Road Studios in the summer of 1974. Parsons had already worked as assistant engineer on The Beatles’ “Abbey Road” and “Let It Be”, had recently engineered Pink Floyd’s “The Dark Side Of The Moon”, and had produced several acts for EMI Records. Woolfson, a songwriter and composer, was working as a session pianist and had also composed material for a concept album based on the work of Edgar Allan Poe. Parsons asked Woolfson to become his manager, and Woolfson managed Parsons’ career as a producer and engineer through a string of successes including Pilot, Steve Harley, Cockney Rebel, John Miles, Al Stewart, Ambrosia and The Hollies.

Parsons commented at the time that he felt frustrated at having to accommodate the views of some of the musicians, which he felt interfered with his production. Woolfson came up with the idea of making an album modeled on developments in the film industry, where directors such as Alfred Hitchcock and Stanley Kubrick, rather than individual film stars, were the focal point of a film’s promotion. If the film industry was becoming a director’s medium, Woolfson felt the music business might well become a producer’s medium. Recalling his earlier Edgar Allan Poe material, Woolfson saw a way to combine his and Parsons’ respective talents: Parsons would produce and engineer songs written by the two, and The Alan Parsons Project was born.

What if you could hear photos? Impossible, right? Not anymore. With the help of artificial intelligence (AI) and machine learning, researchers can now recover audio from photos and silent videos.

Academics from four US universities have teamed up to develop a technique called Side Eye that can extract audio from static photos and silent – or muted – videos.

The technique targets the image stabilization technology that is now standard on most modern smartphones.

Effective compression is about finding patterns that make data smaller without losing information. When an algorithm or model can accurately guess the next piece of data in a sequence, it shows that it is good at spotting these patterns, and the more confidently it predicts what comes next, the fewer bits are needed to encode it. This links making good guesses, which is what large language models like GPT-4 do very well, to achieving good compression.
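To make the link between prediction and compression concrete, below is a toy Python sketch. It is not the method from the paper. A simple count-based byte model stands in for a language model: each byte costs −log2(p) bits, where p is the probability the model assigned to that byte before seeing it. This is the size an ideal entropy coder, such as an arithmetic coder, would approach when driven by the same predictions. The function name and the example text are illustrative assumptions.

```python
import math
from collections import defaultdict


def code_length_bits(data: bytes, order: int) -> float:
    """Ideal code length, in bits, of `data` under an adaptive order-k byte model.

    Each byte costs -log2(p), where p is the probability the model assigned to it
    before seeing it. An arithmetic coder driven by the same predictions would
    produce output within a few bits of this total.
    """
    counts = defaultdict(lambda: [1] * 256)  # add-one (Laplace) counts per context
    totals = defaultdict(lambda: 256)        # running sum of counts per context
    bits = 0.0
    for i, byte in enumerate(data):
        ctx = data[max(0, i - order):i]      # the previous `order` bytes (fewer at the start)
        bits += -math.log2(counts[ctx][byte] / totals[ctx])
        counts[ctx][byte] += 1               # update the model only after coding the byte
        totals[ctx] += 1
    return bits


if __name__ == "__main__":
    text = b"the cat sat on the mat. " * 500
    raw_bits = 8 * len(text)
    for k in (0, 1, 2):
        rate = 100 * code_length_bits(text, k) / raw_bits
        print(f"order {k}: {rate:.1f}% of raw size")
```

A model that looks at more context predicts this repetitive text better, so its total code length drops. A decoder that runs the same model can rebuild the identical predictions, which is why the scheme stays lossless.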

In an arXiv research paper titled “Language Modeling Is Compression,” researchers detail their discovery that the DeepMind large language model (LLM) called Chinchilla 70B can perform lossless compression on image patches from the ImageNet image database to 43.4 percent of their original size, beating the PNG algorithm, which compressed the same data to 58.5 percent. For audio, Chinchilla compressed samples from the LibriSpeech audio data set to just 16.4 percent of their raw size, outdoing FLAC compression at 30.3 percent.
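The reported figures are compression rates: compressed size divided by raw size. The comparison below is plain arithmetic on the percentages quoted above, rounded to three decimal places. For example, 100 MB of raw image patches would shrink to about 43.4 MB with Chinchilla versus 58.5 MB with PNG.

```latex
\begin{aligned}
\text{rate} &= \frac{\text{compressed size}}{\text{raw size}} \times 100\% \\
\text{images: } \frac{43.4}{58.5} &\approx 0.742 \;\Rightarrow\; \text{Chinchilla output} \approx 26\%\ \text{smaller than PNG output} \\
\text{audio: } \frac{16.4}{30.3} &\approx 0.541 \;\Rightarrow\; \text{Chinchilla output} \approx 46\%\ \text{smaller than FLAC output}
\end{aligned}
```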

In these results, lower numbers mean more compression. Lossless compression means the original data can be reconstructed exactly, with nothing lost. This contrasts with lossy techniques such as JPEG, which discard some data to significantly reduce file sizes and then approximate the missing information during decoding.
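The sketch below illustrates these terms in Python. It uses the standard-library `zlib` module (DEFLATE, the same family of algorithm PNG uses internally) as a stand-in lossless compressor, and crude quantization as a stand-in lossy step. JPEG's actual pipeline is considerably more involved, and the helper names here are hypothetical.

```python
import random
import zlib


def compression_rate(raw: bytes, level: int = 9) -> float:
    """Losslessly compress `raw` with zlib (DEFLATE) and return the compressed
    size as a percentage of the raw size; lower means more compression."""
    compressed = zlib.compress(raw, level)
    # Lossless means the round trip reproduces the input byte for byte.
    if zlib.decompress(compressed) != raw:
        raise ValueError("round trip failed")
    return 100.0 * len(compressed) / len(raw)


def quantize(raw: bytes, step: int = 16) -> bytes:
    """A crude lossy step: round each byte down to a multiple of `step`.
    The discarded low-order bits cannot be recovered afterwards."""
    return bytes((b // step) * step for b in raw)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    samples = bytes(rng.randrange(256) for _ in range(8192))  # noise-like data
    print(f"lossless on raw samples:       {compression_rate(samples):.1f}%")
    print(f"lossless after lossy quantize: {compression_rate(quantize(samples)):.1f}%")
```

Quantizing first makes the lossless output smaller because information has been thrown away. That is the trade-off lossy formats make: a smaller file, but one that decodes to an approximation rather than the original.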