No one knew how popular OpenAI’s DALL-E would be in 2022, and no one knows where its rise will leave us.

Australian researchers have designed an algorithm that can intercept a man-in-the-middle (MitM) cyberattack on an unmanned military robot and shut it down in seconds.

In an experiment using deep learning neural networks to simulate the behavior of the human brain, artificial intelligence experts from Charles Sturt University and the University of South Australia (UniSA) trained the robot’s operating system to learn the signature of a MitM eavesdropping cyberattack. In a MitM attack, attackers interrupt an existing conversation or data transfer.

The algorithm, tested in real time on a replica of a United States army combat ground vehicle, was 99% successful in preventing a malicious attack. False positive rates of less than 2% validated the system, demonstrating its effectiveness.
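To make the two figures above concrete, here is a minimal sketch of how a detection rate and a false positive rate are usually computed from labeled test traffic. The counts are hypothetical, chosen only to land near the reported numbers, and treating "99% successful" as a detection rate is an assumption; the article does not give the underlying data.

```python
def detection_rates(tp: int, fn: int, fp: int, tn: int) -> tuple[float, float]:
    """Return (detection rate, false positive rate) from confusion-matrix counts."""
    detection_rate = tp / (tp + fn)        # share of real attacks that were flagged
    false_positive_rate = fp / (fp + tn)   # share of benign traffic wrongly flagged
    return detection_rate, false_positive_rate


# Hypothetical counts, not taken from the study.
dr, fpr = detection_rates(tp=990, fn=10, fp=15, tn=985)
print(f"detection rate: {dr:.1%}, false positive rate: {fpr:.1%}")
```

The two rates are computed over different populations (attack traffic versus benign traffic), which is why both are reported.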

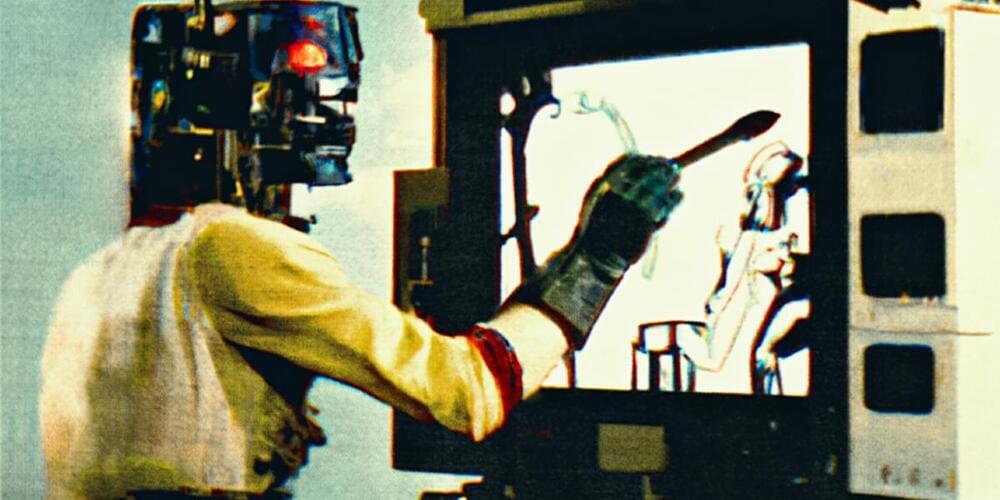

The ocean has always been a force to be reckoned with when it comes to understanding and traversing its seemingly limitless blue waters. Past innovations such as deep-sea submersibles and ocean-observing satellites have helped illuminate some wonders of the ocean, though many questions remain.

These questions are closer to being answered thanks to the development of the Intelligent Swift Ocean Observing System (ISOOS). Using this system, targeted regions of the ocean can be mapped in three dimensions, allowing more data to be gathered more safely, quickly and efficiently than existing technologies can achieve.

Researchers published their results in Ocean-Land-Atmosphere Research.

Forget the cloud.

Northwestern University engineers have developed a new nanoelectronic device that can perform accurate machine-learning classification tasks in the most energy-efficient manner yet. Using 100-fold less energy than current technologies, the device can crunch large amounts of data and perform artificial intelligence (AI) tasks in real time without beaming data to the cloud for analysis.

With its tiny footprint, ultra-low power consumption and lack of lag time to receive analyses, the device is ideal for direct incorporation into wearable electronics (like smart watches and fitness trackers) for real-time data processing and near-instant diagnostics.

Summary: Unveiling the neurological enigma of traumatic memory formation, researchers harnessed innovative optical and machine-learning methodologies to decode the brain’s neuronal networks engaged during trauma memory creation.

The team identified a neural population encoding fear memory, revealing the synchronous activation and crucial role of the dorsal part of the medial prefrontal cortex (dmPFC) in associative fear memory retrieval in mice.

Groundbreaking analytical approaches, including the ‘elastic net’ machine-learning algorithm, pinpointed specific neurons and their functional connectivity within the spatial and functional fear-memory neural network.
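For readers unfamiliar with the elastic net, its textbook form is a regression that combines an L1 (lasso) and an L2 (ridge) penalty. The formulation below is the standard one, not necessarily the exact variant the study used; the symbols $X$, $y$, $\lambda$ and $\alpha$ are generic notation, not taken from the paper.

$$
\hat{\beta} = \arg\min_{\beta} \; \frac{1}{2n}\lVert y - X\beta\rVert_2^2 + \lambda\left(\alpha\lVert\beta\rVert_1 + \frac{1-\alpha}{2}\lVert\beta\rVert_2^2\right)
$$

The L1 term drives many coefficients to exactly zero, which is what makes the method useful for picking out a small set of relevant neurons from a large recorded population.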

When ChatGPT & Co. have to check their answers themselves, they make fewer mistakes, according to a new study by Meta.

ChatGPT and other language models repeatedly reproduce incorrect information — even when they have learned the correct information. There are several approaches to reducing hallucination. Researchers at Meta AI now present Chain-of-Verification (CoVe), a prompt-based method that significantly reduces this problem.

New method relies on self-verification of the language model.
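A rough sketch of how such a self-verification loop could be wired up follows. The four stages (draft, plan checks, answer checks independently, revise) follow the Chain-of-Verification paper as commonly described, but the prompt wording, the `chain_of_verification` function and the `LLM` callable type are all hypothetical stand-ins, not Meta's implementation.

```python
from typing import Callable

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def chain_of_verification(question: str, llm: LLM) -> str:
    # 1. Draft a baseline answer.
    draft = llm(f"Answer the question.\nQuestion: {question}")

    # 2. Ask the model to plan fact-checking questions about its own draft.
    plan = llm(
        "List short fact-checking questions, one per line, for this answer.\n"
        f"Question: {question}\nAnswer: {draft}"
    )
    checks = [line.strip() for line in plan.splitlines() if line.strip()]

    # 3. Answer each check in a fresh prompt that does not include the draft,
    #    so errors in the draft are less likely to be repeated.
    answers = [llm(f"Answer concisely.\nQuestion: {c}") for c in checks]

    # 4. Revise the draft in light of the independently verified answers.
    evidence = "\n".join(f"Q: {c}\nA: {a}" for c, a in zip(checks, answers))
    return llm(
        "Rewrite the draft so it agrees with the verified facts.\n"
        f"Question: {question}\nDraft: {draft}\nVerified facts:\n{evidence}"
    )
```

Passing the model in as a plain callable keeps the sketch independent of any particular vendor API; in practice `llm` would wrap whatever chat-completion client is in use.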

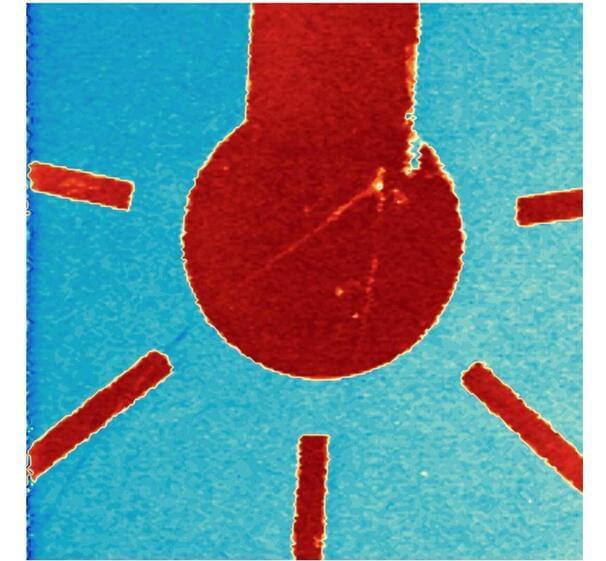

In a new Science Advances study, scientists from the University of Science and Technology of China have developed a dynamic network structure using laser-controlled conducting filaments for neuromorphic computing.

Neuromorphic computing is an emerging field of research that draws inspiration from the human brain to create efficient and intelligent computer systems. At its core, neuromorphic computing relies on artificial neural networks, which are computational models inspired by the neurons and synapses in the brain. Building hardware that implements these networks, however, remains challenging.

Mott materials have emerged as suitable candidates for neuromorphic computing due to their unique transition properties. A Mott transition involves a rapid change in electrical conductivity, often accompanied by a transition between insulating and metallic states.