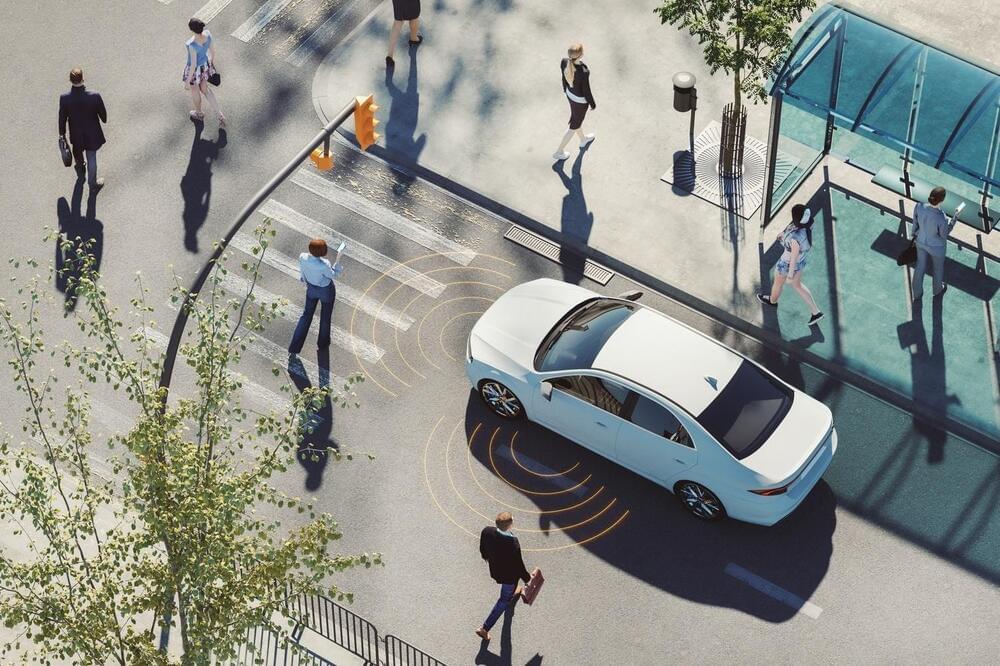

As we accelerate towards creating one of humanity’s greatest technological achievements, we need to ask ourselves: what is the cost of this development?

Artificial intelligence-powered systems not only consume huge amounts of data for training but also require tremendous amounts of electricity to run. A recent study calculated the energy use and carbon footprint of several large language models. One of them, ChatGPT, running on 10,000 NVIDIA GPUs, was found to have consumed 1,287 megawatt hours of electricity, equivalent to the energy that 121 homes in the United States use in a year.


Devrimb/iStock.

In a commentary published in the journal Joule, author Alex de Vries argues that the energy needed to power AI tools may in future exceed the power demands of some small nations.