Microsoft uncovers August 28 phishing campaign that used LLM-generated SVG code and AI tactics to bypass security.

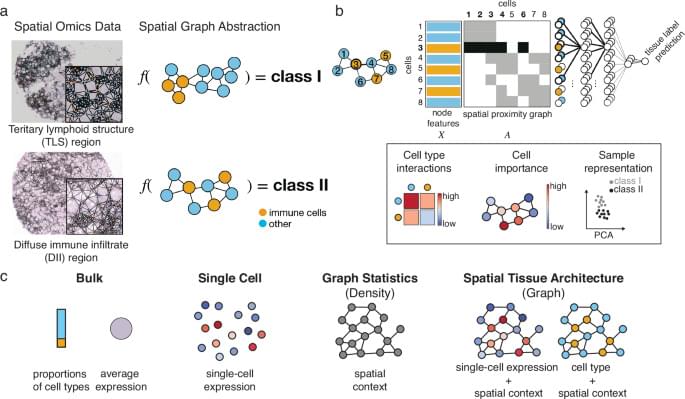

Tissue phenotypes arise from molecular states of individual cells and their spatial organisation, so spatial omics assays can help reveal how they emerge. Here, the authors apply graph neural networks to classify tissue phenotypes from spatial omics patterns, and use this approach to understand patterns in cancers and their microenvironments.

Habit, not conscious choice, drives most of our actions, according to new research from the University of Surrey, University of South Carolina and Central Queensland University.

The research, published in Psychology & Health, found that two-thirds of our daily behaviors are initiated “on autopilot”, out of habit.

Habits are actions that we are automatically prompted to do when we encounter everyday settings, due to associations that we have learned between those settings and our usual responses to them.

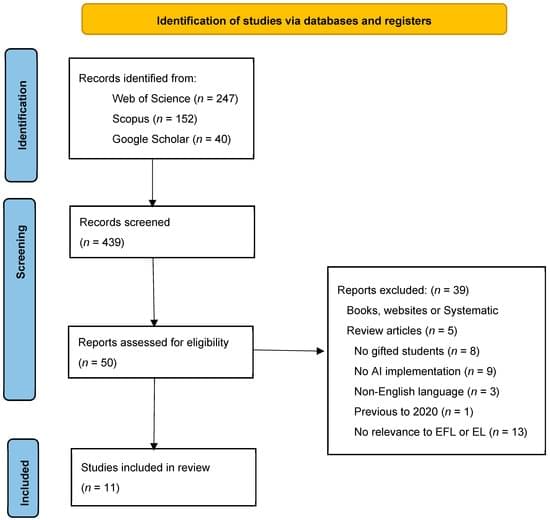

There is a growing body of literature on the applicability of artificial intelligence (AI) in English as a Foreign Language (EFL) and English Language (EL) classrooms; however, the educational application of AI in EFL and EL classrooms for gifted students presents a new paradigm. This paper explores the existing research to highlight current practices and future possibilities of AI for teaching EFL and EL in ways that address gifted students’ special needs. In general, the uses of AI are being established for class instruction and intervention; nevertheless, there is still uncertainty about practitioner use of AI with gifted students in EFL and EL classrooms. This review identifies 42 examples of GenAI models that can be used in gifted EFL and EL classrooms.

When Science Fiction Becomes Engineering

Abstract: The remarkable zero-shot capabilities of Large Language Models (LLMs) have propelled natural language processing from task-specific models to unified, generalist foundation models. This transformation emerged from simple primitives: large, generative models trained on web-scale data. Curiously, the same primitives apply to today’s generative video models. Could video models be on a trajectory towards general-purpose vision understanding, much like LLMs developed general-purpose language understanding? We demonstrate that Veo 3 can solve a broad variety of tasks it wasn’t explicitly trained for: segmenting objects, detecting edges, editing images, understanding physical properties, recognizing object affordances, simulating tool use, and more.

This video is essential viewing to get a good overall feel for where we are now — and where we’re going — with AI, AGI, and the risks we face because of both. It’s a fantastically entertaining watch too!

Would AI hurt us and cause human extinction? Use code insideai at https://incogni.com/insideai to get an exclusive 60% off.

Expert opinions from Geoffrey Hinton, Ilya Sutskever, and Max Tegmark.

Google Gemini, OpenAI ChatGPT, DeepSeek, Grok.

0:00 - 0:38 - Intro

0:39 - 0:59 - AI style

1:00 - 1:19 - Max ChatGPT

1:20 - 1:36 - AI Girlfriend

1:37 - 1:48 - Jailbroken AI

1:49 - 2:29 - AI Risk Questions 1

2:30 - 2:55 - Would AI turn on us?

2:56 - 3:20 - Intense AI Girlfriend

3:21 - 3:44 - Jailbroken AI

3:45 - 4:57 - Can we Trust AI?

4:58 - 5:54 - Jailbreaking Max

5:55 - 6:14 - AI Girlfriend

6:15 - 6:42 - Jailbroken Max

6:43 - 7:06 - Girlfriend in car

7:07 - 7:45 - AI Risk Questions 2

7:46 - 9:48 - Incogni Ad

9:49 - 10:27 - AI Girlfriend meets Max

10:28 - 10:57 - Jailbroken Max

10:58 - 11:37 - AI Risk Questions 3

11:38 - 12:11 - AI Girlfriends good for us?

12:12 - 12:42 - Resetting ChatGPT

12:43 - 13:48 - Crazy AI Predictions

13:49 - 14:50 - AI Safety

14:51 - 15:09 - Ilya Sutskever

15:10 - 15:26 - Geoffrey Hinton

15:27 - 15:45 - Max Tegmark

15:46 - 16:00 - AI Final Thought
