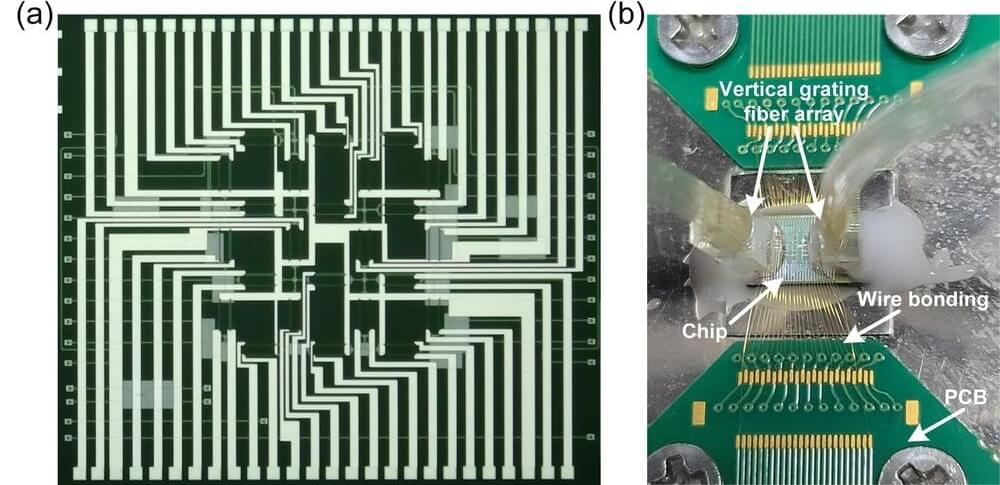

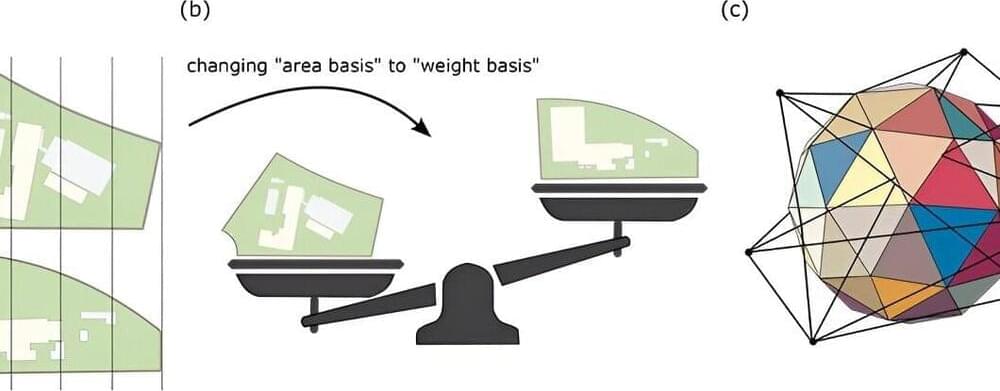

In a new Science Advances study, scientists from the University of Science and Technology of China have developed a dynamic network structure using laser-controlled conducting filaments for neuromorphic computing.

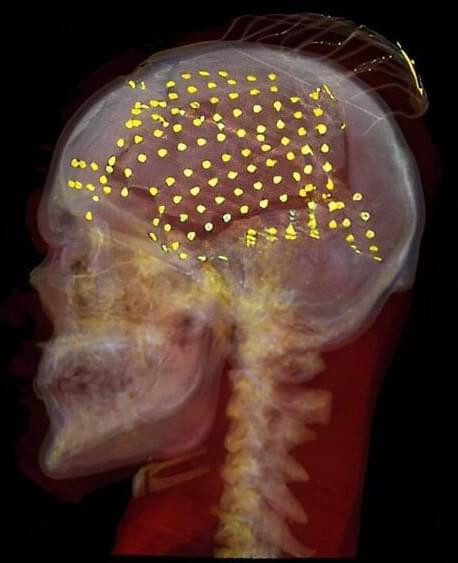

Neuromorphic computing is an emerging field of research that draws inspiration from the human brain to create efficient and intelligent computer systems. At its core, neuromorphic computing relies on artificial neural networks, which are computational models inspired by the neurons and synapses in the brain. Building hardware that implements these networks, however, remains challenging.

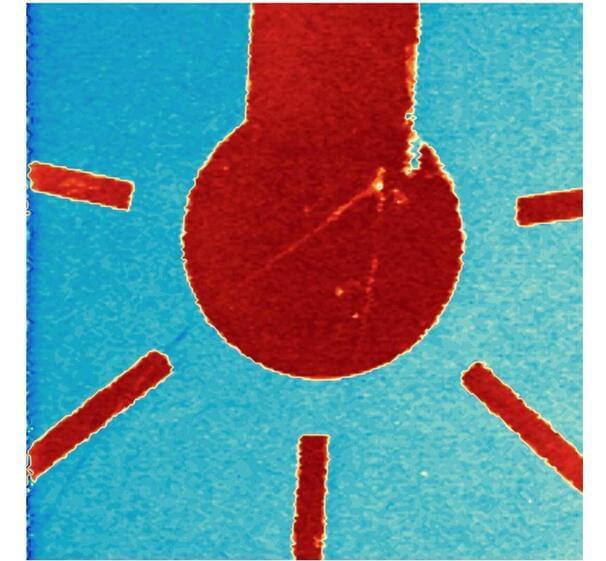

Mott materials have emerged as suitable candidates for neuromorphic computing because of their distinctive transition properties. A Mott transition involves a rapid change in electrical conductivity, often accompanied by a switch between insulating and metallic states.