An international team of scientists, including researchers from the University of Cambridge, has launched a new research collaboration that will use the same technology behind ChatGPT to build an AI-powered tool for scientific discovery.

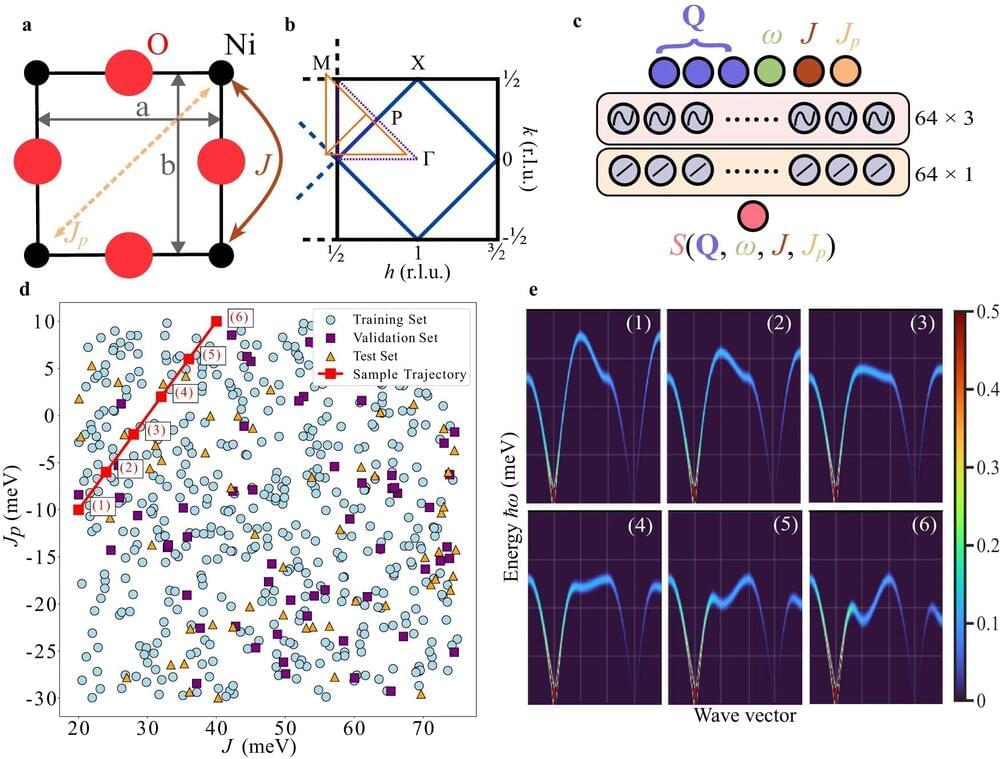

While ChatGPT deals in words and sentences, the team’s AI will learn from numerical data and physics simulations from across scientific fields to aid scientists in modeling everything from supergiant stars to the Earth’s climate.

The team launched the initiative, called Polymathic AI, earlier this week, alongside the publication of a series of related papers on the arXiv open-access repository.