This startup’s tech outperforms cryo-CMOS devices in speed and efficiency.

Albert Einstein wasn’t entirely convinced about quantum mechanics, suggesting our understanding of it was incomplete. In particular, Einstein took issue with entanglement, the notion that a particle could be affected by another particle that wasn’t close by.

Experiments since then have shown that quantum entanglement is real and that two entangled particles can remain connected over a distance. Now a new experiment further confirms it, and in a way we haven’t seen before.

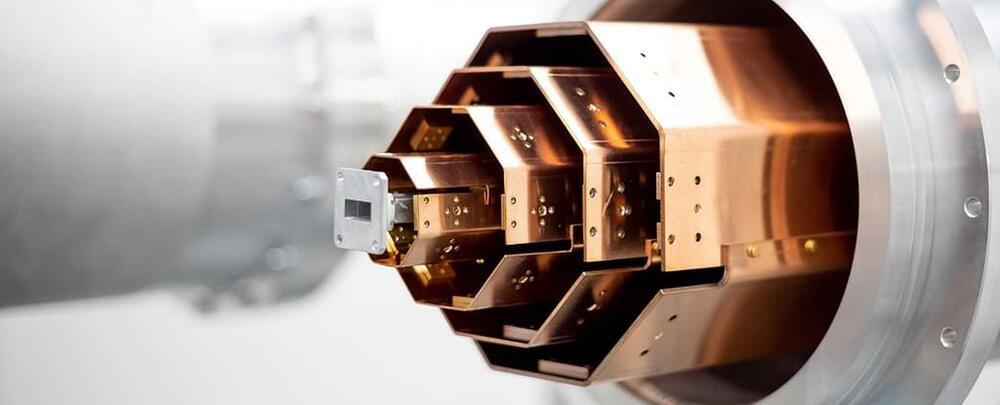

In the new experiment, scientists used a 30-meter-long tube cooled to close to absolute zero to run a Bell test: randomly chosen measurements made on two entangled qubits (quantum bits) at the same time.
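To make the idea of a Bell test concrete, here is a minimal sketch of the textbook CHSH form of the test. It is not the analysis used in the experiment. It assumes an idealized, maximally entangled (singlet) pair whose predicted correlation is E(a, b) = -cos(a - b), and the function names and measurement angles are illustrative choices. Any local hidden-variable theory keeps |S| at or below 2, while quantum mechanics allows up to 2√2.

```python
import math

def correlation(angle_a, angle_b):
    # Quantum prediction for an ideal singlet pair measured along
    # directions angle_a and angle_b (in radians). Assumed model.
    return -math.cos(angle_a - angle_b)

def chsh_value(a, a_prime, b, b_prime):
    # CHSH combination of the four correlations. Local hidden-variable
    # theories require |S| <= 2.
    return (correlation(a, b) - correlation(a, b_prime)
            + correlation(a_prime, b) + correlation(a_prime, b_prime))

# Standard angle choice that maximizes the quantum violation.
s = chsh_value(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)

print(f"|S| = {abs(s):.3f} (classical bound: 2)")
```

In a real experiment the correlations are estimated from many repeated measurements with randomly chosen settings, so the observed value carries statistical uncertainty and falls below the ideal 2√2.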

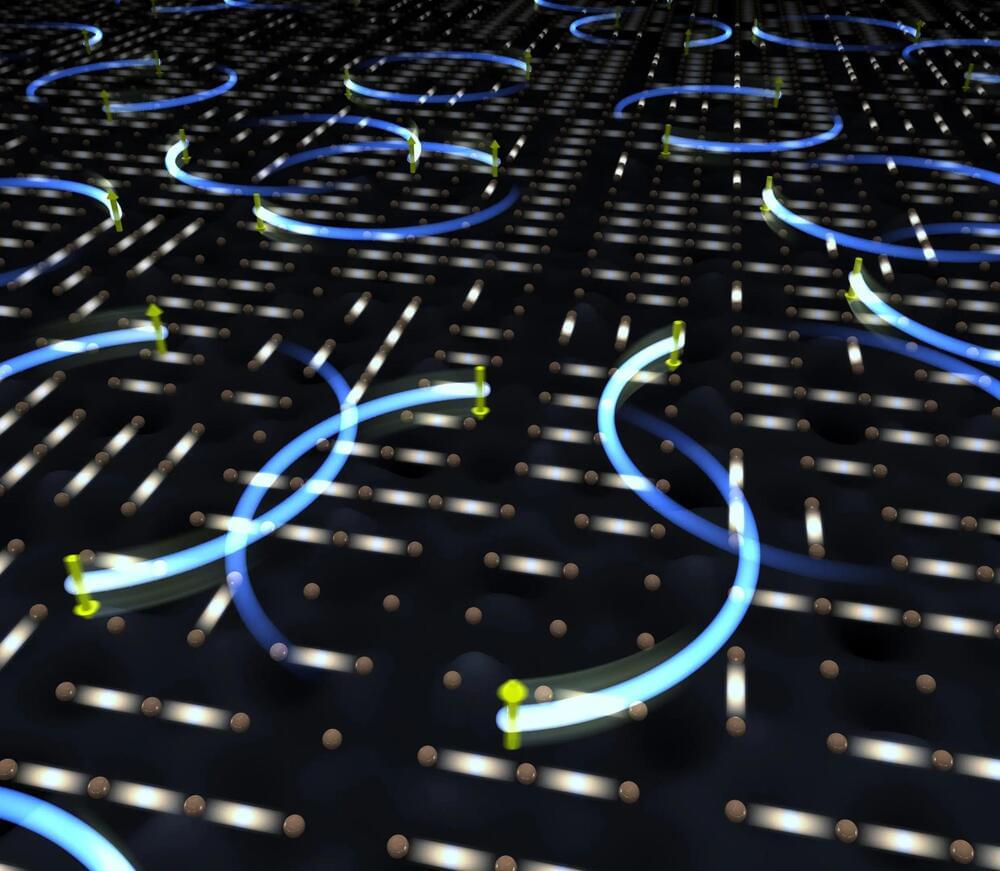

Summary: For the first time, Google Quantum AI has observed the peculiar behavior of non-Abelian anyons, particles with the potential to revolutionize quantum computing by making operations more resistant to noise.

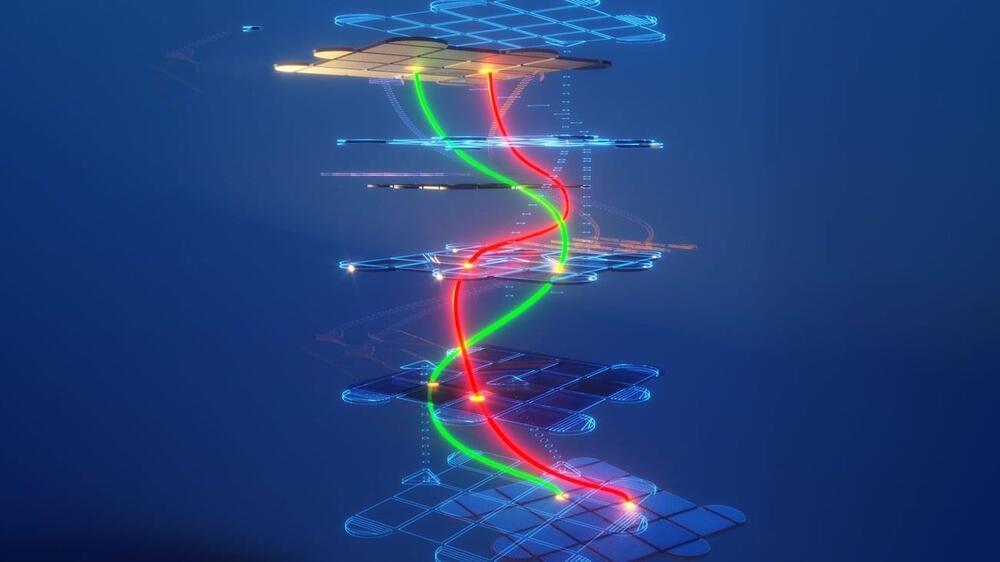

Non-Abelian anyons have the unique feature of retaining a sort of memory, allowing us to determine when they have been exchanged, even though they are identical.
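The "memory" can be illustrated with a toy calculation. The sketch below is not the Google team's protocol. It assumes the standard two-dimensional representation of exchanges among four Ising-type anyons, written up to convention-dependent overall phases, and the names `BRAID_12` and `BRAID_23` are hypothetical labels for exchanging neighboring pairs. Because the two matrices do not commute, the final state depends on the order of the exchanges.

```python
import cmath
import math

def matmul(a, b):
    # Product of two 2x2 complex matrices stored as nested lists.
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def max_difference(a, b):
    # Largest entry-wise distance between two 2x2 matrices.
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))

# Assumed representation: exp(-i*pi/4 * sigma_z) and exp(-i*pi/4 * sigma_x).
p = cmath.exp(-1j * math.pi / 4)
r = 1 / math.sqrt(2)
BRAID_12 = [[p, 0], [0, p.conjugate()]]
BRAID_23 = [[r, -1j * r], [-1j * r, r]]

# Matrices act right to left: the rightmost exchange happens first.
exchange_12_then_23 = matmul(BRAID_23, BRAID_12)
exchange_23_then_12 = matmul(BRAID_12, BRAID_23)

orders_differ = max_difference(exchange_12_then_23, exchange_23_then_12) > 1e-9
print("Order of exchanges matters:", orders_differ)
```

For ordinary (Abelian) particles each exchange would only multiply the state by a number, and numbers commute, so no record of the order would survive.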

The team used these anyons to perform quantum computations, a result that opens a new path toward fault-tolerant topological quantum computing.

A novel protocol for quantum computers could reproduce the complex dynamics of quantum materials.

RIKEN researchers have created a hybrid quantum-computational algorithm that can efficiently calculate atomic-level interactions in complex materials. This innovation enables the use of smaller quantum computers or conventional ones to study condensed-matter physics and quantum chemistry, paving the way for new discoveries in these fields.

A quantum-computational algorithm that could be used to efficiently and accurately calculate atomic-level interactions in complex materials has been developed by RIKEN researchers. It has the potential to bring an unprecedented level of understanding to condensed-matter physics and quantum chemistry—an application of quantum computers first proposed by the brilliant physicist Richard Feynman in 1981.

It could be a strange way of achieving immortality—or at least, everlasting life for copies of you.

Graphene’s valence and conduction bands meet at a point, making the single-layer crystal a semimetal. Researchers have predicted that spin-orbit coupling of carbon’s outer electrons opens a narrow gap between these bands—but only for the crystal’s bulk. Along the edges, spin-dependent states bridge the band gap, allowing the resistance-free flow of electrons: a quantum spin Hall effect. The weakness of carbon’s spin-orbit coupling means that this quantum spin Hall effect is too fragile to observe, however. Now Pantelis Bampoulis of the University of Twente in the Netherlands and his collaborators have seen the quantum spin Hall effect in graphene’s germanium (Ge) analog, germanene [1]. Furthermore, they show that germanene’s structure—a honeycomb like graphene’s, but lightly buckled—allows the quantum spin Hall effect to be turned off and on using an electric field.

Bampoulis and his collaborators grew a germanene monolayer on a buffer layer of Ge atop a substrate of Ge₂Pt. Using a scanning tunneling microscope, they discriminated between the edge and the bulk states of germanene and measured how current depended on voltage under an external electric field perpendicular to the layer. At low field strengths, germanene exhibited a robust quantum spin Hall effect due to germanium’s strong spin-orbit coupling. At high field strengths, the edge states no longer bridged the gap and germanene became a normal insulator. But at a critical intermediate value, germanene underwent a topological phase transition as the otherwise separated conduction and valence bands in the bulk came together and the symmetry that sustained the quantum spin Hall effect was destroyed.
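The three regimes can be reproduced with a commonly used low-energy model of buckled honeycomb lattices. The model is an assumption here and is not taken from the study. In it the bulk gap is 2|λ_SO − ℓ|E||, where λ_SO is the spin-orbit coupling strength and ℓ measures how strongly the buckling couples the two sublattices to a perpendicular field E. The parameter names and the dimensionless default values below are illustrative only.

```python
import math

def bulk_gap(e_field, lambda_so=1.0, ell=1.0):
    # Bulk gap in the assumed low-energy model, in the same
    # (arbitrary) energy units as lambda_so.
    return 2.0 * abs(lambda_so - ell * abs(e_field))

def classify_phase(e_field, lambda_so=1.0, ell=1.0):
    # The gap closes at the critical field lambda_so / ell, which
    # separates the topological phase from the trivial one.
    critical_field = lambda_so / ell
    if math.isclose(abs(e_field), critical_field):
        return "critical point (gap closed)"
    if abs(e_field) < critical_field:
        return "quantum spin Hall insulator"
    return "normal insulator"

for field in (0.0, 0.5, 1.0, 2.0):
    print(field, bulk_gap(field), classify_phase(field))
```

In this picture the gap shrinks as the field grows, vanishes at the critical value, and then reopens with the edge states gone, which matches the sequence described above.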

The robustness of germanene’s quantum spin Hall effect and the fact that it can be turned off with an applied electric field suggest that the material could be used to make room-temperature topological field-effect transistors.