Simon C. Marshall, Casper Gyurik, and Vedran Dunjko.

Leiden University, Leiden, The Netherlands.


We often believe computers are more efficient than humans. After all, computers can complete a complex math equation in a moment and can also recall the name of that one actor we keep forgetting. However, human brains can process complicated layers of information quickly, accurately, and with almost no energy input: recognizing a face after only seeing it once or instantly knowing the difference between a mountain and the ocean.

These simple human tasks require enormous processing and energy input from computers, and even then computers manage them with varying degrees of accuracy.

Creating brain-like computers with minimal energy requirements would revolutionize nearly every aspect of modern life. Quantum Materials for Energy Efficient Neuromorphic Computing (Q-MEEN-C)—a nationwide consortium led by the University of California San Diego—has been at the forefront of this research.

There is increasing talk of quantum computers and how they will allow us to solve problems that traditional computers cannot solve. It’s important to note that quantum computers will not replace traditional computers: they are intended for problems that are beyond the practical reach of classical mainframe computers and supercomputers. Any problem that is impossible in principle to solve with classical computers will also be impossible with quantum computers. Traditional computers will also always be more adept than quantum computers at memory-intensive tasks such as sending and receiving e-mail messages, managing documents and spreadsheets, desktop publishing, and so on.

There is nothing “magic” about quantum computers. Still, the mathematics and physics that govern their operation are more complex and belong to the realm of quantum physics.

The idea of quantum physics is still surrounded by an aura of great intellectual distance from the vast majority of us. It is a subject associated with the great minds of the 20th century such as Werner Heisenberg, Niels Bohr, Max Planck, Wolfgang Pauli, and Erwin Schrödinger, whose famous hypothetical cat experiment was popularized in an episode of the hit TV show ‘The Big Bang Theory’. Schrödinger’s reflections on measurement serve as a reminder of the enigmatic nature of quantum mechanics. In the standard interpretation, some characteristics of an examined particle (such as spin or position) take definite values only at the moment of detection. Schrödinger illustrated this with a thought experiment known as the paradox of Schrödinger’s cat. The experiment is worth mentioning, as it describes one of the most important aspects of quantum computing.

A team from the University of Chicago.

Founded in 1890, the University of Chicago (UChicago, U of C, or Chicago) is a private research university in Chicago, Illinois. Located on a 217-acre campus in Chicago’s Hyde Park neighborhood, near Lake Michigan, the school holds top-ten positions in various national and international rankings. UChicago is also well known for its professional schools: Pritzker School of Medicine, Booth School of Business, Law School, School of Social Service Administration, Harris School of Public Policy Studies, Divinity School, Graham School of Continuing Liberal and Professional Studies, and Pritzker School of Molecular Engineering.

When Albert Einstein famously said “God does not play dice with the universe” he wasn’t objecting to the idea that randomness exists in our everyday lives.

What he didn’t like was the idea that randomness is so essential to the laws of physics that, even with the most precise measurements and carefully controlled experiments, there would always be some level at which the outcome is effectively an educated guess. He believed there was another option.

This video discusses how probability is determined in quantum mechanics. Let’s play some dice with the universe and talk about it.

Join Katie Mack, Perimeter Institute’s Hawking Chair in Cosmology and Science Communication, over 10 short forays into the weird, wonderful world of quantum science. Episodes are published weekly; subscribe to our channel so you don’t miss an update.

Want to learn more about quantum concepts? Visit https://perimeterinstitute.ca/quantum-101-quantum-science-explained to access free resources.


Quantum information (QI) processing has the potential to revolutionize technology, offering unparalleled computational power, safety, and detection sensitivity.

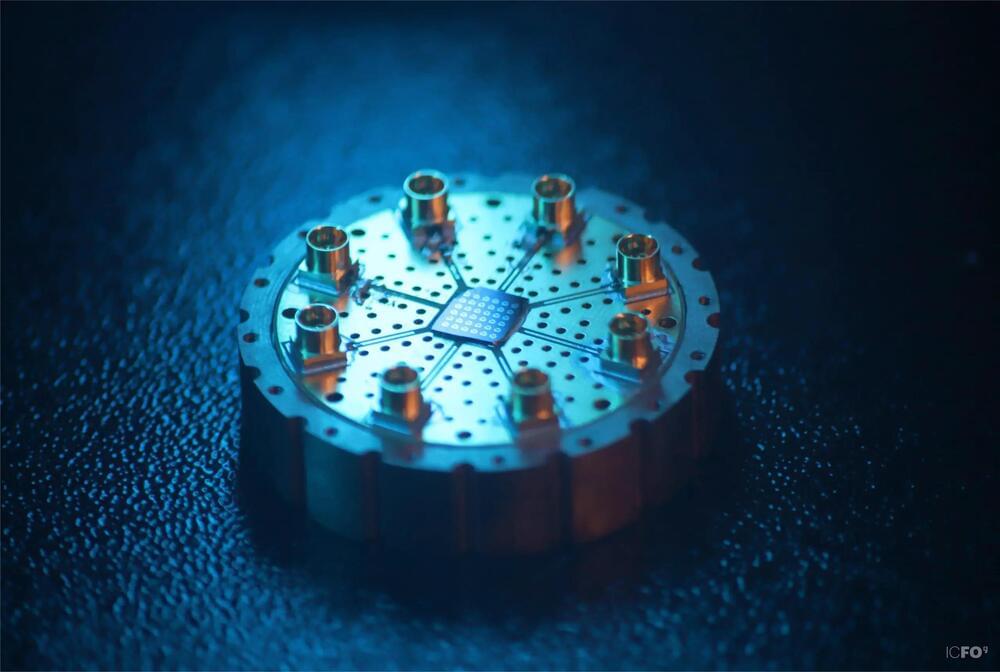

Qubits, the fundamental units of hardware for quantum information, serve as the cornerstone for quantum computers and the processing of quantum information. However, there remains substantial discussion regarding which types of qubits are actually the best.

Research and development in this field are advancing at an astonishing pace as competing systems and platforms race to outperform one another. To mention a few, platforms as diverse as superconducting Josephson junctions, trapped ions, topological qubits, ultra-cold neutral atoms, and even diamond vacancies make up the zoo of possibilities for building qubits.

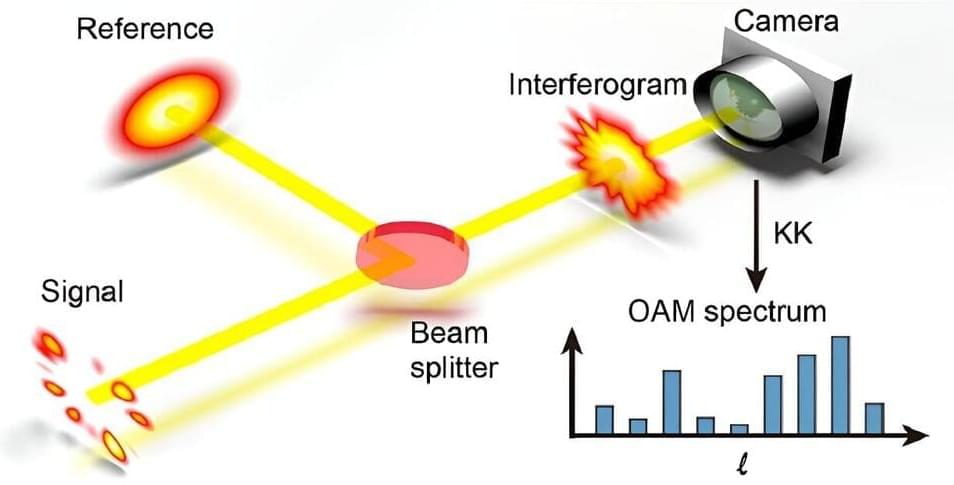

Structured light waves with spiral phase fronts carry orbital angular momentum (OAM), attributed to the rotational motion of photons. Recently, scientists have been using light waves with OAM, and these special “helical” light beams have become very important in various advanced technologies like communication, imaging, and quantum information processing. In these technologies, it’s crucial to know the exact structure of these special light beams. However, this has proven to be quite tricky.

Interferometry—superimposing a light field with a known reference field to extract information from the interference—can retrieve OAM spectrum information using a camera. As the camera only records the intensity of the interference, the measurement technique encounters additional crosstalk known as “signal-signal beat interference” (SSBI), which complicates the retrieval process. It’s like hearing multiple overlapping sounds, making it difficult to distinguish the original notes.
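The origin of this crosstalk can be seen by writing out what an intensity-only camera records. This is a minimal sketch; the symbols $E_r$ (known reference field) and $E_s$ (unknown signal field) are notation assumed here for illustration and do not come from the paper:

$$
I = \lvert E_r + E_s \rvert^2 = \lvert E_r \rvert^2 + 2\,\mathrm{Re}\!\left(E_r^{*} E_s\right) + \lvert E_s \rvert^2
$$

The middle term is linear in the signal and carries the information to be retrieved. The last term, $\lvert E_s \rvert^2$, is the signal beating with itself, which is the SSBI contribution that overlaps with the useful term and has to be separated out.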

In a recent breakthrough reported in Advanced Photonics, researchers from Sun Yat-sen University and École Polytechnique Fédérale de Lausanne (EPFL) used a powerful mathematical tool called the Kramers-Kronig (KK) relation to solve this problem. The tool enabled them to untangle the complex helical light pattern from the camera’s intensity-only measurements, allowing single-shot retrieval in simple on-axis interferometry. Exploiting the duality between the time-frequency and azimuth-OAM domains, they applied the KK approach to investigate various OAM fields, including Talbot self-imaged petals and fractional OAM modes.

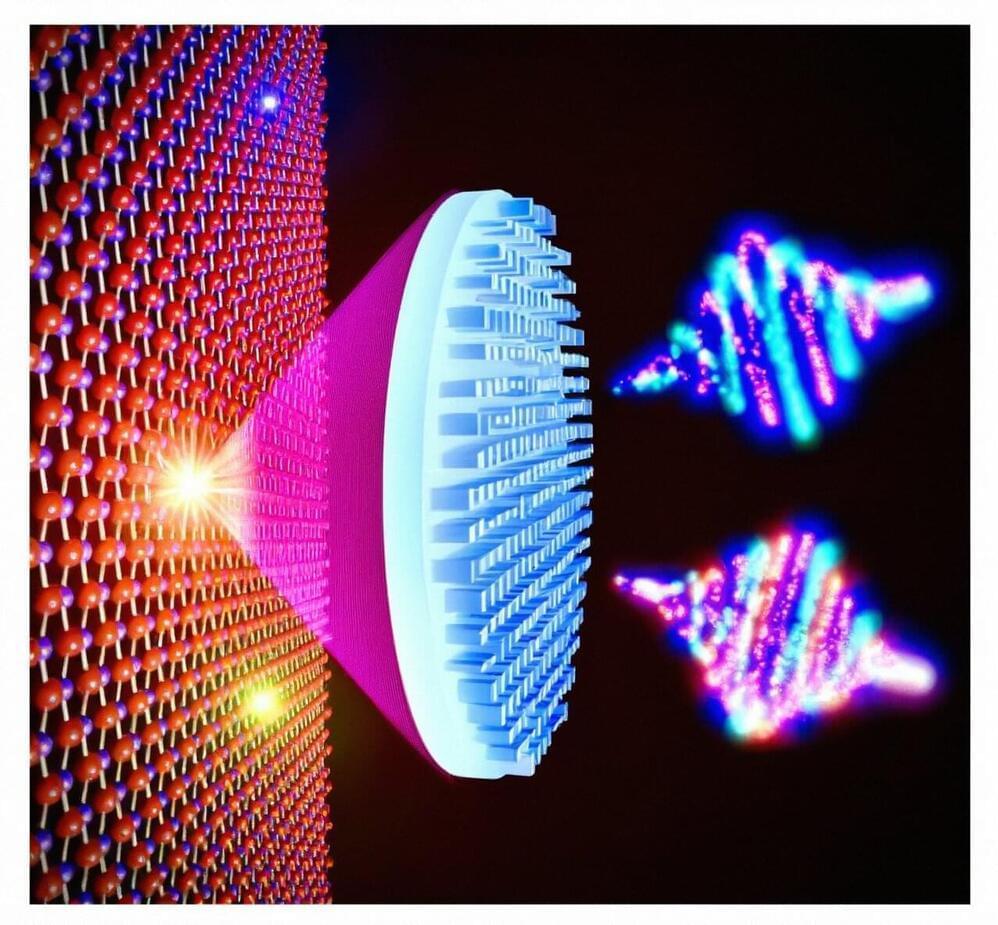

Quantum emission is pivotal to realizing photonic quantum technologies. Solid-state single photon emitters (SPEs), such as hexagonal boron nitride (hBN) defects, operate at room temperature. They are highly desirable due to their robustness and brightness.

The conventional way to collect photons from SPEs relies on a high numerical aperture (NA) objective lens or micro-structured antennas. While photon collection efficiency can be high, these tools cannot manipulate quantum emissions. Multiple bulky optical elements, such as polarizers and phase plates, are required to achieve any desired structuring of the emitted quantum light source.

In a new paper published in eLight, an international team of scientists led by Drs Chi Li and Haoran Ren from Monash University has developed a new multifunctional metalens for structuring quantum emissions from SPEs.

Weird things happen on the quantum level. Whole clouds of particles can become entangled, their individuality lost as they act as one.

Now scientists have observed, for the first time, ultracold atoms cooled to a quantum state chemically reacting as a collective, rather than haphazardly forming new molecules after bumping into each other by chance.

“What we saw lined up with the theoretical predictions,” says Cheng Chin, a physicist at the University of Chicago and senior author of the study. “This has been a scientific goal for 20 years, so it’s a very exciting era.”

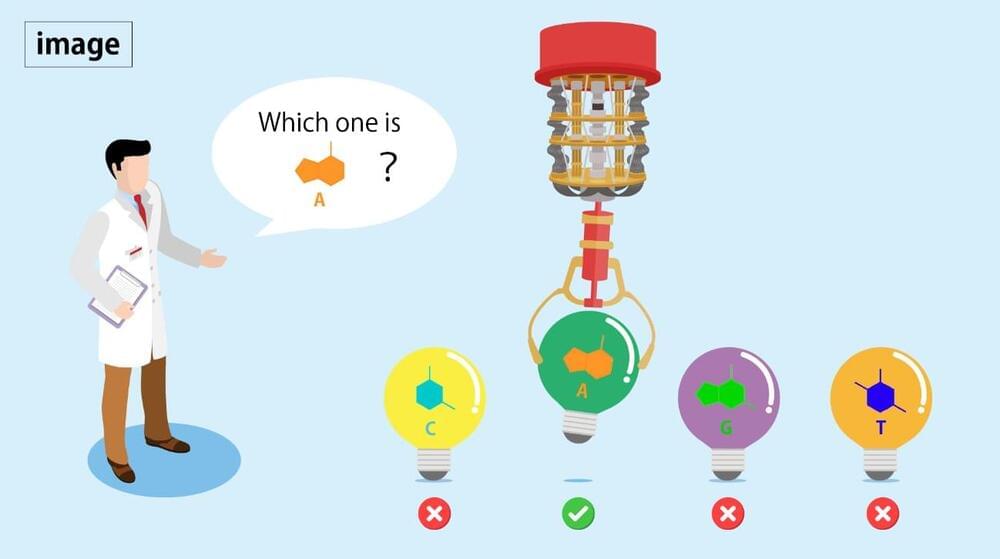

DNA sequencing, i.e., determining the order of nucleotide bases in a DNA molecule, is central to personalized medicine and disease diagnostics, yet even the fastest technologies require hours, or days, to read a complete sequence. Now, a multi-institutional research team led by The Institute of Scientific and Industrial Research (SANKEN) at Osaka University has developed a technique that could lead to a new paradigm for genomic analysis.

DNA sequences are sequential arrangements of the nucleotide bases, i.e., the four letters that encode information invaluable to the proper functioning of an organism. For example, changing the identity of just one nucleotide out of the several billion nucleotide pairs in the human genome can lead to a serious medical condition. The ability to read DNA sequences quickly and reliably is thus essential to some urgent point-of-care decisions, such as how to proceed with a particular chemotherapy treatment.

Unfortunately, genome analysis remains challenging for classical computers, and it’s in this context that quantum computers show promise. Quantum computers use quantum bits (qubits), which can exist in superpositions of zero and one, instead of the definite zeroes and ones of classical computers, and this could enable exponential speedups for certain problems.
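To make the contrast with classical bits concrete, here is a minimal Python sketch of a single quantum bit. It is an illustration only, not a model of any hardware or of the Osaka technique: the function names `measurement_probabilities` and `measure` are hypothetical, and the qubit is assumed to be described by two complex amplitudes whose squared magnitudes give the measurement probabilities (the Born rule).

```python
import math
import random


def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for a qubit with amplitudes alpha and beta.

    Hypothetical helper: applies the Born rule, normalizing first so the
    two probabilities always sum to 1.
    """
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("state vector must be non-zero")
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def measure(alpha, beta, shots, seed=0):
    """Simulate repeated measurements; return (count of 0s, count of 1s)."""
    rng = random.Random(seed)  # seeded so repeated runs give the same counts
    p0, _ = measurement_probabilities(alpha, beta)
    zeros = sum(1 for _ in range(shots) if rng.random() < p0)
    return zeros, shots - zeros


# An equal superposition of 0 and 1: each outcome has probability 1/2.
amp = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(amp, amp)
counts = measure(amp, amp, shots=1000)
print(p0, p1, counts)
```

A classical bit corresponds to the special cases `(1, 0)` or `(0, 1)`, where one outcome is certain. Any other pair of amplitudes gives a genuinely random result on each measurement.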