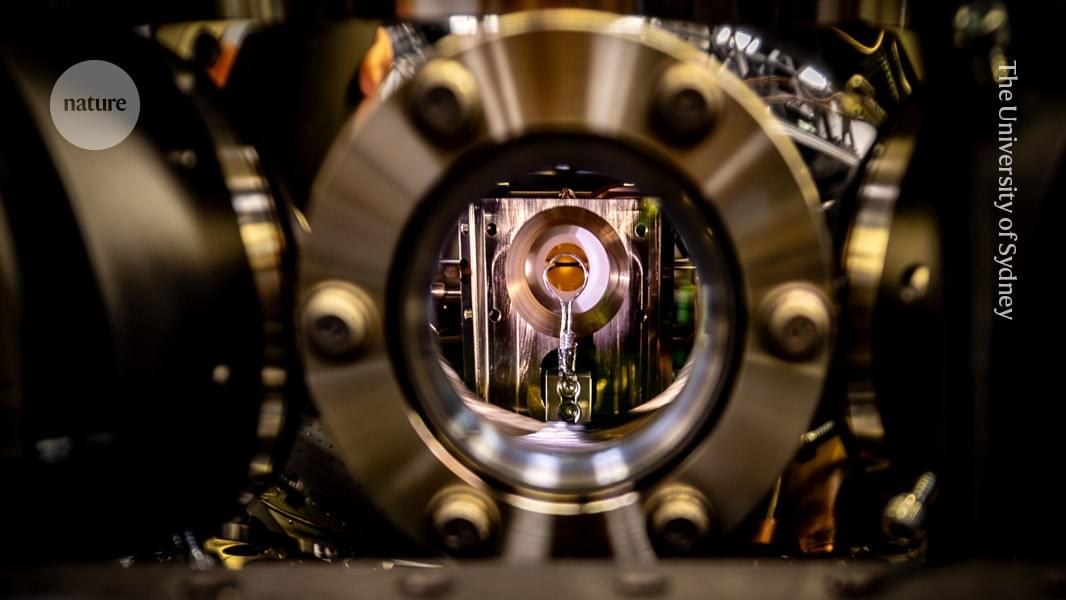

A trapped ytterbium ion can be used to model complex changes in the energy levels of organic molecules interacting with light.

In 2025, China tech is no longer just catching up—it’s rewriting the rules. From quantum computers that outperform U.S. supercomputers to humanoid robots priced for mass adoption, China tech is accelerating at a pace few imagined. In this video, Top 10 Discoveries Official explores the 8 cutting-edge breakthroughs that prove China tech is reshaping transportation, AI, clean energy, and even brain-computer interfaces. While the West debates and regulates, China tech builds—from driverless taxis and flying cars to homegrown AI chips and thorium reactors. Watch now to understand why the future might not be written in Silicon Valley, but in Shenzhen.


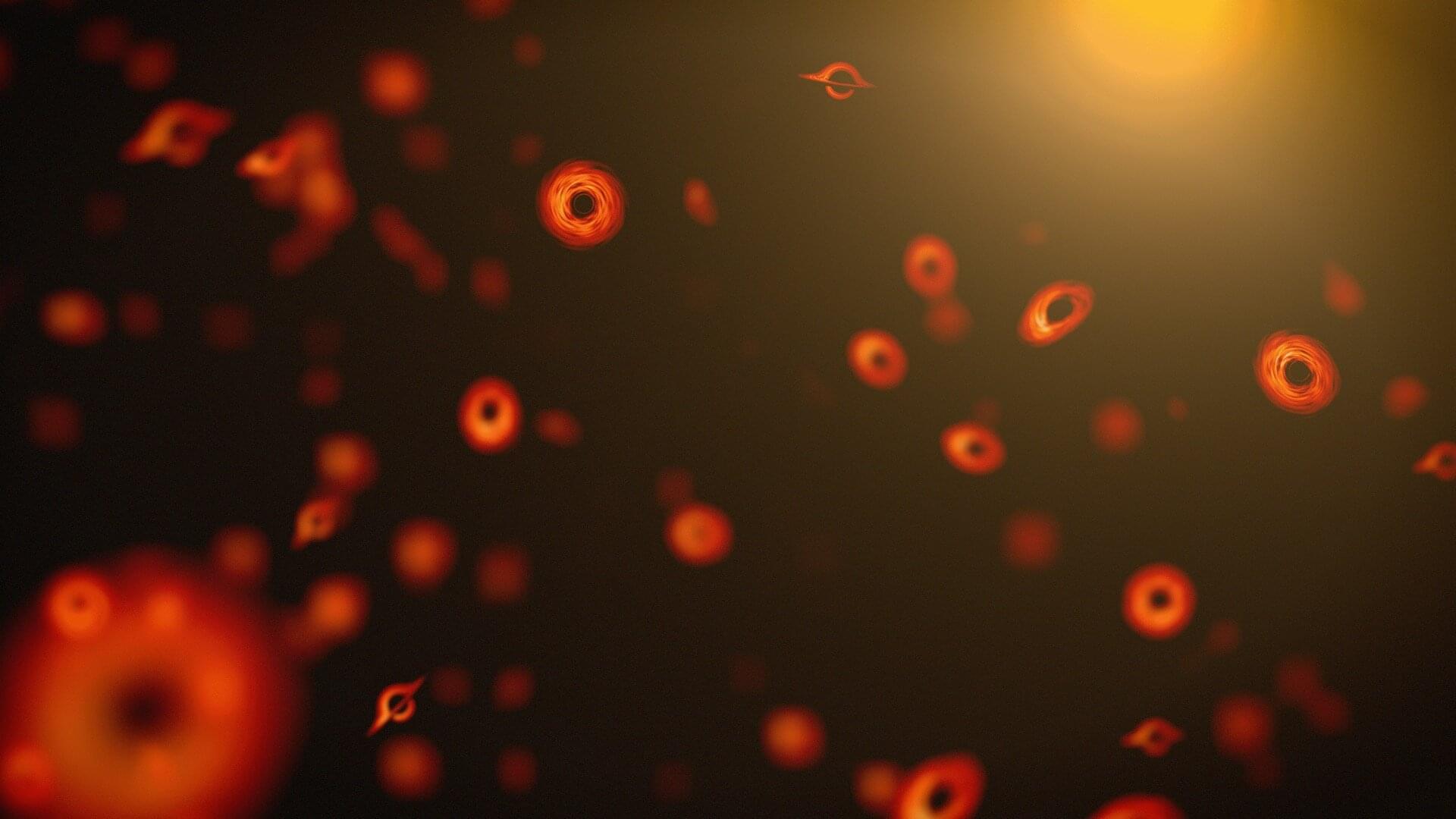

What if black holes weren’t the only things slowly vanishing from existence? Scientists have now shown that all dense cosmic bodies—from neutron stars to white dwarfs—might eventually evaporate via Hawking-like radiation.

Even more shocking, the end of the universe could come far sooner than expected: “only” 10^78 years from now, not the impossibly long 10^1100 years once predicted. In an ambitious blend of astrophysics, quantum theory, and math, this playful yet serious study also computes the eventual fates of the Moon and even a human.

Black Holes Aren’t Alone

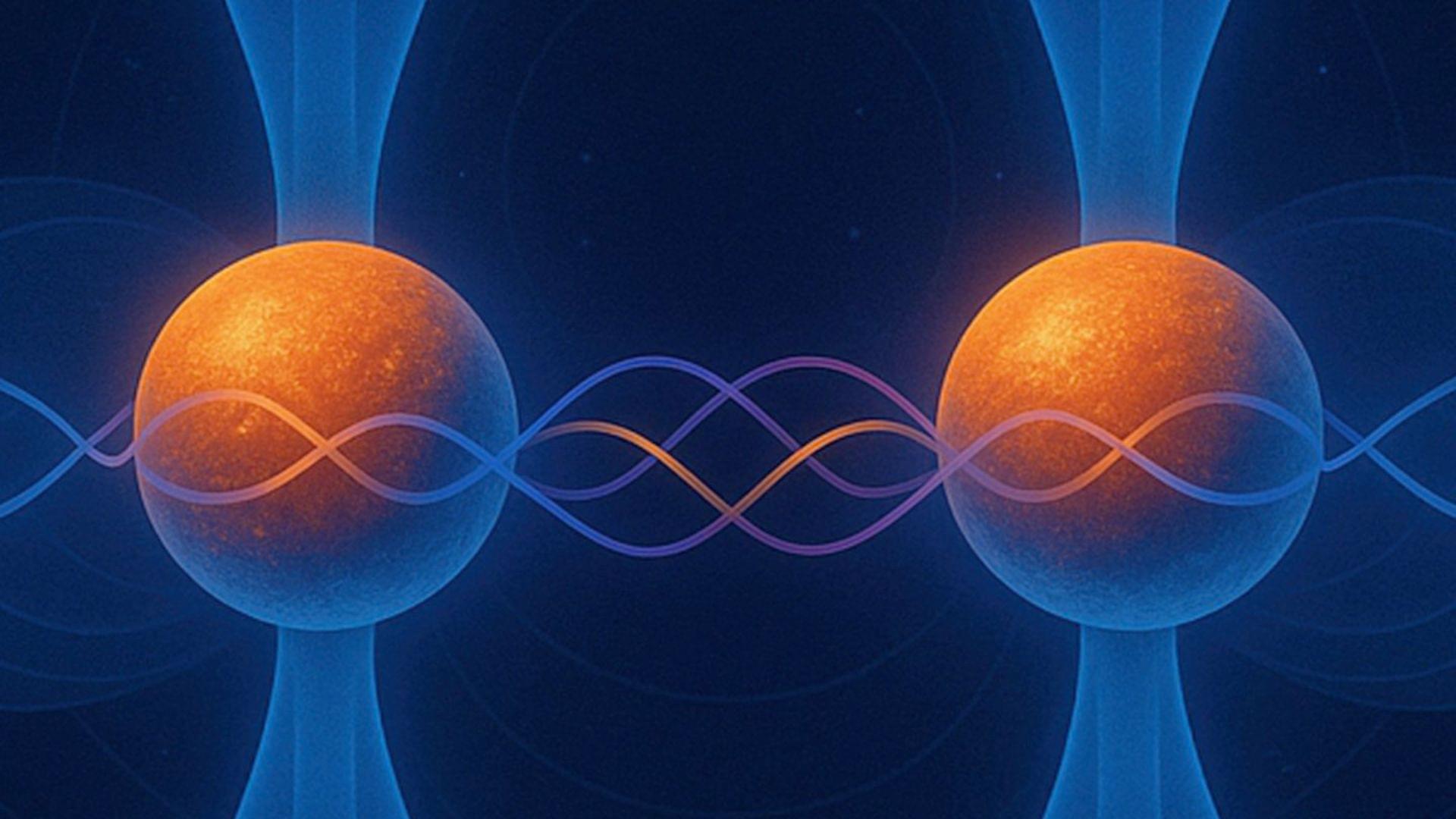

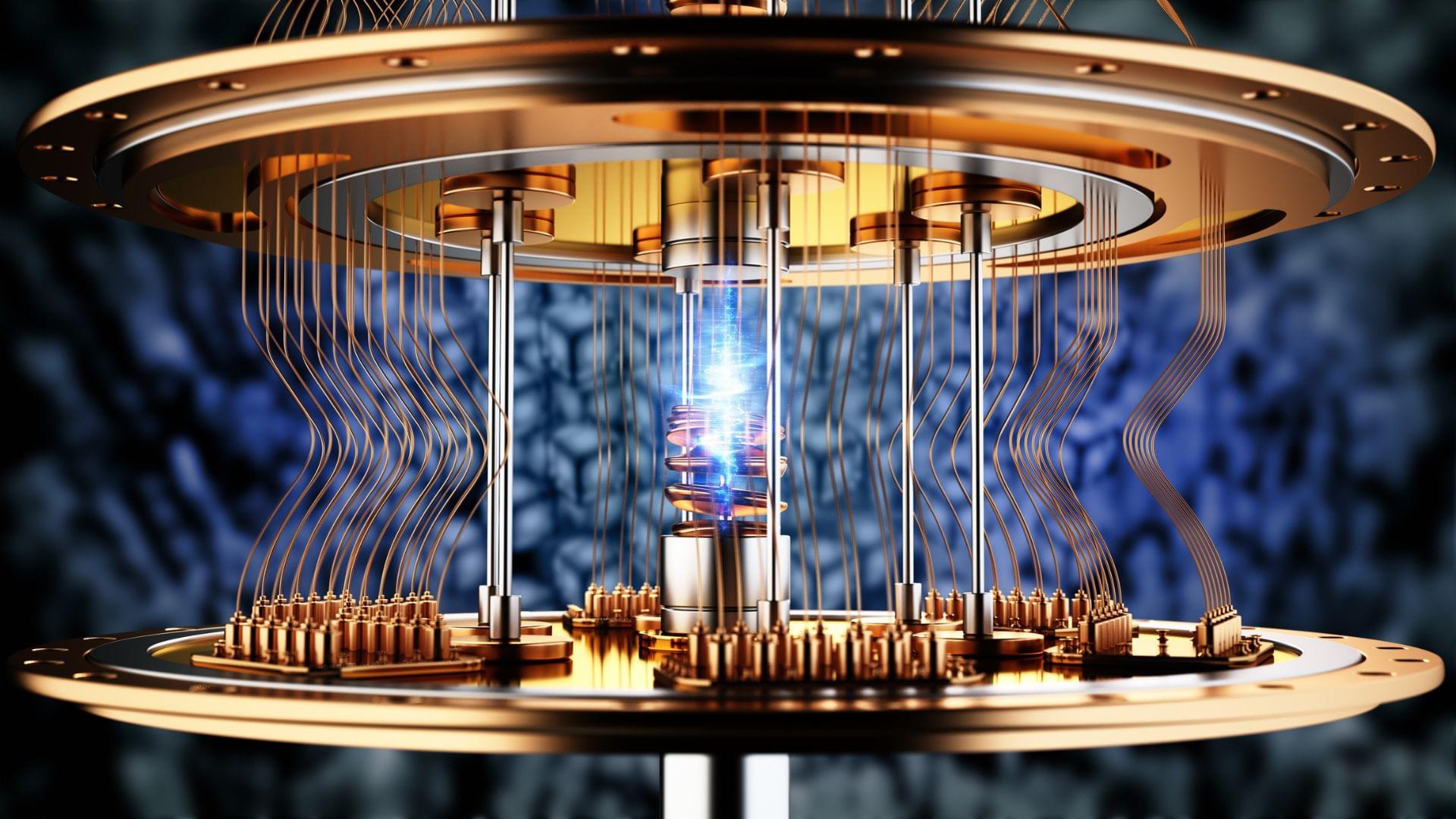

Researchers at IBM and Lockheed Martin combined high-performance computing with quantum computing to accurately model the electronic structure of an ‘open-shell’ molecule, methylene, a task that has long been a hurdle for classical computing. This is the first application of the sample-based quantum diagonalization (SQD) technique to open-shell systems, a press release said.

Quantum computing, which promises computations at speeds unattainable by even the fastest supercomputers of today, is the next frontier of computing. By using quantum states as quantum bits (qubits), these computers could exceed the computational capabilities that humanity has had access to in the past and open up new research areas.

Besides particles like sterile neutrinos, axions and weakly interacting massive particles (WIMPs), another leading candidate for the cold dark matter of the universe is the primordial black hole: a black hole created from an extremely dense conglomeration of subatomic particles in the first seconds after the Big Bang.

Primordial black holes (PBHs) are classically stable, but as shown by Stephen Hawking in 1975, they can evaporate via quantum effects, radiating nearly like a blackbody. Thus, they have a lifetime; it’s proportional to the cube of their initial mass. As it’s been 13.8 billion years since the Big Bang, only PBHs with an initial mass of a trillion kilograms or more should have survived to today.
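The cubic scaling can be checked with a short calculation. The sketch below is an idealized estimate, not the full calculation behind the figure quoted above: it assumes a non-rotating, uncharged black hole that radiates only photons, for which the standard textbook result is t = 5120 π G² M³ / (ħ c⁴). The function names are illustrative.

```python
import math

# Physical constants in SI units (CODATA values)
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
SECONDS_PER_YEAR = 3.15576e7  # Julian year

# Prefactor of the photon-only Hawking lifetime, in s / kg^3.
# Assumption: Schwarzschild black hole, photons only, no accretion.
_K = 5120 * math.pi * G**2 / (HBAR * C**4)


def evaporation_time_s(mass_kg: float) -> float:
    """Idealized evaporation time in seconds; scales as mass cubed."""
    return _K * mass_kg**3


def mass_for_lifetime_kg(lifetime_s: float) -> float:
    """Initial mass (kg) whose idealized lifetime equals lifetime_s."""
    return (lifetime_s / _K) ** (1.0 / 3.0)


if __name__ == "__main__":
    age_of_universe_s = 13.8e9 * SECONDS_PER_YEAR
    m_min = mass_for_lifetime_kg(age_of_universe_s)
    print(f"Mass evaporating in 13.8 Gyr (photon-only): {m_min:.2e} kg")
    t_year = evaporation_time_s(1e12) / SECONDS_PER_YEAR
    print(f"Lifetime of a 1e12 kg black hole: {t_year:.2e} years")
```

In this simplified model the threshold mass comes out at a few times 10^11 kg, the same order of magnitude as the trillion kilograms quoted above. A fuller treatment also counts the other particle species a small black hole emits, which shortens the lifetime and so raises the minimum surviving mass.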

However, it has been suggested that the lifetime of a black hole might be considerably longer than Hawking’s prediction due to the memory burden effect, where the load of information carried by a black hole stabilizes it against evaporation.

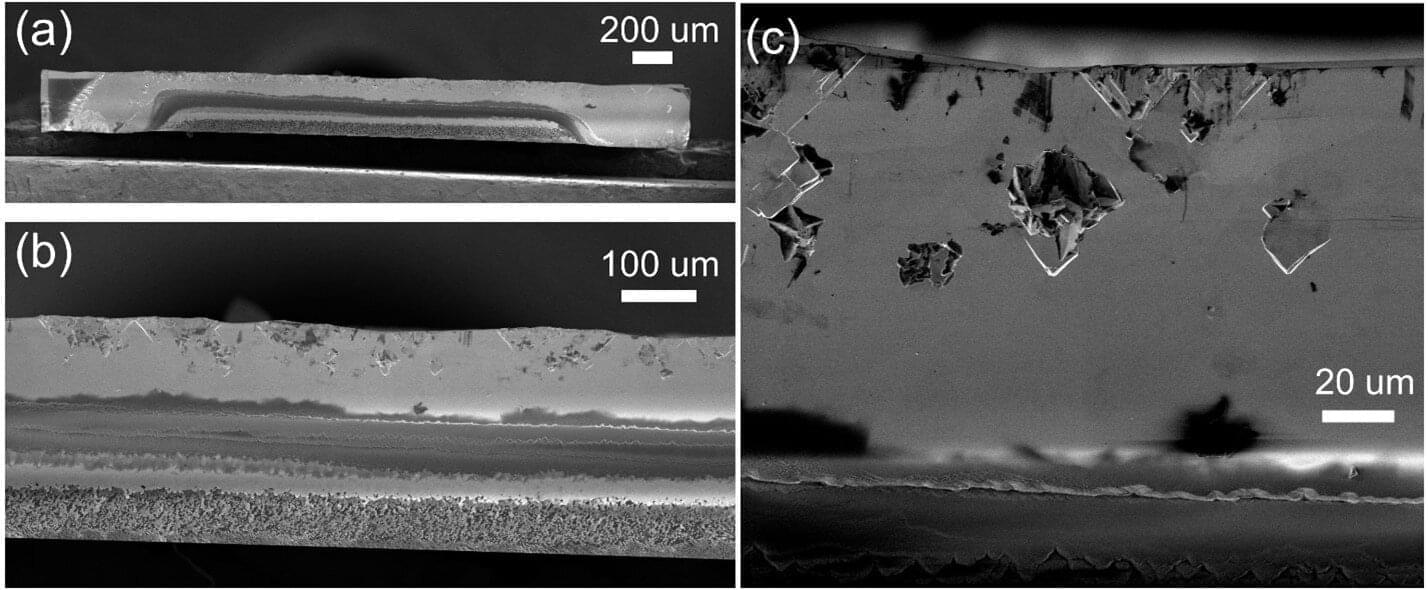

Diamond is one of the most prized materials in advanced technologies due to its unmatched hardness, ability to conduct heat and capacity to host quantum-friendly defects. The same qualities that make diamond useful also make it difficult to process.

Engineers and researchers who work with diamond for quantum sensors, power electronics or thermal management technologies need it in ultrathin, ultrasmooth layers. But traditional techniques, like laser cutting and polishing, often damage the material or create surface defects.

Ion implantation and lift-off is a way to separate a thin layer of diamond from a larger crystal by bombarding a diamond substrate with high-energy carbon ions, which penetrate to a specific depth below the surface. The process creates a buried layer in the diamond substrate where the crystalline lattice has been disrupted. That damaged layer effectively acts like a seam: Through high-temperature annealing, it turns into smooth graphite, allowing for the diamond layer above it to be lifted off in one uniform, ultrathin wafer.

We’re announcing the world’s first scalable, error-corrected, end-to-end computational chemistry workflow. With this, we are entering the future of computational chemistry.

Quantum computers are uniquely equipped to perform the complex computations that describe chemical reactions – computations that are so complex they are impossible even with the world’s most powerful supercomputers.

However, realizing this potential is a herculean task: one must first build a large-scale, universal, fully fault-tolerant quantum computer – something nobody in our industry has done yet. We are the farthest along that path, as our roadmap and our robust body of research show. At the moment, we have the world’s most powerful quantum processors, and are moving quickly towards universal fault tolerance. Our commitment to building the best quantum computers is proven again and again in our world-leading results.