Using the Five-hundred-meter Aperture Spherical Radio Telescope (FAST), astronomers from the Chinese Academy of Sciences (CAS) and elsewhere have observed a nearby pulsar known as PSR J2129+4119. Results of the observational campaign, published October 30 on the arXiv pre-print server, deliver important insights into the behavior and properties of this pulsar.

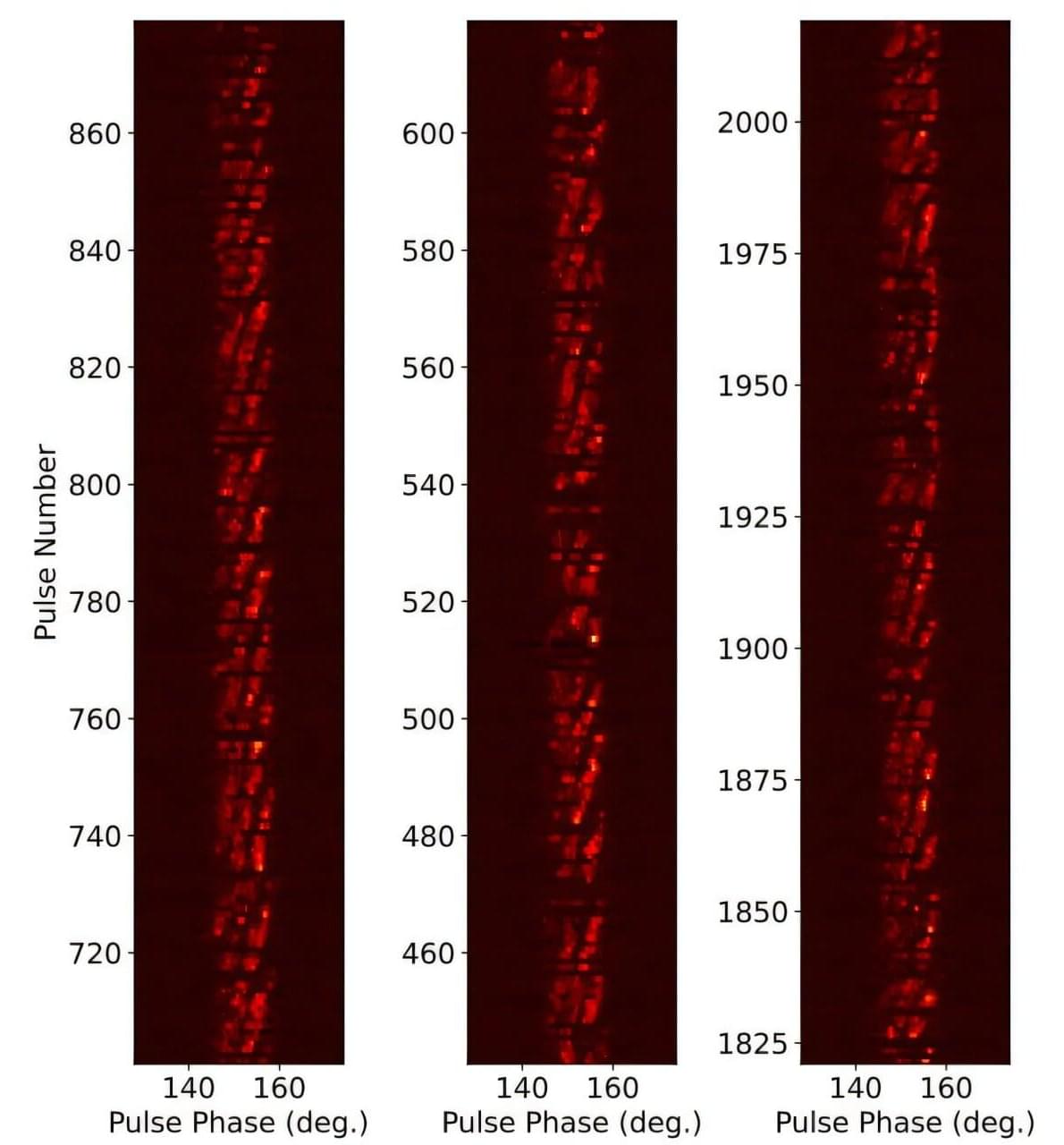

Radio emission from pulsars exhibits a variety of phenomena, including subpulse drifting, nulling, and mode changing. In the case of subpulse drifting, radio emission from a pulsar appears to drift in spin phase within the main pulse profile. In nulling, the emission from a pulsar ceases abruptly for a few to hundreds of pulse periods before it is restored.

Discovered in 2017, PSR J2129+4119 is an old and nearby pulsar located some 7,500 light years away. It has a pulse period of 1.69 seconds, a dispersion measure of 31 pc/cm³, and a characteristic age of 342.8 million years. The pulsar lies below the so-called “death line,” a theoretical boundary in the period-period derivative diagram below which coherent radio emission is expected to cease.
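
To give a feel for where such a pulsar sits in the period-period derivative diagram, the quoted period and characteristic age can be turned into an implied spin-down rate. The sketch below assumes the standard characteristic-age relation, τ = P / (2Ṗ), which the article does not state explicitly. The function name and the resulting Ṗ are illustrative and are not values taken from the paper.

```python
# Minimal sketch: invert the standard characteristic-age relation
# tau = P / (2 * Pdot) to estimate the period derivative.
# Assumption: this relation is the one behind the quoted age;
# the article itself does not report Pdot.

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year in seconds


def implied_period_derivative(period_s, char_age_yr):
    """Return Pdot (dimensionless, s/s) implied by tau = P / (2 * Pdot)."""
    tau_s = char_age_yr * SECONDS_PER_YEAR  # convert age to seconds
    return period_s / (2.0 * tau_s)


# Values quoted in the article for PSR J2129+4119
pdot = implied_period_derivative(period_s=1.69, char_age_yr=342.8e6)
print(f"Implied period derivative: {pdot:.2e} s/s")
```

Under that assumption the implied period derivative is of order 10⁻¹⁷ s/s. A slow spin combined with such a small spin-down rate is typical of the region of the diagram near or below the death line.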