Two decades ago, an experiment at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory revealed a puzzling mismatch between established particle physics theory and actual laboratory measurements. When researchers measured the behavior of a subatomic particle called the muon, the results did not agree with theoretical calculations. This posed a potential challenge to the Standard Model, our current best description of the fundamental particles and forces that make up the universe.

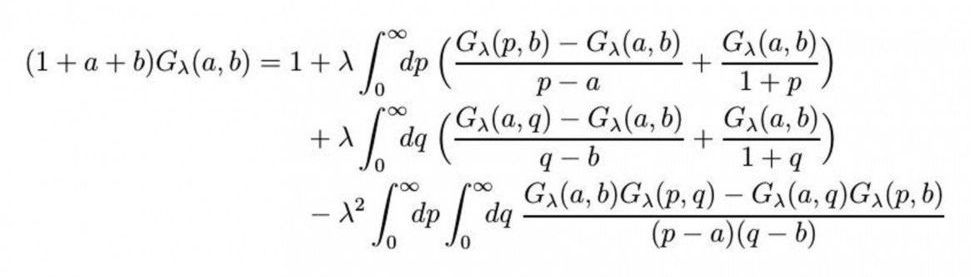

Ever since then, scientists around the world have been trying to verify this discrepancy and determine its significance. The answer could either uphold the Standard Model, which defines all of the known subatomic particles and how they interact, or point to entirely new, undiscovered physics. A multi-institutional research team, including Brookhaven, Columbia University, RIKEN, and the universities of Connecticut, Nagoya and Regensburg, has used Argonne National Laboratory’s Mira supercomputer to help narrow down the possible explanations for the discrepancy. The team delivered a more precise theoretical calculation that refines one piece of this very complex puzzle. The work, funded in part by the DOE’s Office of Science through its Office of High Energy Physics and Advanced Scientific Computing Research programs, has been published in the journal Physical Review Letters.

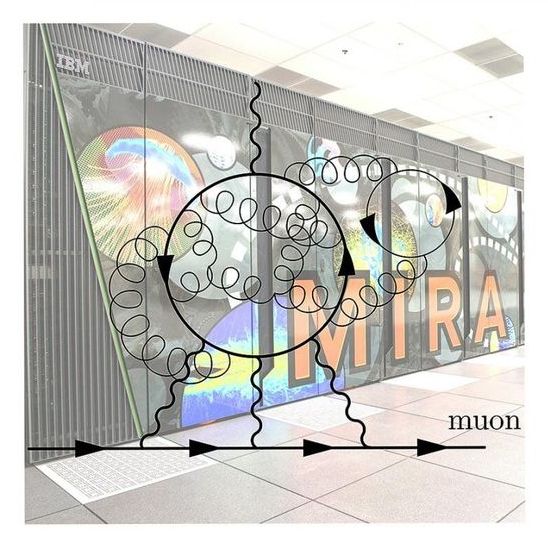

A muon is a heavier cousin of the electron and carries the same electric charge. The measurement in question is of the muon’s magnetic moment, which determines how the particle precesses, or wobbles, in an external magnetic field. The earlier Brookhaven experiment, known as Muon g-2, studied muons as they circulated in an electromagnet storage ring 50 feet in diameter. The experimental result differed from the theoretical prediction by an extremely small amount, measured in parts per million. By the exacting standards of Standard Model tests, however, a difference of that size is large enough to be significant.