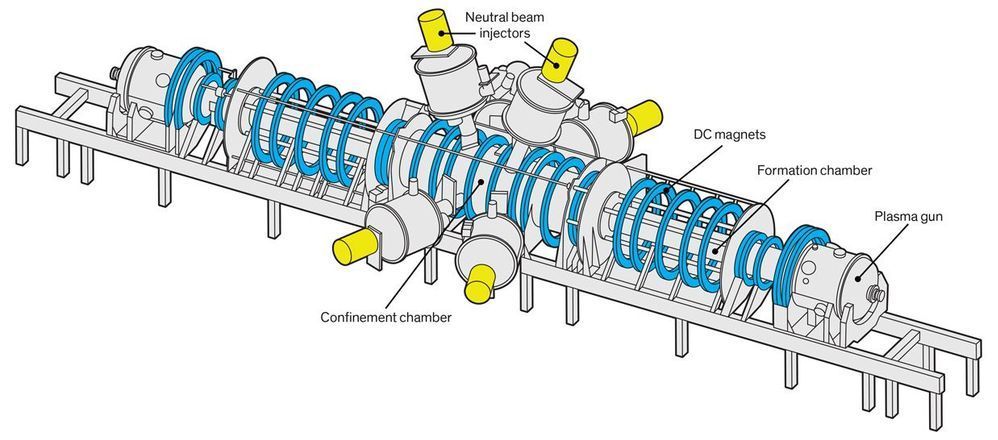

Russian scientists have proposed a concept for a thorium hybrid reactor that obtains additional neutrons from high-temperature plasma confined in a long magnetic trap. The project was carried out in close collaboration between Tomsk Polytechnic University, the All-Russian Scientific Research Institute of Technical Physics (VNIITF), and the Budker Institute of Nuclear Physics of SB RAS. The proposed thorium hybrid reactor differs from today’s nuclear reactors in its moderate power, relatively compact size, high operational safety, and low level of radioactive waste.

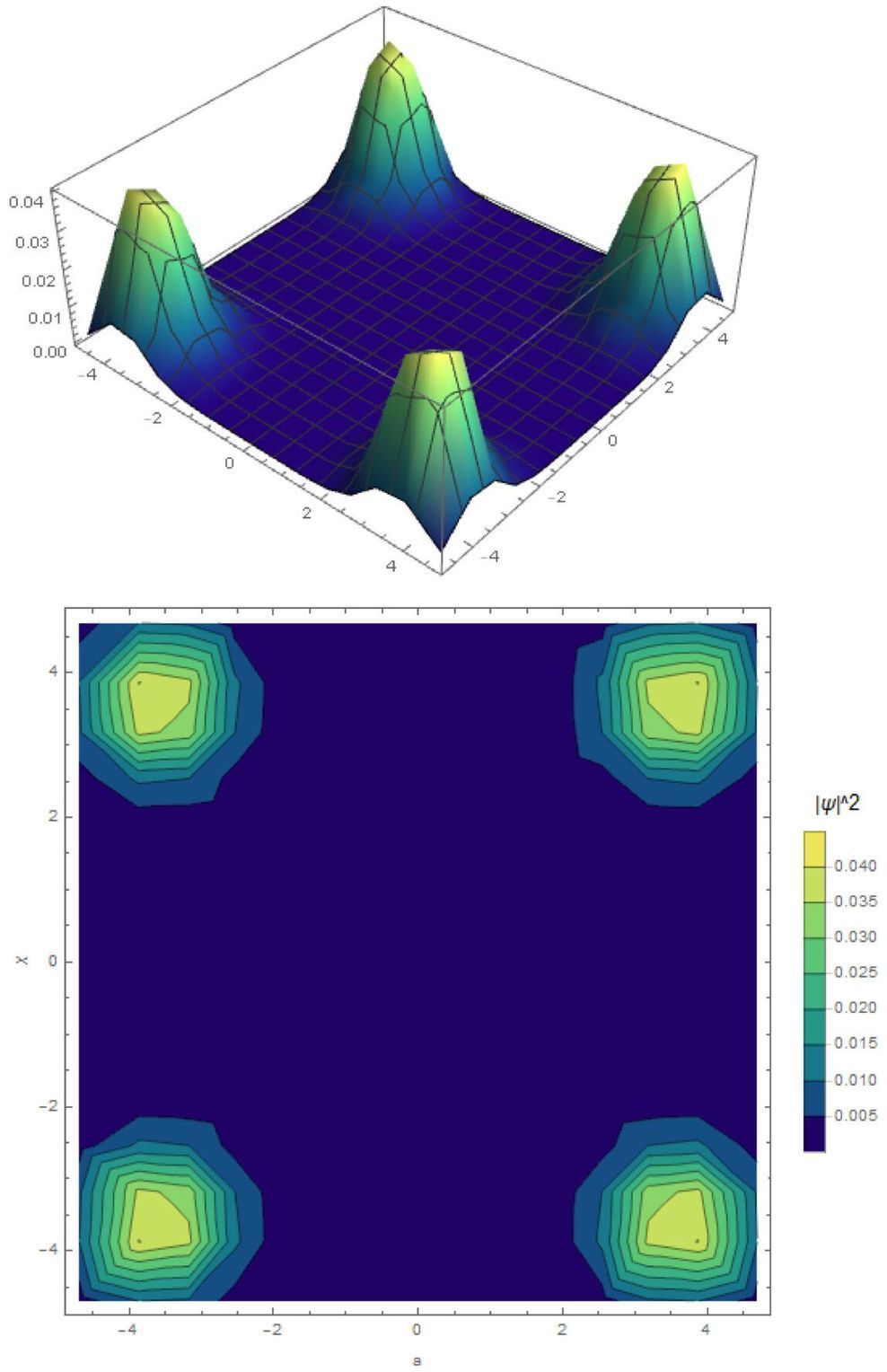

“At the initial stage, we produce relatively cold plasma using special plasma guns. We maintain the amount of plasma by injecting deuterium gas. Neutral beams with a particle energy of 100 keV, injected into this plasma, generate high-energy deuterium and tritium ions and maintain the required temperature. When deuterium and tritium ions collide, they fuse into a helium nucleus and release a high-energy neutron. These neutrons pass freely through the walls of the vacuum chamber, where the plasma is held by a magnetic field, and enter the region containing the nuclear fuel. After slowing down, they sustain the fission of heavy nuclei, which is the main source of energy released in the hybrid reactor,” says Professor Andrei Arzhannikov, chief researcher at the Budker Institute of Nuclear Physics of SB RAS.
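For context, the deuterium-tritium reaction described in the quote can be written out, along with a rough estimate of why the neutron carries most of the released energy. This is a back-of-the-envelope sketch, not the researchers' own calculation. It assumes that the kinetic energy of the colliding ions (of order 100 keV) is small compared with the reaction's energy release, and it uses approximate mass numbers ($m_\alpha \approx 4$, $m_n \approx 1$). Under those assumptions the two products fly apart with nearly equal and opposite momenta, so the lighter neutron takes about four fifths of the energy:

```latex
% D-T fusion: total energy release Q of about 17.6 MeV
\mathrm{D} + \mathrm{T} \;\rightarrow\; {}^{4}\mathrm{He} + n, \qquad Q \approx 17.6\ \mathrm{MeV}

% Assumption: reactant kinetic energy << Q, so p_alpha ~ p_n = p
E = \frac{p^{2}}{2m}
\;\Rightarrow\;
\frac{E_n}{E_\alpha} = \frac{m_\alpha}{m_n} \approx 4

E_n \approx Q\,\frac{m_\alpha}{m_\alpha + m_n} \approx 17.6 \times \tfrac{4}{5} \approx 14.1\ \mathrm{MeV},
\qquad
E_\alpha \approx 3.5\ \mathrm{MeV}
```

Because the neutron is electrically neutral, the magnetic field cannot hold it. It leaves the plasma with roughly 14 MeV, which is why it can reach the surrounding fuel region.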

The main advantage of a fusion-fission hybrid reactor is that it uses the fission of heavy nuclei and the fusion of light nuclei at the same time. Combining the two minimizes the drawbacks of using either reaction on its own.