Over the last decade, improvements in optical atomic clocks have repeatedly led to devices that have broken records for their precision (see Viewpoint: A Boost in Precision for Optical Atomic Clocks). To achieve even better performance, physicists must find a way to cool the atoms in these clocks to lower temperatures, which would allow them to use shallower atom traps and reduce measurement uncertainty. Tackling this challenge, Xiaogang Zhang and colleagues at the National Institute of Standards and Technology, Colorado, have cooled a gas of ytterbium atoms to a record low temperature of a few tens of nanokelvin [1]. As well as enabling the next generation of optical atomic clocks, the researchers say that their technique could be used to cool atoms in neutral-atom quantum computers.

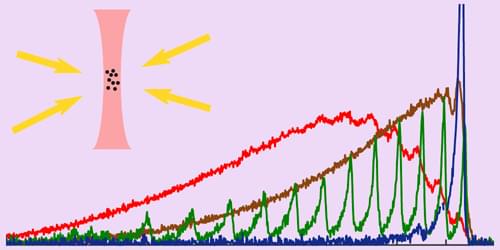

Divalent atoms such as ytterbium are especially suited to precision metrology, as their lack of net electronic spin makes them less sensitive than other species to environmental noise. These atoms can be cooled to the necessary sub-µK temperatures in several ways, but not all techniques are compatible with the requirements of high-precision clocks. For example, evaporative cooling, in which the most energetic atoms are removed, is time-consuming and depletes the atoms. Meanwhile, resolved sideband cooling chills the motion of the atoms only along the axis of the 1D optical trap, leaving their off-axis motion unaffected.

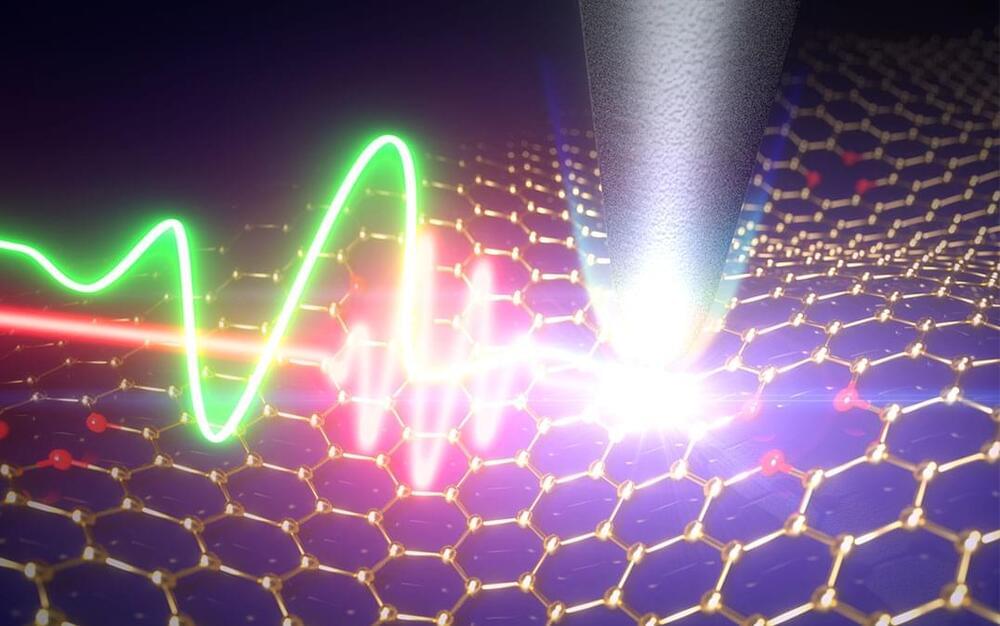

Zhang and colleagues cool their atoms using a laser tuned to ytterbium’s so-called clock transition, whose extremely narrow linewidth means that the atom can theoretically be cooled to below 10 nK. They demonstrate that the precision of a clock employing a shallow lattice trap enabled by such a temperature would not be limited by atoms tunneling between adjacent lattice sites, potentially allowing a measurement uncertainty below 10⁻¹⁹.