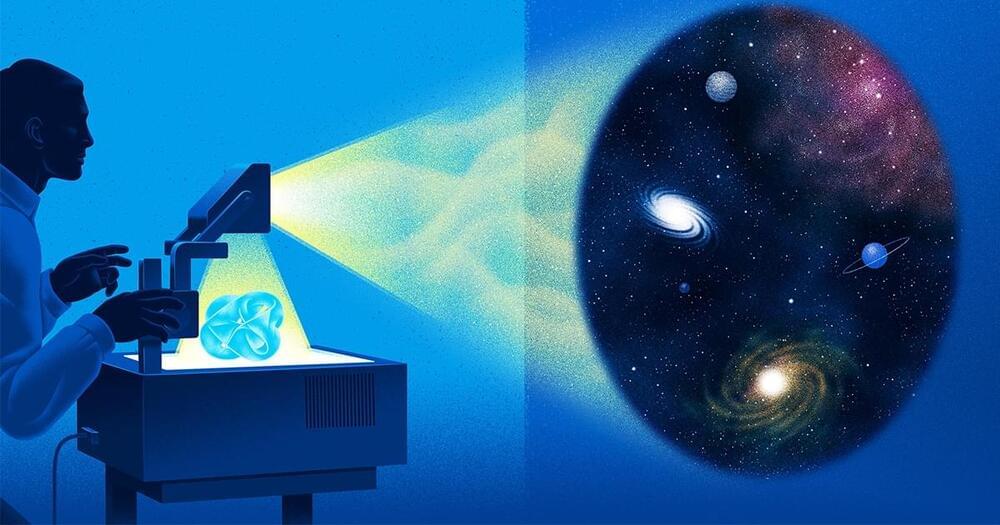

The late physicist Joseph Polchinski once said the existence of magnetic monopoles is “one of the safest bets that one can make about physics not yet seen.” These particles, which carry a magnetic charge, are predicted by several theories that extend the Standard Model. In its quest for them, the MoEDAL collaboration at the Large Hadron Collider (LHC) has not yet proven Polchinski right, but its latest findings mark a significant stride forward.

The results, reported in two papers posted on the arXiv preprint server, considerably narrow the search window for these hypothetical particles.

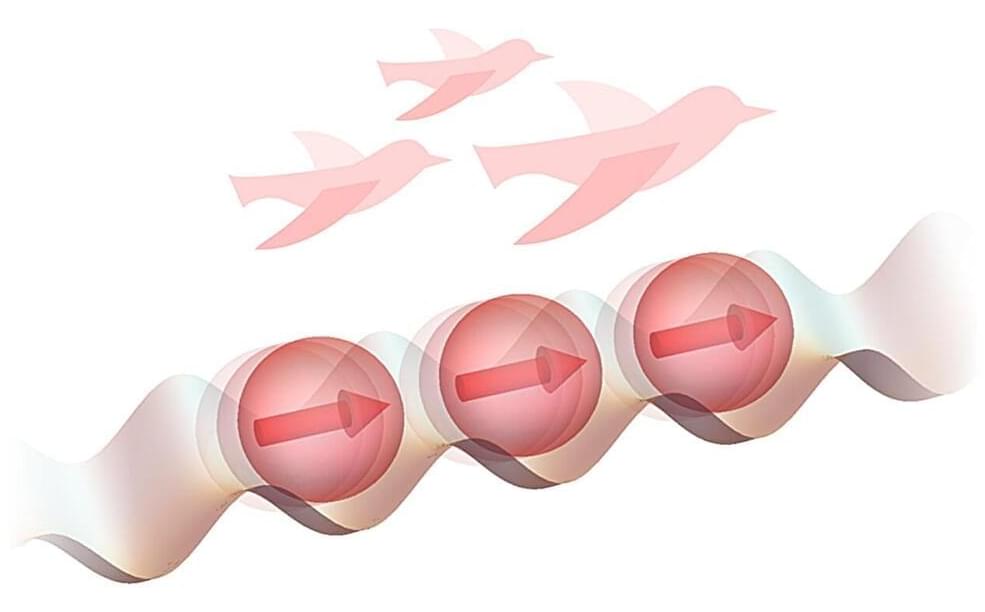

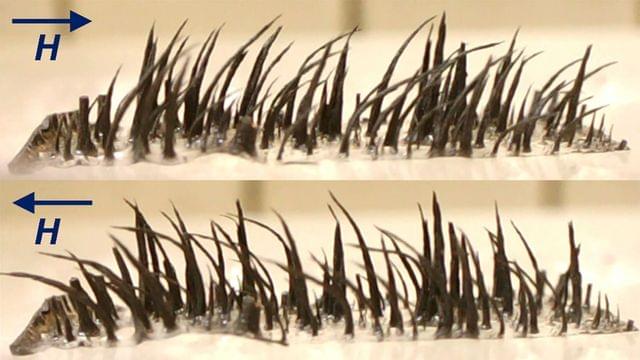

At the LHC, pairs of magnetic monopoles could be produced in interactions between protons or heavy ions. In collisions between protons, they could be formed from a single virtual photon (the Drell–Yan mechanism) or the fusion of two virtual photons (the photon-fusion mechanism). Pairs of magnetic monopoles could also be produced from the vacuum in the enormous magnetic fields created in near-miss heavy-ion collisions, through a process called the Schwinger mechanism.