Bioquark, Inc. (http://www.bioquark.com), a company focused on the development of novel biologics for complex regeneration and disease reversion, and Revita Life Sciences (http://revitalife.co.in), a biotechnology company focused on translational therapeutic applications of autologous stem cells, have announced that they have received IRB approval for a study of a novel combinatorial approach to clinical intervention in the state of brain death in humans.

This first trial within the portfolio of Bioquark’s Reanima Project (http://www.reanima.tech), entitled “Non-randomized, Open-labeled, Interventional, Single Group, Proof of Concept Study With Multi-modality Approach in Cases of Brain Death Due to Traumatic Brain Injury Having Diffuse Axonal Injury” (https://clinicaltrials.gov/ct2/show/NCT02742857?term=bioquark&rank=1), will enroll an initial 20 subjects and will be conducted at Anupam Hospital in Rudrapur, Uttarakhand, India.

“We are very excited about the approval of our protocol,” said Ira S. Pastor, CEO, Bioquark Inc. “With the convergence of the disciplines of regenerative biology, cognitive neuroscience, and clinical resuscitation, we are poised to delve into an area of scientific understanding previously inaccessible with existing technologies.”

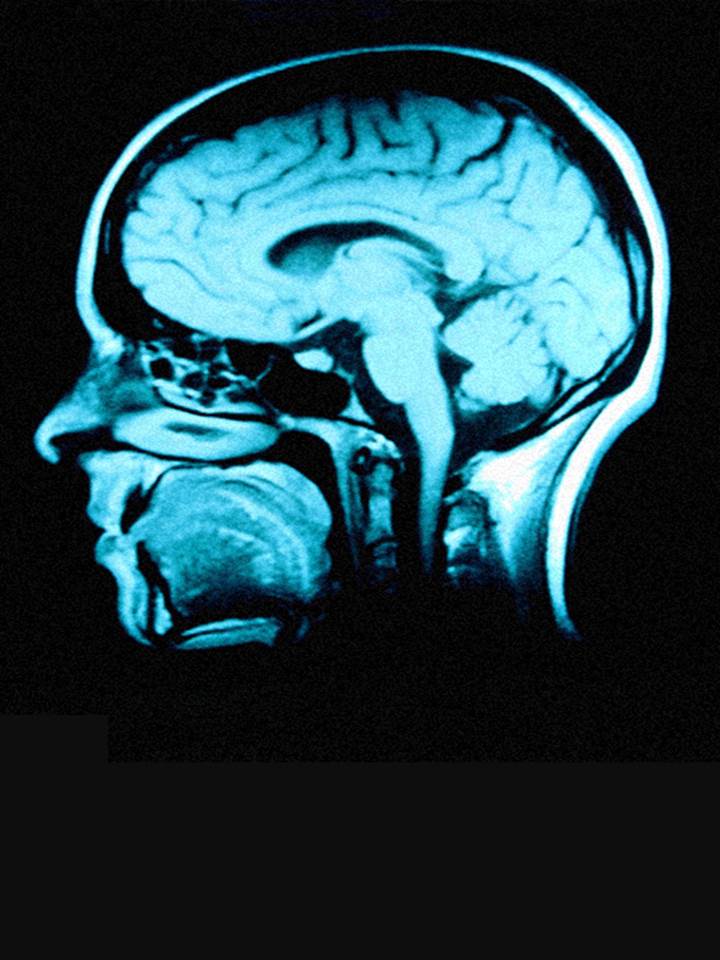

Death is defined as the termination of all biological functions that sustain a living organism. Brain death, the complete and irreversible loss of brain function (including the involuntary activity necessary to sustain life) as defined in the 1968 report of the Ad Hoc Committee of the Harvard Medical School, is the legal definition of human death in most countries around the world. Whether directly through trauma or indirectly through secondary disease indications, brain death is the final pathological state that over 60 million people globally pass through each year.

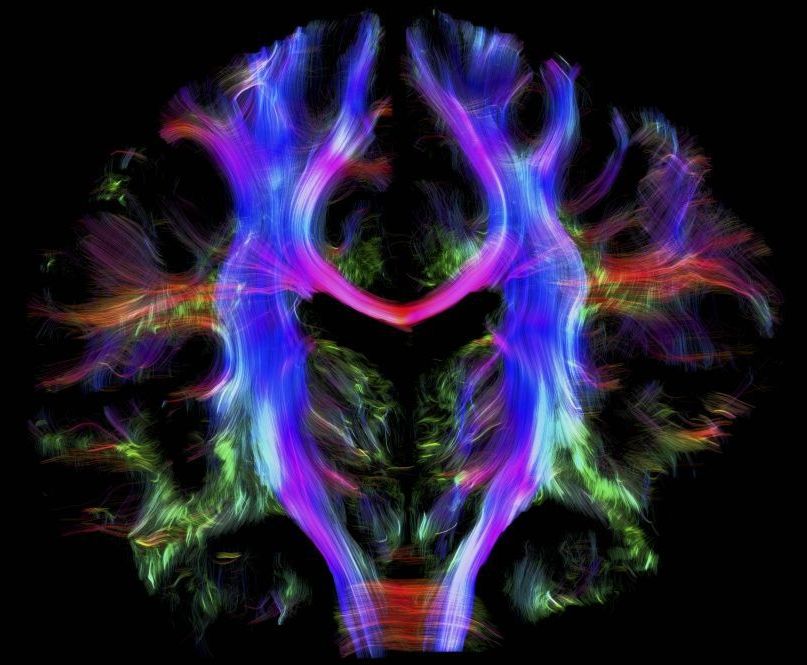

While human beings lack substantial regenerative capabilities in the CNS, many non-human species, such as amphibians, planarians, and certain fish, can repair, regenerate and remodel substantial portions of their brain and brain stem even after critical life-threatening trauma.

Additionally, recent studies on complex brain regeneration in these organisms have highlighted unique findings regarding the storage of memories following destruction of the entire brain, which may have wide-ranging implications for our understanding of consciousness and the persistence of memory.

“Through our study, we will gain unique insights into the state of human brain death, which will have important connections to future therapeutic development for other severe disorders of consciousness, such as coma, and the vegetative and minimally conscious states, as well as a range of degenerative CNS conditions, including Alzheimer’s and Parkinson’s disease,” said Dr. Sergei Paylian, Founder, President, and Chief Science Officer of Bioquark Inc.

Over the years, clinical science has focused heavily on preventing such life-and-death transitions and has made some initial progress with suspended animation technologies, such as therapeutic hypothermia. However, once humans pass through the brain death window, currently defined by the medical establishment as “irreversible”, they are technically no longer alive, even though their bodies can still circulate blood, digest food, excrete waste, balance hormones, grow, sexually mature, heal wounds, spike a fever, and gestate and deliver a baby. Some experts even acknowledge that recently brain-dead humans may still have residual blood flow and isolated nests of electrical activity in their brains, just not enough to allow integrated functioning of the organism as a whole.

“We look forward to working closely with Bioquark Inc. on this cutting edge clinical initiative,” said Dr. Himanshu Bansal, Managing Director of Revita Life Sciences.

About Bioquark, Inc.

Bioquark Inc. is focused on the development of natural biologic-based products, services, and technologies, with the goal of curing a wide range of diseases as well as effecting complex regeneration. Bioquark is developing both biological pharmaceutical candidates and products for the global consumer health and wellness market segments.

About Revita Life Sciences

Revita Life Sciences is a biotechnology company focused on the development of stem cell therapies that target areas of significant unmet medical need. Revita is led by Dr. Himanshu Bansal, MD, PhD, who has spent over two decades developing novel MRI-based classifications of spinal cord injuries as well as comprehensive treatment protocols using autologous tissues, including bone marrow stem cells, dural nerve grafts, nasal olfactory tissues, and omental transposition.