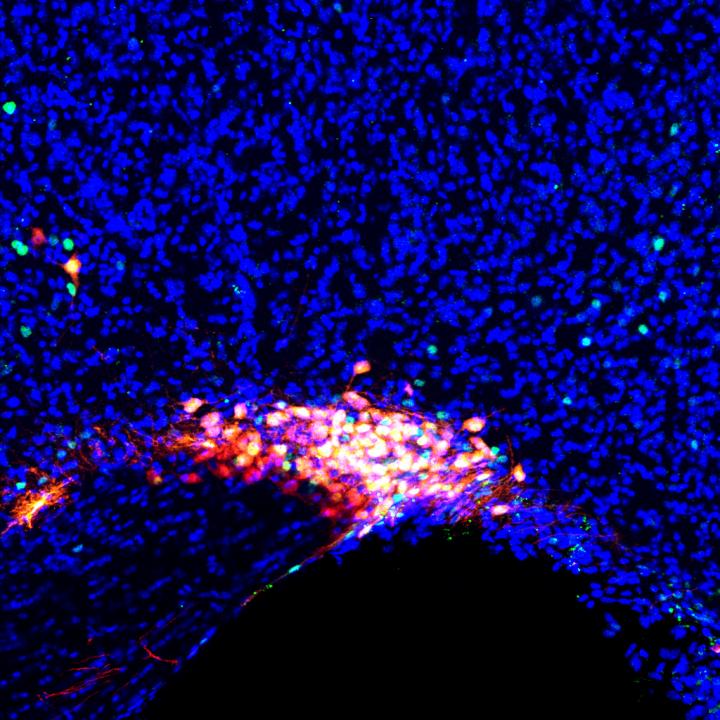

Worldwide, 50 million people are living with Alzheimer’s disease and other dementias. According to the Alzheimer’s Association, every 65 seconds someone in the United States develops this disease, which causes problems with memory, thinking and behavior.

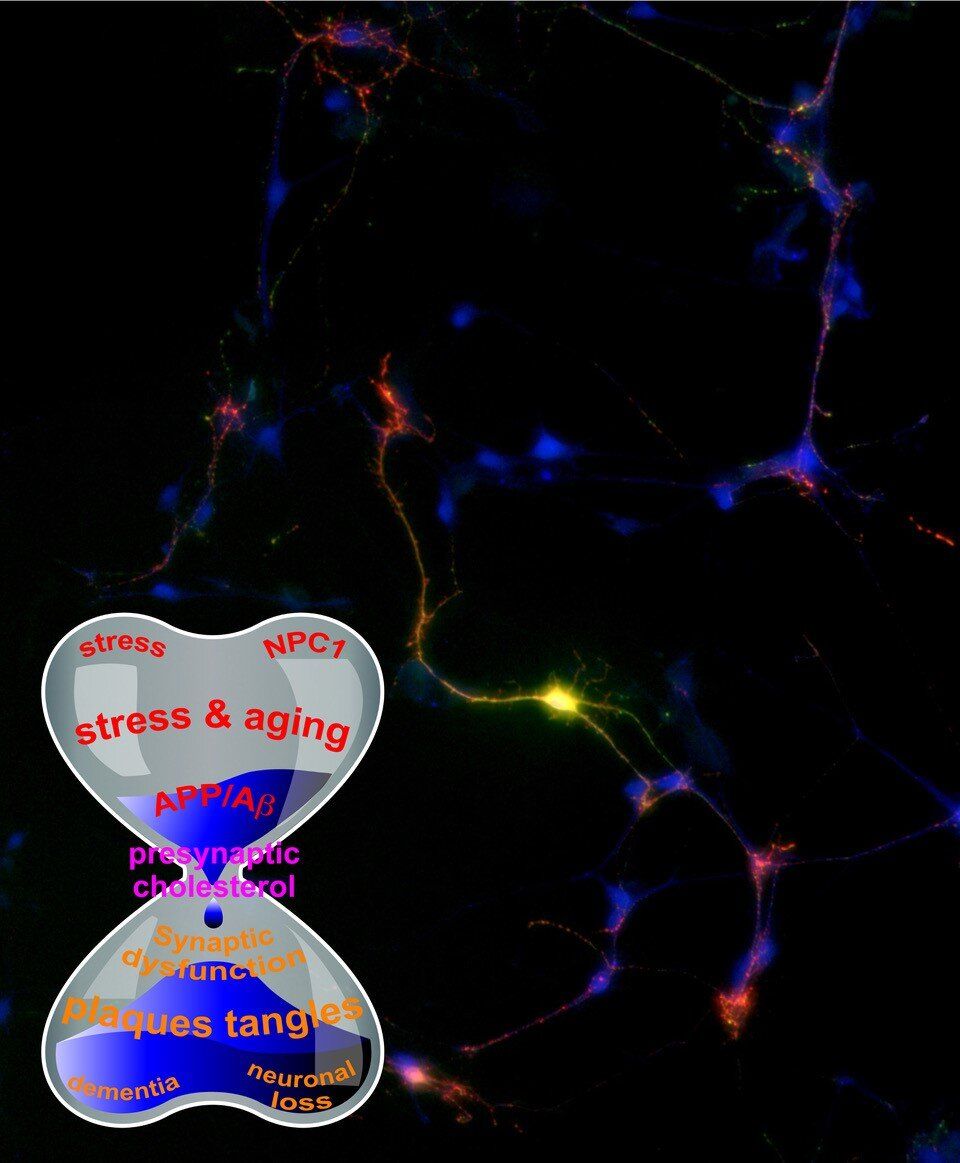

It has been more than 100 years since Alois Alzheimer, M.D., a German psychiatrist and neuropathologist, first reported the presence of senile plaques in the brain of a patient with Alzheimer’s disease. That finding led to the discovery of amyloid precursor protein, which produces deposits, or plaques, of amyloid fragments in the brain, the suspected culprit of Alzheimer’s disease. Since then, amyloid precursor protein has been extensively studied because of its association with Alzheimer’s disease. However, the distribution of amyloid precursor protein within and on neurons, and its function in these cells, remain unclear.

A team of neuroscientists led by Florida Atlantic University’s Brain Institute sought to answer a fundamental question in their quest to combat Alzheimer’s disease: “Is amyloid precursor protein the mastermind behind Alzheimer’s disease or is it just an accomplice?”