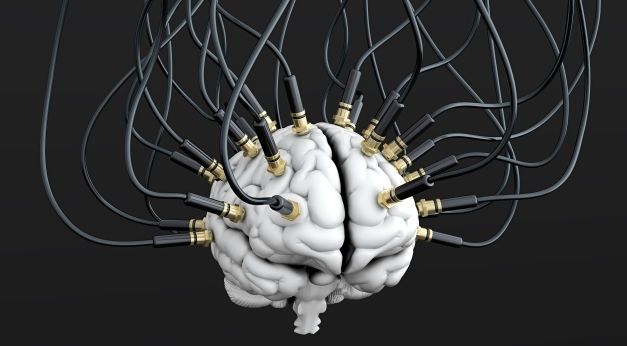

A neural interface under development by the United States military aims to greatly improve the resolution and speed of the connection between biological and non-biological matter.

The Defense Advanced Research Projects Agency (DARPA), the research agency of the U.S. Department of Defense, has announced a new research and development program known as Neural Engineering System Design (NESD). The program aims to create a fully implantable neural interface that provides unprecedented signal resolution and data-transfer bandwidth between the human brain and the digital world.