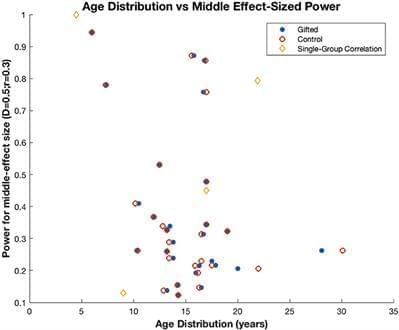

A large body of research has examined the cognitive and neural correlates of poor mathematical ability, often labeled developmental dyscalculia and/or mathematical learning disability. In contrast, much less research is available on the cognitive and neural correlates of gifted/excellent mathematical knowledge in adults and children. To facilitate further inquiry into this area, we review 40 available studies that examine the cognitive and neural basis of gifted mathematics. These studies associated a large number of cognitive factors with gifted mathematics, with spatial processing and working memory being the most frequently identified contributors. However, the current literature suffers from low statistical power, which most likely contributes to the variability across findings. Other major shortcomings include failing to establish the domain and stimulus specificity of findings, suggesting causation without sufficient evidence, and the frequent use of invalid backward inference in neuroimaging studies. Future studies must increase statistical power, and neuroimaging studies must rely on supporting behavioral data when interpreting findings. Studies should investigate the factors shown to correlate with math giftedness in a more specific manner and determine exactly how individual factors may contribute to gifted math ability.

A disproportionately large share of scientific advancement throughout history is owed to cognitively gifted individuals. However, we know surprisingly little about the cognitive structure supporting gifted mathematics. The current understanding is that human mathematical ability builds on an extensive network of cognitive skills and mathematics-specific knowledge, which are supported by motivational factors (Ansari, 2008; Beilock, 2008; Fias et al., 2013; Szűcs et al., 2014; Szűcs, 2016). To date, most psychological and neuroscience studies have examined potentially important factors only in children and adults with typical mathematical ability and in children with poor mathematical ability (e.g., children with mathematical learning disability or developmental dyscalculia). In contrast, individuals with high levels of mathematical giftedness have received relatively little attention.