An essay by Steve Nadis and Shing-Tung Yau describes how math helps us imagine the cosmos around us in new ways.

Recently, a team of chemists, mathematicians, physicists and nano-engineers at the University of Twente in the Netherlands developed a device to control the emission of photons with unprecedented precision. This technology could lead to more efficient miniature light sources, sensitive sensors, and stable quantum bits for quantum computing.

GPT-4 can score better than 95% of humans on aptitude tests.

The GPT-4 language model recently completed the Scholastic Aptitude Test (SAT), achieving a verbal score of 710 and a math score of 690, resulting in a combined score of 1400. Based on U.S. norms, this corresponds to a verbal IQ of 126, a math IQ of 126, and a full-scale IQ of 124. If taken at face value, one might conclude that GPT-4 surpasses 95% of the American population in intelligence and is approximately as intelligent as the average doctoral degree holder, medical doctor, or attorney.

However, the question remains: Is administering an IQ test to GPT-4 a valid undertaking or a significant categorization mistake?
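The "surpasses 95%" figure can be sanity-checked with a short calculation. The sketch below assumes the conventional IQ scaling (a normal distribution with mean 100 and standard deviation 15), which the excerpt does not state explicitly; `iq_percentile` is a hypothetical helper name used only for illustration.

```python
from statistics import NormalDist

# Assumption: IQ scores follow a normal distribution with mean 100, SD 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def iq_percentile(iq: float) -> float:
    """Return the fraction of the reference population scoring below `iq`."""
    return IQ_SCALE.cdf(iq)


# IQ values quoted in the excerpt above.
for label, iq in [("verbal", 126), ("math", 126), ("full-scale", 124)]:
    share = iq_percentile(iq)
    print(f"{label}: IQ {iq} -> higher than about {share:.1%} of the population")
```

Under that assumption, a full-scale IQ of 124 sits at roughly the 94.5th percentile, so "95%" is a rounded figure rather than an exact one.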

Noncommutative probability and categorical structure Quantum-like revolut…

The rate at which the universe is currently expanding is known as the Hubble rate. In recent years, different measurements have given different results for the Hubble rate, a discrepancy between theory and observation that has been called the “Hubble tension”. Now, a team of astrophysicists claims that the Hubble tension is gone and that supernova data were to blame. Let’s have a look.

Paper: https://iopscience.iop.org/article/10…

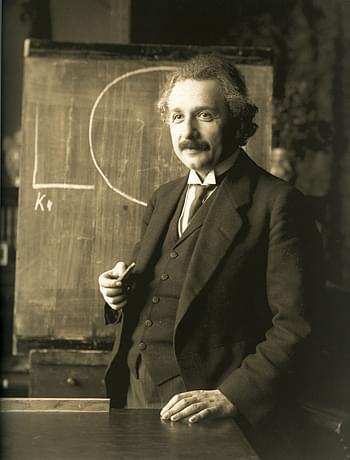

New discoveries about Jupiter could lead to a better understanding of Earth’s own space environment and influence a long-running scientific debate about the solar system’s largest planet. “By exploring a larger space such as Jupiter, we can better understand the fundamental physics governing Earth’s magnetosphere and thereby improve our space weather forecasting,” said Peter Delamere, a professor at the University of Alaska Fairbanks (UAF) Geophysical Institute and the UAF College of Natural Science and Mathematics.

“We are one big space weather event from losing communication satellites, our power grid assets, or both,” he said.

Space weather refers to disturbances in the Earth’s magnetosphere caused by interactions between the solar wind and the Earth’s magnetic field. These are generally associated with solar storms and the sun’s coronal mass ejections, which can lead to magnetic fluctuations and disruptions in power grids, pipelines and communication systems.

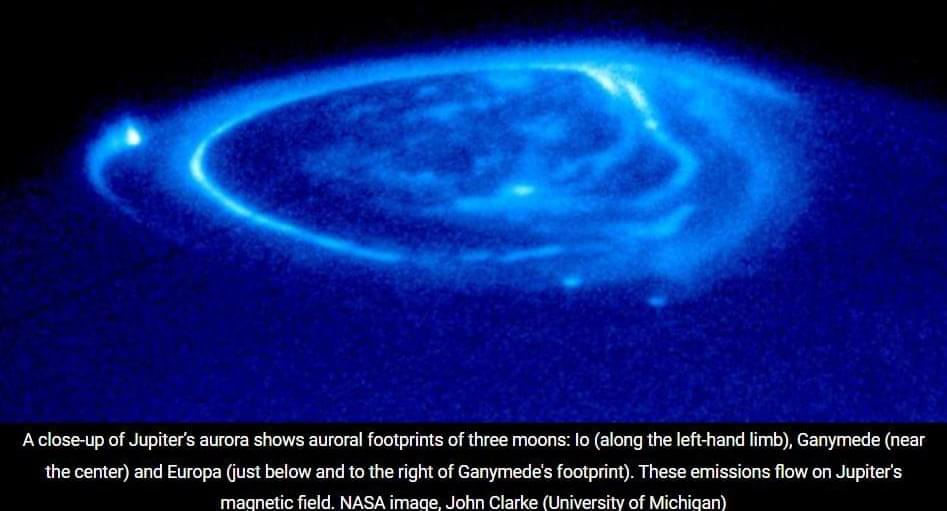

The Standard Model Lagrangian specifies all known particles that might come into being during a collision and how they might interact.

Particle physicists in search of the next theory of reality are consulting a mathematical structure that they know will never fail: a table of possibilities known as the S-matrix.

Life appears to require at least some instability. This fact should be considered a biological universality, proposes University of Southern California molecular biologist John Tower.

Biological laws, which describe patterns or organizing principles that appear to hold across living systems, are thought to be rare. While they can be squishier than the absolutes of math or physics, such rules in biology nevertheless help us better understand the complex processes that govern life.

Most examples we’ve found so far seem to concern themselves with the conservation of materials or energy, and therefore life’s tendency towards stability.