During the worst days of the COVID-19 pandemic, many of us became accustomed to news reports on the reproduction number R, the average number of new infections caused by a single infected person. If we were told that R was much greater than 1, that meant the number of infections was growing rapidly, and interventions (such as social distancing and lockdowns) were necessary. But if R was close to 1, then the disease was deemed to be under control and some relaxation of restrictions could be warranted. New mathematical modeling by Kris Parag from Imperial College London reveals limitations of using R, or a related growth-rate parameter, to assess the "controllability" of an epidemic [1]. As an alternative, Parag proposes a framework that treats an epidemic as a positive feedback loop. The model produces two new controllability parameters that describe how far a disease outbreak is from a stable condition, that is, one in which the feedback does not lead to growth.

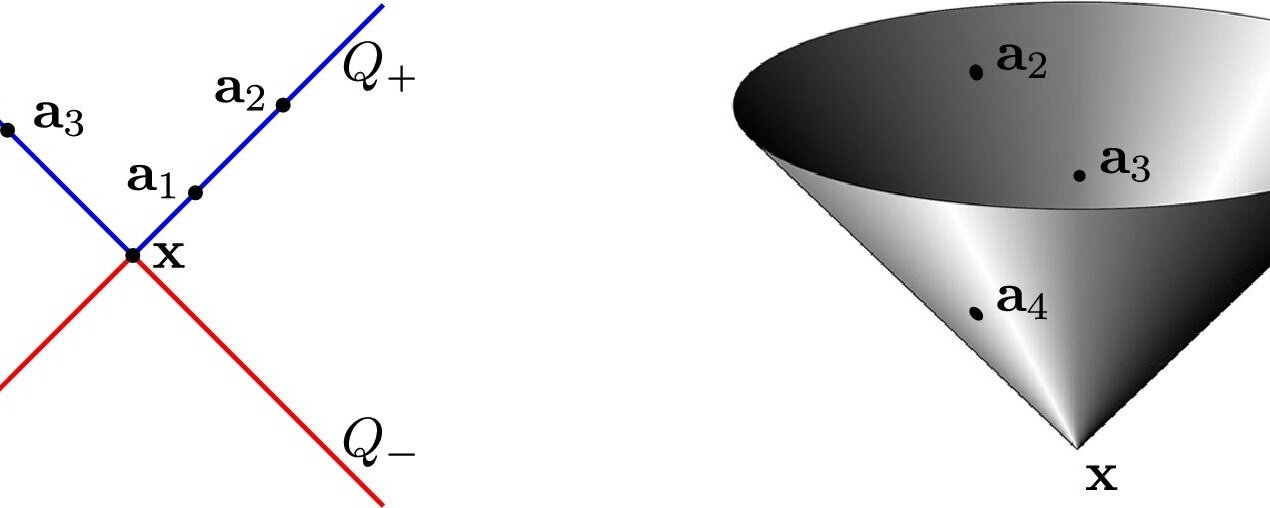

Parag's starting point is the classical mathematical description of how an epidemic evolves in time in terms of the reproduction number R. This approach, called the renewal model, has been widely used for infectious diseases such as COVID-19, SARS, influenza, Ebola, and measles. In this model, new infections are determined by past infections through a mathematical function called the generation-time distribution, which describes how long it takes for an infected person to infect someone else. Parag departs from this traditional approach by applying a Laplace transform, a close relative of the Fourier transform, which re-expresses the generation-time distribution, and with it the number of infections, in terms of complex exponential (growing or decaying oscillatory) functions rather than as a function of time. The Laplace transform is a standard tool in control theory, the field of engineering that deals with the control of machines and other dynamical systems by treating them as feedback loops.
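To make the renewal model and the role of the Laplace transform concrete, here is a minimal sketch, assuming a discrete daily time step and a hypothetical three-day generation-time distribution `W_EXAMPLE` (not taken from Parag's work). New infections are past infections weighted by the generation-time distribution and scaled by R. The Laplace transform W(r) of that distribution links R to the daily growth rate r through the standard Euler-Lotka relation R·W(r) = 1, so r > 0 exactly when R > 1. The function names here are illustrative only.

```python
import math

# Hypothetical generation-time distribution: probability that a secondary
# infection occurs 1, 2 or 3 days after the primary one (sums to 1).
W_EXAMPLE = [0.2, 0.5, 0.3]


def renewal(R, w, seed, days):
    """Discrete renewal model: I_t = R * sum_{s>=1} w_s * I_{t-s}."""
    incidence = list(seed)
    for _ in range(days):
        t = len(incidence)
        # Past infections weighted by the generation-time distribution
        load = sum(w[s - 1] * incidence[t - s]
                   for s in range(1, len(w) + 1) if t - s >= 0)
        incidence.append(R * load)
    return incidence


def laplace(w, r):
    """Discrete Laplace transform of the generation-time distribution:
    W(r) = sum_s w_s * exp(-r * s)."""
    return sum(ws * math.exp(-r * s) for s, ws in enumerate(w, start=1))


def growth_rate(R, w, lo=-5.0, hi=5.0, iters=200):
    """Daily growth rate r solving R * W(r) = 1 (Euler-Lotka), by bisection.

    R * W(r) decreases monotonically in r, so the root is unique; this
    sketch assumes it lies inside [lo, hi].
    """
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if R * laplace(w, mid) > 1.0:
            lo = mid  # R * W(mid) still above 1: the root is at larger r
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for R in (0.8, 1.0, 2.0):
        print(R, growth_rate(R, W_EXAMPLE))  # negative, about zero, positive
    I = renewal(2.0, W_EXAMPLE, seed=[1.0], days=60)
    # Long-run day-to-day ratio of incidence approaches exp(r)
    print(I[-1] / I[-2], math.exp(growth_rate(2.0, W_EXAMPLE)))
```

Solving for r by bisection keeps the sketch dependency-free; a library root finder would serve equally well.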

The first outcome of applying the Laplace transform to epidemic systems is a so-called transfer function, which maps input cases (such as infected travelers) onto output infections through a closed feedback loop. Control measures (such as quarantines and mask requirements) aim to disrupt this loop by acting as a kind of "friction" force. The framework yields two new parameters that naturally describe the controllability of the system: the gain margin and the delay margin. The gain margin quantifies how much infections must be scaled by interventions to stabilize the epidemic (the threshold of stability being R = 1). The delay margin is related to how long one can wait to implement an intervention. If, for example, the gain margin is 2 and the delay margin is 7 days, then the epidemic is stable provided that the number of infections doesn't double and that control measures are applied within a week.
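The feedback-loop picture can be illustrated with a small simulation, offered as a sketch under stated assumptions rather than as Parag's method. Imported cases u_t enter as the loop's external input, and local transmission is the feedback path: I_t = u_t + R_t·Σ w_s I_{t-s}. For constant R this corresponds to the closed-loop transfer function I(z) = U(z)/(1 − R·W(z)), whose value at z = 1 gives the total number of infections per imported case, 1/(1 − R), finite only when R < 1. The helper `delayed_control` is hypothetical: it divides transmission by a factor g from a chosen day onward, simply to show that waiting longer costs more infections. It does not compute the gain or delay margins defined in the paper.

```python
import math


def simulate_with_imports(R_of_t, w, imports, days):
    """Renewal model driven by imported cases u_t (the loop's input):

        I_t = u_t + R_t * sum_{s>=1} w_s * I_{t-s}

    For constant R this is the closed loop I(z) = U(z) / (1 - R * W(z)),
    with W(z) = sum_s w_s * z**(-s).
    """
    incidence = []
    for t in range(days):
        # Feedback path: earlier infections weighted by generation time
        load = sum(w[s - 1] * incidence[t - s]
                   for s in range(1, len(w) + 1) if t - s >= 0)
        u = imports[t] if t < len(imports) else 0.0
        incidence.append(u + R_of_t(t) * load)
    return incidence


def total_per_import(R):
    """Closed-loop gain at z = 1: 1 / (1 - R * W(1)) with W(1) = 1.
    Finite only for R < 1; for R >= 1 the loop is unstable."""
    return 1.0 / (1.0 - R) if R < 1 else math.inf


def delayed_control(R0, g, delay):
    """Hypothetical intervention: transmission R0 is divided by g from day `delay`."""
    return lambda t: R0 if t < delay else R0 / g


if __name__ == "__main__":
    w = [0.2, 0.5, 0.3]  # hypothetical generation-time distribution
    stable = simulate_with_imports(lambda t: 0.5, w, imports=[1.0], days=200)
    print(sum(stable), total_per_import(0.5))  # both approximately 2.0
    early = sum(simulate_with_imports(delayed_control(2.0, 4.0, 7), w, [1.0], 200))
    late = sum(simulate_with_imports(delayed_control(2.0, 4.0, 14), w, [1.0], 200))
    print(early, late)  # acting a week later yields more total infections
```

Passing R as a function of time (`R_of_t`) lets the same loop represent both an uncontrolled epidemic and one in which an intervention switches on at a given day.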