The rise of the smaller models.

Mathematicians at Loughborough University have turned their attention to a phenomenon that has intrigued scientists and cocktail enthusiasts alike: the way ouzo, a popular anise-flavored liquor, turns cloudy when water is added.

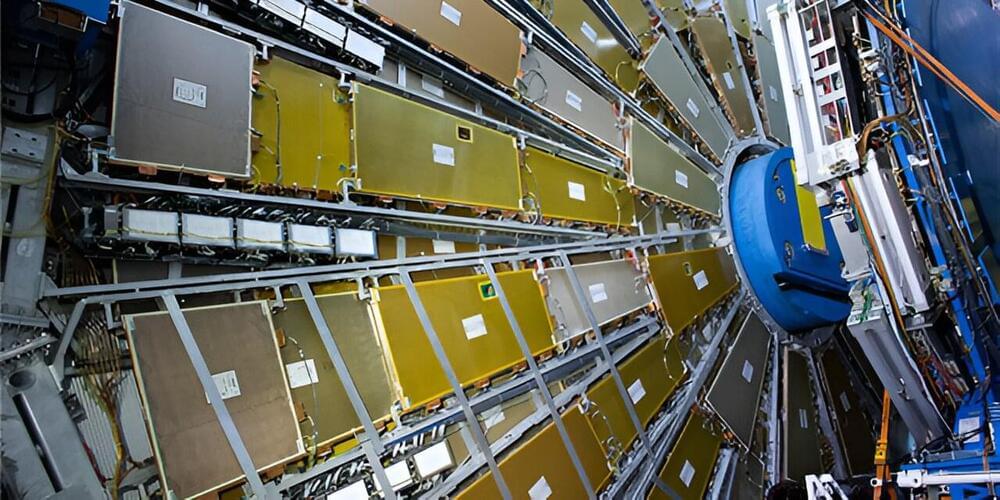

The Standard Model of particle physics is the mathematical description of the fundamental constituents of matter and their interactions. Although it is the accepted theory encapsulating our current state-of-the-art knowledge of particle physics, it is incomplete: it cannot account for several well-established phenomena in nature, such as gravity, dark matter, and neutrino masses.

Crivellin and Mellado’s article describes how events containing multiple leptons at the Large Hadron Collider (LHC) deviate from what the Standard Model predicts. These deviations, or anomalies, are excesses in the production of electrons and their heavier cousins, muons, above the Standard Model predictions.

“An anomaly is something that stands out as unusual or different from what is normal or expected. In this case, this is a deviation from the Standard Model of particle physics. Anomalies can be important because they often signal that something unexpected or significant has happened,” says Crivellin.

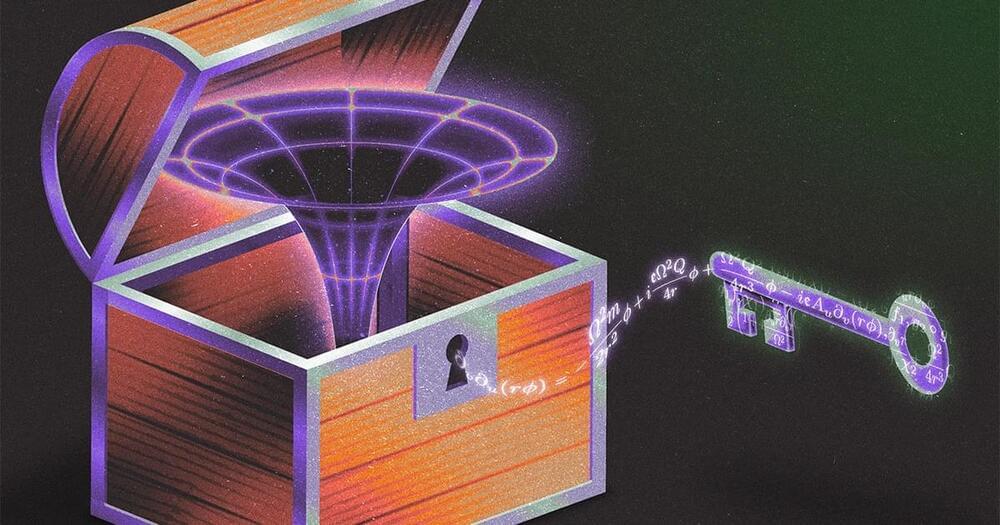

A new theory suggests time travel might be possible without creating paradoxes.

TL;DR:

A physics student from the University of Queensland, Germain Tobar, has developed a theory suggesting that time travel could, in principle, be possible without creating paradoxes. Tobar’s calculations suggest that space-time can adjust itself to avoid inconsistencies: even if a time traveler were to change the past, events would readjust so that the timeline remains self-consistent. The theory offers a new perspective on time loops and free will and is consistent with Einstein’s general relativity. While the mathematics appears sound, actual time travel remains a distant prospect.

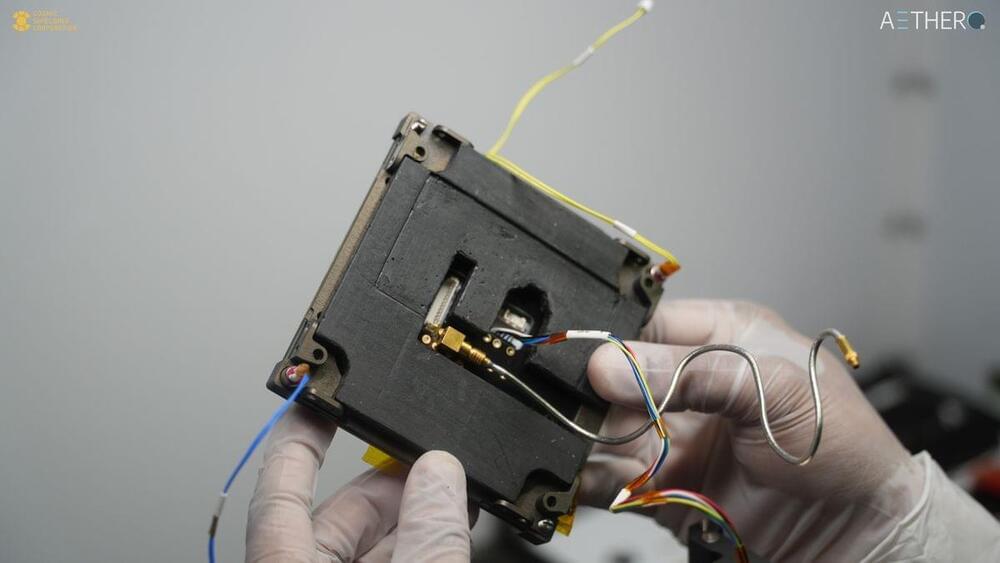

“This is going to be the fastest AI computer ever launched to space,” Yanni Barghouty, CSC’s cofounder and CEO, told Space.com. “The goal of this mission is simply to demonstrate the successful operation of an AI-capable Nvidia GPU on orbit with minimal to no errors while operating.”

The GPU will fly aboard a CubeSat built by Aethero, a San Francisco-based maker of high-performance, space-rated computers. During its four-month orbital mission, the GPU’s only task will be to perform mathematical calculations, whose results will be beamed to Earth and carefully checked for errors.

The Geometric Langlands Correspondence.

Edward Frenkel is a renowned mathematician and professor at the University of California, Berkeley, known for his work in representation theory, algebraic geometry, and mathematical physics. Frenkel is also the author of the bestselling book “Love and Math: The Heart of Hidden Reality,” which bridges the gap between mathematics and the broader public.


Terry Tao is one of the world’s leading mathematicians, and his many awards include the Fields Medal. He is Professor of Mathematics at the University of California, Los Angeles (UCLA). Following his talk, Tao is in conversation with fellow mathematician Po-Shen Loh.

The Oxford Mathematics Public Lectures are generously supported by XTX Markets.