An exercise in pure mathematics has led to a wide-ranging theory of how the world comes together.

It’s a sad day. The observatory has been used not only to observe radio signals from deep space but also, over the decades, it has become an iconic landmark, featured in countless films and TV shows, including the 1995 James Bond blockbuster “GoldenEye.”

The observatory has also made significant contributions to the Search for Extraterrestrial Intelligence (SETI), spotting mysterious radio signals emanating from distant corners of the universe.

“This decision is not an easy one for NSF to make, but safety of people is our number one priority,” Sean Jones, the assistant director for the mathematical and physical sciences directorate at NSF, told reporters on a conference call today, as quoted by The Verge.

Calculations show how theoretical ‘axionic strings’ could create odd behavior if produced in exotic materials in the lab.

A hypothetical particle that could solve one of the biggest puzzles in cosmology just got a little less mysterious. A RIKEN physicist and two colleagues have revealed the mathematical underpinnings that could explain how so-called axions might generate string-like entities that create a strange voltage in lab materials.

Axions were first proposed in the 1970s by physicists studying the theory of quantum chromodynamics, which describes how some elementary particles are held together within the atomic nucleus. The trouble was that this theory predicted some bizarre properties for known particles that are not observed. To fix this, physicists posited a new particle, later dubbed the axion after a brand of laundry detergent, because it helped clean up a mess in the theory.

AI designed to be aware of its own competence.

Ira Pastor, ideaXme life sciences ambassador, interviews Dr. Jiangying Zhou, DARPA program manager in the Defense Sciences Office, USA.

Ira Pastor comments:

On this episode of ideaXme, we meet once more with the U.S. Defense Advanced Research Projects Agency (DARPA). Unlike the past few shows, where we spent time with thought leaders from the Biological Technologies Office (BTO), today we focus on the Defense Sciences Office (DSO), which identifies and pursues high-risk, high-payoff research initiatives across a broad spectrum of science and engineering disciplines and transforms them into important, new game-changing technologies for U.S. national security. Current DSO themes include frontiers in math, computation and design; limits of sensing and sensors; complex social systems; and anticipating surprise.

Dr. Jiangying Zhou became a DARPA program manager in the Defense Sciences Office in November 2018, having served as a program manager in the Strategic Technology Office (STO) since January 2018. Her areas of research include machine learning, artificial intelligence, data analytics, and intelligence, surveillance and reconnaissance (ISR) exploitation technologies.

Sophomore math major Xzavier Herbert was never much into science fiction or the space program, but his skills in pure mathematics seem to keep drawing him into NASA’s orbit.

With an interest in representation theory, Herbert spent the summer virtually at NASA, studying connections between classical information theory and quantum information theory, each of which corresponds to a different set of laws: classical physics and quantum mechanics.

“What I’m doing involves how representation theory allows us to draw a direct analog from classical information theory to quantum information theory,” Herbert says. “It turns out that there is a mathematical way of justifying how these are related.”

Ira Pastor, ideaXme life sciences ambassador and CEO of Bioquark, interviews Dr. Michelle Francl, the Frank B. Mallory Professor of Chemistry at Bryn Mawr College and an adjunct scholar of the Vatican Observatory.

Ira Pastor comments:

Today, we have another fascinating guest working at the intersection of cutting-edge science and spirituality.

Dr. Michelle Francl is the Frank B. Mallory Professor of Chemistry at Bryn Mawr College, a distinguished women’s college in the suburbs of Philadelphia, as well as an adjunct scholar of the Vatican Observatory.

Dr. Francl has a Ph.D. in chemistry from the University of California, Irvine, did her postdoctoral research at Princeton University, and has taught physical chemistry, general chemistry, and mathematical modeling at Bryn Mawr College since 1986. In addition, Dr. Francl has research interests in theoretical and computational chemistry, structures of topologically intriguing molecules (molecules with weird shapes), history and sociology of science, and the rhetoric of science.

Dr. Francl is noted for developing new methodologies in computational chemistry. She is on a list of the 1,000 most-cited chemists, is a member of the editorial board for the Journal of Molecular Graphics and Modelling, is active in the American Chemical Society, and is the author of “The Survival Guide for Physical Chemistry”. In 1994, she was awarded the Christian R. and Mary F. Lindback Award by Bryn Mawr College for excellence in teaching.

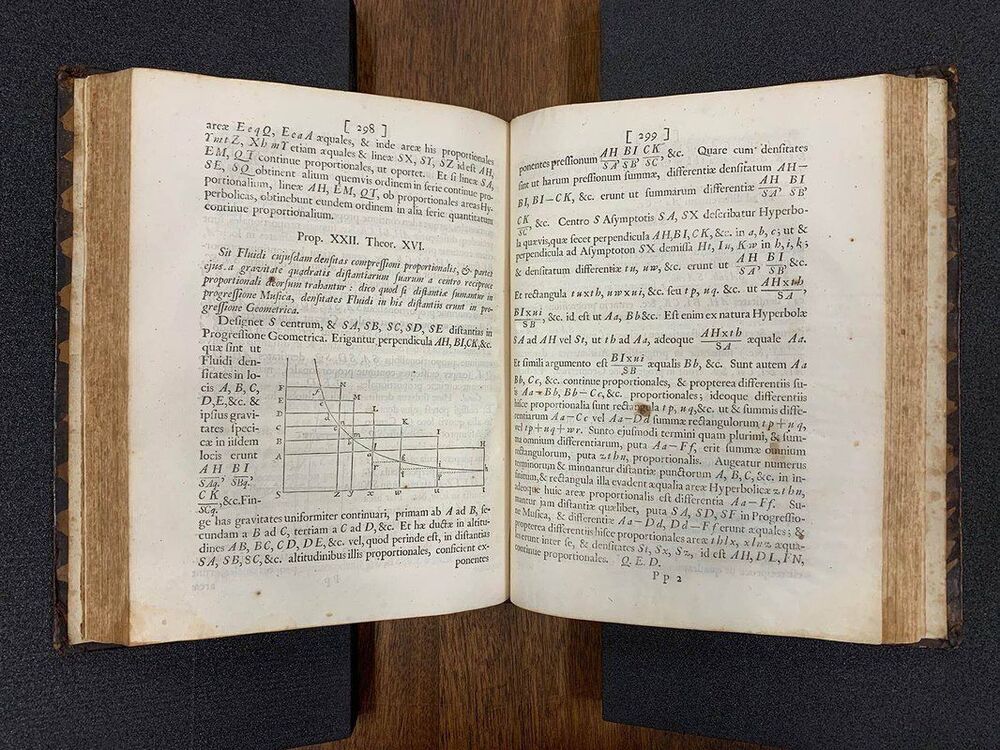

In a story of lost and stolen books and scrupulous detective work across continents, a Caltech historian and his former student have unearthed previously uncounted copies of Isaac Newton’s groundbreaking science book Philosophiae Naturalis Principia Mathematica, known more colloquially as the Principia. The new census more than doubles the number of known copies of the famous first edition, published in 1687. The last census of this kind, published in 1953, had identified 187 copies, while the new Caltech survey finds 386 copies. Up to 200 additional copies, according to the study authors, likely still exist undocumented in public and private collections.

“We felt like Sherlock Holmes,” says Mordechai (Moti) Feingold, the Kate Van Nuys Page Professor of the History of Science and the Humanities at Caltech, who explains that he and his former student, Andrej Svorenčík (MS ’08) of the University of Mannheim in Germany, spent more than a decade tracing copies of the book around the world. Feingold and Svorenčík are co-authors of a paper about the survey published in the journal Annals of Science.

Moreover, by analyzing ownership marks and notes scribbled in the margins of some of the books, in addition to related letters and other documents, the researchers found evidence that the Principia, long assumed to be accessible only to a select group of expert mathematicians, was more widely read and understood than previously believed.

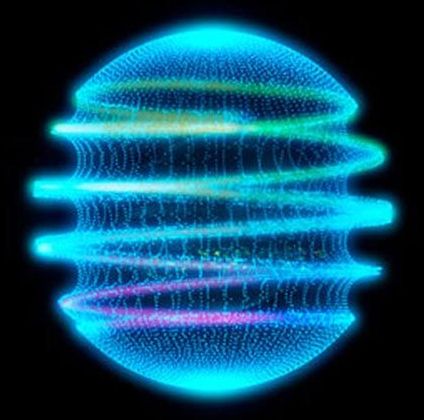

A team of researchers at Samsung has developed a slim-panel holographic video display that allows for viewing from a variety of angles. In their paper published in the journal Nature Communications, the group describes their new display device and their plans for making it suitable for use with a smartphone.

Despite predictions in science-fiction books and movies over the past several decades, 3D holographic video players are still not available to consumers. Existing players are too bulky and display video from limited viewing angles. In this new effort, the researchers at Samsung claim to have overcome these difficulties and built a demo device to prove it.

To build their demo device, which was approximately 25 cm tall, the team at Samsung added a steering-backlight unit with a beam deflector to widen the viewing angle. The demo had a viewing angle of 15 degrees at distances of up to one meter. The beam deflector was made by sandwiching liquid crystals between sheets of glass, producing a component that bends the light passing through it much like a prism. Testing showed that the beam deflector, combined with a tilting mechanism, increased the viewing angle 30-fold compared with conventional designs. The design is also slim, at just 1 cm thick. The device further includes a light modulator, a geometric lens and a holographic video processor capable of carrying out 140 billion operations per second. To process the video data, the researchers used a new algorithm that relies on lookup tables rather than direct mathematical calculations. The demo device was capable of displaying 4K-resolution holographic video at 30 frames per second.
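The article does not describe how Samsung’s algorithm works, so what follows is only a generic sketch of why a lookup table can stand in for math operations: an expensive function is evaluated once for a fixed set of inputs, and every later evaluation becomes a simple array index. The sketch assumes a toy interference pattern of the form 0.5 * (1 + cos(phase)) and a hypothetical table size of 1,024 entries; neither comes from the paper, and the names `COS_TABLE`, `cos_lookup` and `fringe_row` are illustrative only.

```python
import math

# Hypothetical table resolution (not from the paper): the phase range [0, 2*pi)
# is split into this many equal steps.
TABLE_SIZE = 1024

# Precompute cosine once; afterwards each evaluation is an index lookup, not a trig call.
COS_TABLE = [math.cos(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]


def cos_direct(phase: float) -> float:
    """Evaluate the cosine directly with a math-library call."""
    return math.cos(phase)


def cos_lookup(phase: float) -> float:
    """Approximate cos(phase) by rounding the phase to the nearest table entry."""
    # Convert the phase to a table index; the modulo wraps phases outside [0, 2*pi).
    index = round(phase / (2 * math.pi) * TABLE_SIZE) % TABLE_SIZE
    return COS_TABLE[index]


def fringe_row(phases: list[float], use_table: bool = True) -> list[float]:
    """Compute one row of a toy interference pattern, 0.5 * (1 + cos(phase))."""
    f = cos_lookup if use_table else cos_direct
    return [0.5 * (1.0 + f(p)) for p in phases]


if __name__ == "__main__":
    phases = [0.01 * i for i in range(1000)]
    approx = fringe_row(phases)
    exact = fringe_row(phases, use_table=False)
    # Largest difference between the table-based and directly computed rows.
    print(max(abs(a - b) for a, b in zip(approx, exact)))
```

Rounding to the nearest of 1,024 steps limits the phase error to about pi/1024, so the toy fringe values differ from the direct calculation by less than 0.002. A larger table shrinks that error at the cost of memory, which is the basic trade-off any lookup-table scheme makes.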