New analysis supports Einstein’s general relativity and narrows the possible range of neutrino masses, while hinting that dark energy may be evolving.

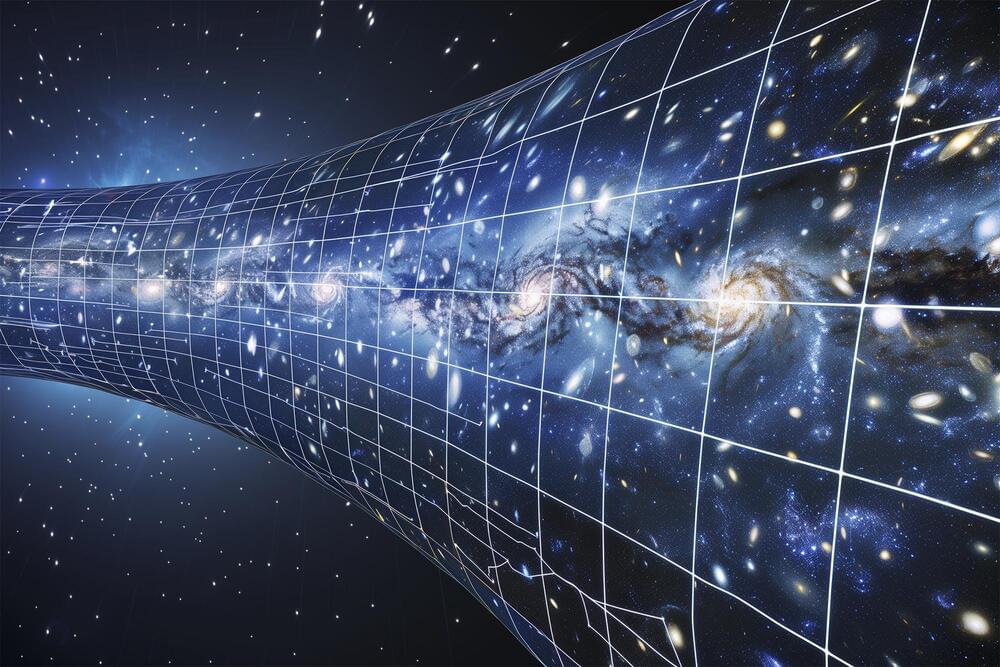

Gravity, the fundamental force sculpting the universe, has amplified tiny variations in the density of matter in the early cosmos into the vast networks of galaxies we see today. Using data from the Dark Energy Spectroscopic Instrument (DESI), scientists have traced how these cosmic structures grew over the past 11 billion years. The research is the most precise test to date of how gravity behaves on the largest scales, offering new insight into how the universe formed and has evolved.

Introduction to DESI and its global impact.