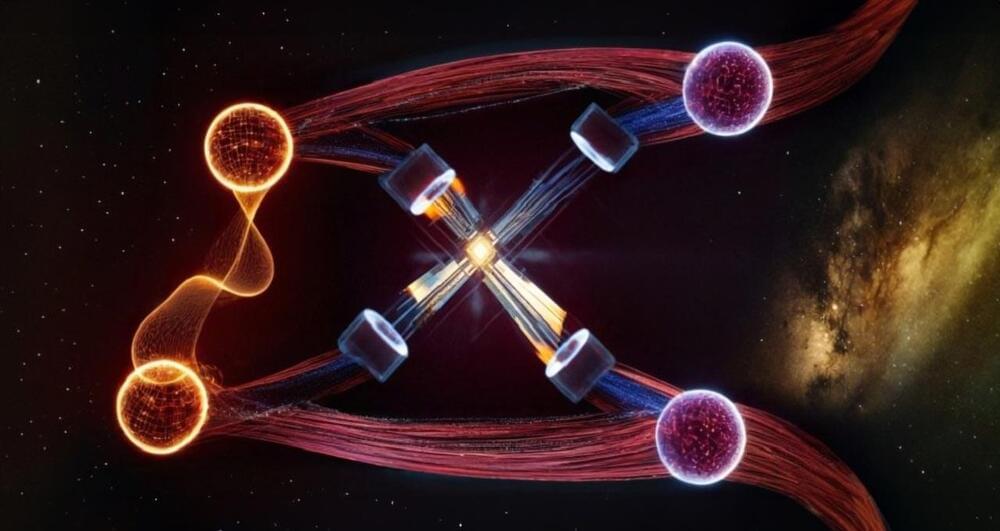

The SETI Institute has launched a new grants program to advance technosignature science using the Allen Telescope Array (ATA), a crucial observatory in the search for extraterrestrial technology. The program, the first of its kind, will fund research ranging from observational techniques to theoretical models, with grants available to non-tenured faculty and post-prelim graduate students. Credit: SETI Institute.

The SETI Institute’s new grants program offers up to $100,000 per grant for advanced research into detecting extraterrestrial technosignatures, leveraging the capabilities of the Allen Telescope Array.

The SETI Institute has introduced a groundbreaking grants program focused on advancing technosignature science. The initiative is designed to fund innovative research that tackles essential observational, theoretical, and technical challenges in the search for technosignatures, which are potential signs of past or present extraterrestrial technology.