A phenomenon once confined to equations breaks into the observable world.

If you’re considering how your organization can use this revolutionary technology, one of the choices you will have to make is whether to use open-source or closed-source (proprietary) tools, models and algorithms.

Why is this decision important? Well, each option offers advantages and disadvantages when it comes to customization, scalability, support and security.

In this article, we’ll explore the key differences between the two approaches and the pros and cons of each, and explain the factors to consider when deciding which is right for your organization.

In the 1920s, Erwin Schrödinger wrote an equation that predicts how particles-turned-waves should behave. Now, researchers are perfectly recreating those predictions in the lab.

The “it” Mr Woodman is referring to is Sora, a new text-to-video AI model from OpenAI, the artificial intelligence research organisation behind viral chatbot ChatGPT.

Instead of using their broad technical skills in filmmaking, such as animation, to overcome obstacles in the process, Mr Woodman and his team relied only on the model to generate footage for them, shot by shot.

“We just continued generating and it was almost like post-production and production in the same breath,” says Patrick Cederberg, who also worked on the project.

CWI senior researcher Sander Bohté started working on neuromorphic computing back in 1998, as a PhD student, when the subject was barely on the map. In recent years, Bohté and his CWI colleagues have achieved a number of algorithmic breakthroughs in spiking neural networks (SNNs) that finally make neuromorphic computing practical: in theory, many AI applications could become a hundred to a thousand times more energy-efficient. This means it will be possible to put much more AI into chips, allowing applications to run on a smartwatch or a smartphone. Examples include speech recognition, gesture recognition and the classification of electrocardiograms (ECGs).

“I am really grateful that CWI, and former group leader Han La Poutré in particular, gave me the opportunity to follow my interest, even though at the end of the 1990s neural networks and neuromorphic computing were quite unpopular”, says Bohté. “It was high-risk work for the long haul that is now bearing fruit.”

Spiking neural networks (SNNs) more closely resemble the biology of the brain: they process discrete pulses (spikes) instead of the continuous signals used in classical neural networks. Unfortunately, this also makes them mathematically much more difficult to work with, so for many years SNNs were limited to small numbers of neurons. But thanks to clever algorithmic solutions, Bohté and his colleagues have managed to scale up the number of trainable spiking neurons, first to thousands in 2021 and then to tens of millions in 2023.
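To show what processing pulses means in practice, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron. The LIF neuron is a common textbook model of a spiking neuron. It is an assumption for illustration only, not necessarily the neuron model or training method used by Bohté's group. The function `simulate_lif` and all parameter values are hypothetical. The membrane potential leaks toward rest and accumulates input. Whenever it crosses a threshold, the neuron emits a discrete spike and resets.

```python
import math


def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron (illustrative sketch).

    input_current: one input value per time step (arbitrary units).
    Returns the indices of the time steps at which the neuron spiked.
    """
    decay = math.exp(-dt / tau)  # fraction of the potential above rest kept per step
    v = v_rest
    spike_times = []
    for t, i_in in enumerate(input_current):
        # Leak toward the resting potential, then add this step's input.
        v = v_rest + (v - v_rest) * decay + i_in
        if v >= v_threshold:
            spike_times.append(t)  # all-or-nothing output pulse
            v = v_reset            # hard reset after a spike
    return spike_times


# A constant input drives regular spiking; a weak input never reaches threshold.
print(simulate_lif([0.1] * 100))
print(simulate_lif([0.04] * 200))
```

The neuron's output is a list of spike times rather than a continuous activation value. The hard threshold-and-reset step has no useful gradient. That is one reason SNNs are harder to train with the standard backpropagation used for classical networks.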

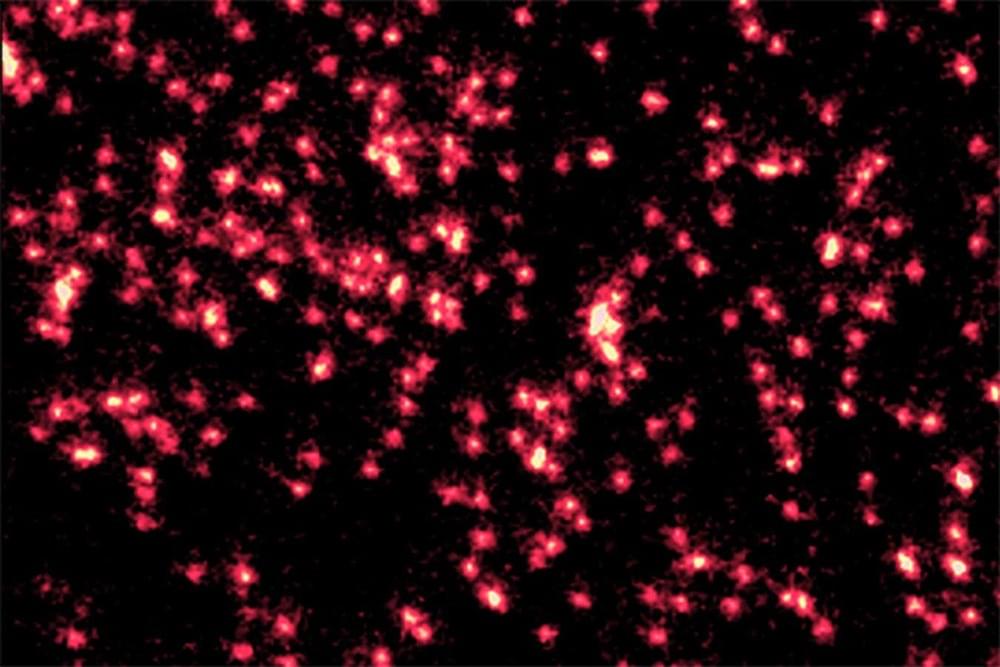

For massive stars, neutron stars and black holes are their final resting places. When a supergiant star runs out of fuel, it expands and then rapidly collapses in on itself. This collapse creates a neutron star: an object more massive than our sun crammed into a space 13 to 18 miles wide. In such a heavily condensed stellar environment, most electrons combine with protons to make neutrons, resulting in a dense ball of matter consisting mainly of neutrons. Researchers try to understand the forces that control this process by creating dense matter in the laboratory through collisions of neutron-rich nuclei and taking detailed measurements.
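The article gives the neutron star's size but not its mass. As a back-of-the-envelope sketch, assume a typical neutron-star mass of about 1.4 solar masses and treat the star as a uniform sphere. That mass is an assumption, not a figure stated in the article. The helper `mean_density` and the constant names below are illustrative.

```python
import math

SOLAR_MASS_KG = 1.989e30               # mass of the Sun
SUN_MEAN_DENSITY_KG_M3 = 1410.0        # approximate mean density of the Sun
METERS_PER_MILE = 1609.344
ASSUMED_MASS_KG = 1.4 * SOLAR_MASS_KG  # assumption: typical neutron-star mass


def mean_density(mass_kg, diameter_miles):
    """Mean density (kg/m^3) of a uniform sphere with the given mass and diameter."""
    radius_m = diameter_miles * METERS_PER_MILE / 2
    volume_m3 = 4.0 / 3.0 * math.pi * radius_m ** 3
    return mass_kg / volume_m3


for width_miles in (13, 18):
    rho = mean_density(ASSUMED_MASS_KG, width_miles)
    ratio = rho / SUN_MEAN_DENSITY_KG_M3
    print(f"{width_miles} miles wide: {rho:.1e} kg/m^3, "
          f"about {ratio:.1e} times the Sun's mean density")
```

Under these assumptions, both widths give between roughly 2 × 10^17 and 6 × 10^17 kg/m^3. That is comparable to the density inside an atomic nucleus and on the order of 10^14 times the Sun's mean density. This is why colliding nuclei in the laboratory is a natural way to probe such matter.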

The U.S. Air Force Test Pilot School and the Defense Advanced Research Projects Agency were finalists for the 2023 Robert J. Collier Trophy, a formal acknowledgement of recent breakthroughs that have launched the machine-learning era within the aerospace industry. The teams worked together to test breakthrough artificial intelligence algorithms on the X-62A VISTA aircraft as part of DARPA’s Air Combat Evolution (ACE) program. In less than a calendar year, the teams went from the initial installation of live AI agents in the X-62A’s systems to demonstrating the first within-visual-range engagements between AI and human pilots, otherwise known as dogfights. In total, the team made more than 100,000 lines of flight-critical software changes across 21 test flights. Dogfighting is a highly complex scenario, and the X-62A used it to prove that non-deterministic artificial intelligence can be used safely in aerospace.

“The X-62A is an incredible platform, not just for research and advancing the state of tests, but also for preparing the next generation of test leaders. When ensuring the capability in front of them is safe, efficient, effective and responsible, industry can look to the results of what the X-62A ACE team has done as a paradigm shift,” said Col. James Valpiani, commandant of the Test Pilot School.

“The potential for autonomous air-to-air combat has been imaginable for decades, but the reality has remained a distant dream up until now. In 2023, the X-62A broke one of the most significant barriers in combat aviation. This is a transformational moment, all made possible by breakthrough accomplishments of the X-62A ACE team,” said Secretary of the Air Force Frank Kendall. Secretary Kendall will soon fly in the X-62A VISTA during a test flight at Edwards Air Force Base to witness AI in a simulated combat environment firsthand.

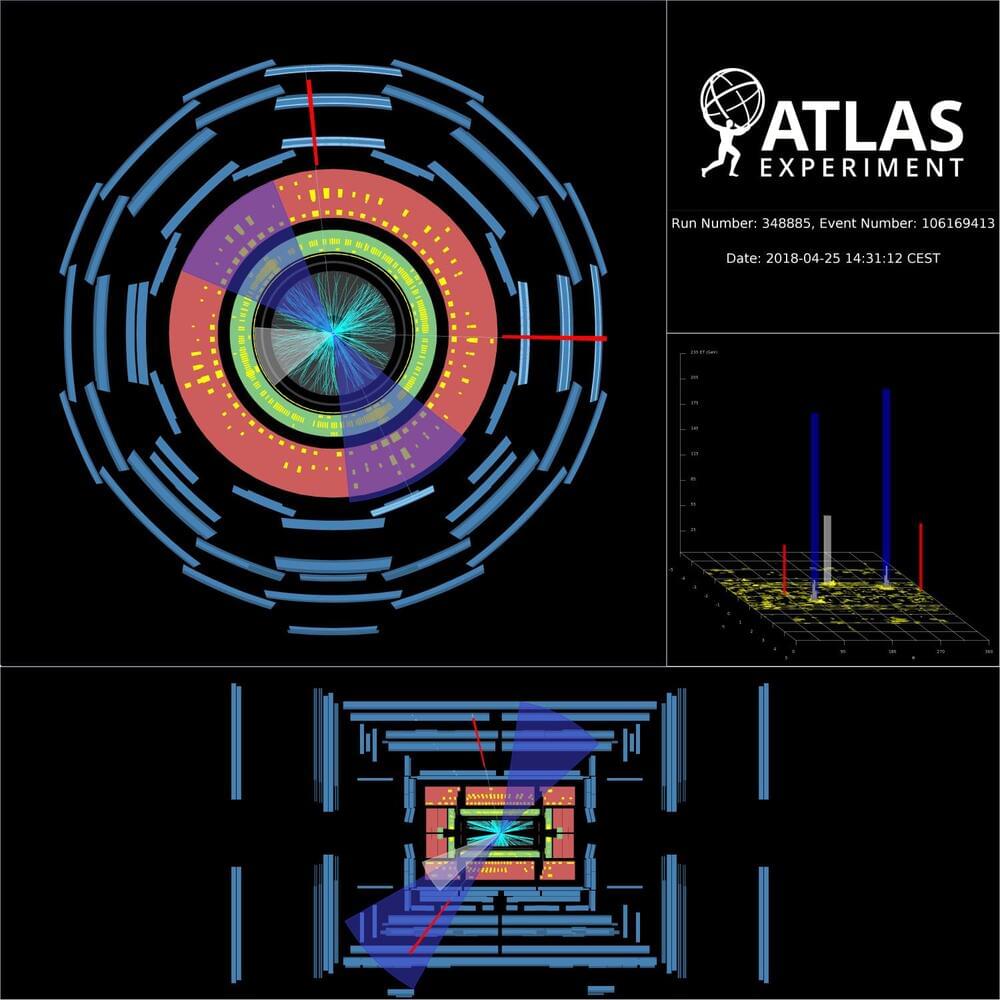

More than 40 years after its discovery, the Z boson remains a cornerstone of particle physics research. Through its production alongside heavy-flavour quarks (bottom and charm quarks), the Z boson provides a unique window into the internal dynamics of a proton’s constituents. Specifically, it allows researchers to probe the heavy-flavour contributions to “Parton Distribution Functions” (PDFs), which describe how a proton’s momentum is distributed among its constituent quarks and gluons.

Using the full LHC Run-2 dataset, the ATLAS Collaboration measured Z boson production in association with both bottom (b) and charm (c) quarks, the latter for the first time in ATLAS. In their new result, physicists studied Z boson decays into electron or muon pairs produced in association with “jets” of particles. They focused on jets arising from the hadronisation of b or c quarks, creating two jet “flavours”: b-jets and c-jets. Physicists developed a new multivariate algorithm that was able to identify the jet flavour, allowing them to measure the production of both Z+b-jets and Z+c-jets processes.

Researchers then took this one step further and applied a specialised fit procedure, called the ‘flavour-fit’, to determine the large background contribution from Z production together with jets of other flavours. This data-driven method allows a precise description of the jet flavours for every studied observable. This led to a significant improvement in the precision of the results, allowing a more stringent comparison with theoretical predictions.

So, what did they find? ATLAS researchers measured the production rates (or “cross sections”) of several physics observables.
These results were then compared with theoretical predictions, probing various approaches to describing the quark distributions in protons, the most recent computational improvements in QCD calculations and the effect of different treatments of the quark masses in the predictions. For example, Figure 1a shows the differential cross section for Z+1 b-jet production as a function of the transverse momentum of the most energetic b-jet in the event. Results show that predictions treating the b-quarks as massless (blue squares and red triangles) provide the best agreement with measurements. Z+2 b-jets angular observables are in general well described, while some discrepancies with data appear in the invariant mass of the two b-jets, whose spectrum is not well modelled by the studied predictions.

Figure 1: Measured fiducial cross-section as a function of a) leading b-jet pT for Z+b-jets events and b) leading c-jet x_F (its momentum along the beam axis relative to the initial proton momentum) for Z+c-jets events. Data (black) are compared with several theoretical predictions testing different theoretical flavour schemes, high-order accuracy calculations and intrinsic charm models. (Image: ATLAS Collaboration/CERN)

Studying Z+c-jets production offered a unique possibility to investigate the hypothesis of intrinsic (valence-like) components of c-quarks in the proton. With this result, the ATLAS Collaboration contributes to the long-standing debate on the existence of this phenomenon, which is currently supported by experimental measurements from the LHCb Collaboration. As shown in Figure 1b, the Z+c-jets results were compared with several hypotheses for intrinsic charm content. Due to the larger experimental and theoretical uncertainties on Z+c-jets processes, the current result makes no strong statement on the intrinsic c-quark component in the proton.
However, it does improve physicists’ sensitivity to this effect, as the new data will be used in future by PDF fitting groups to set tighter constraints on the intrinsic charm distribution in the proton. Overall, the new ATLAS result provides valuable input for refining theoretical predictions, furthering our understanding of the dynamics of heavy-flavour quark content in the proton.

About the event display: Display of a candidate Z boson decaying to two muons alongside two b-jets, recorded by the ATLAS detector at a centre-of-mass collision energy of 13 TeV. Blue cones indicate the b-jets, and the red lines indicate the muon tracks. Starting from the centre of the ATLAS detector, the reconstructed tracks of the charged particles in the inner detector are shown as cyan lines. The energy deposits in the electromagnetic (the green layer) and hadronic (the red layer) calorimeters are shown as yellow boxes. The hits in the muon spectrometer (the outer blue layer) are shown as light blue blocks. (Image: ATLAS Collaboration/CERN)

Learn more:

ATLAS Collaboration, Measurements of the production cross-section for a Z boson in association with b- or c-jets in proton–proton collisions at 13 TeV with the ATLAS detector (arXiv:2403.15093)

ATLAS Collaboration, Measurements of the production cross-section for a Z boson in association with b-jets in proton–proton collisions at 13 TeV with the ATLAS detector (JHEP 07 (2020) 044, arXiv:2003.11960)

LHCb Collaboration, Study of Z bosons produced in association with charm in the forward region (Phys. Rev. Lett. 128 (2022) 082001, arXiv:2109.08084)
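In general terms, a fit like the ‘flavour-fit’ compares data with template distributions for each jet flavour and adjusts how much of each template is needed to match the data. The sketch below is a generic, simplified illustration, not ATLAS's actual procedure. It fits scale factors for hypothetical b-, c- and light-jet templates in a made-up flavour-tagging discriminant using unweighted least squares. Real analyses typically use binned likelihood fits that include systematic uncertainties. All function names and numbers here are invented for illustration.

```python
def solve_linear(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_template_scales(data, templates):
    """Least-squares scale factors s_k so that sum_k s_k * templates[k] best matches data, bin by bin."""
    k = len(templates)
    # Normal equations (T^T T) s = T^T d, where T has one column per template.
    tt = [[sum(x * y for x, y in zip(templates[i], templates[j])) for j in range(k)]
          for i in range(k)]
    td = [sum(x * d for x, d in zip(templates[i], data)) for i in range(k)]
    return solve_linear(tt, td)


# Hypothetical expected counts in four bins of a flavour-tagging discriminant
# (left: light-like, right: b-like).
b_jets = [5, 10, 30, 60]
c_jets = [10, 30, 40, 15]
light_jets = [70, 25, 8, 2]
# Pseudo-data built as 1.2 * b + 0.8 * c + 1.0 * light, so the fit should recover those factors.
data = [84, 61, 76, 86]

scales = fit_template_scales(data, [b_jets, c_jets, light_jets])
print([round(s, 6) for s in scales])
```

Because the pseudo-data were built exactly from the templates, the fit recovers the factors 1.2, 0.8 and 1.0. With real, fluctuating data, the fitted factors would carry statistical uncertainties.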

An easy-to-use technique could assist everyone from economists to sports analysts.

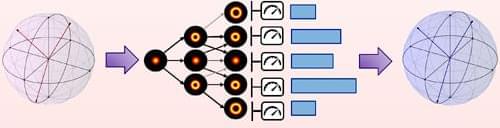

Pollsters trying to predict presidential election results and physicists searching for distant exoplanets have at least one thing in common: They often use a tried-and-true scientific technique called Bayesian inference.

Bayesian inference allows these scientists to effectively estimate some unknown parameter — like the winner of an election — from data such as poll results. But Bayesian inference can be slow, sometimes consuming weeks or even months of computation time or requiring a researcher to spend hours deriving tedious equations by hand.
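Bayesian inference combines a prior belief with the likelihood of the observed data to produce a posterior distribution over the unknown quantity. As a minimal sketch, consider an invented poll in which 520 of 1,000 respondents back one candidate. We place a uniform Beta(1, 1) prior on that candidate's vote share. This toy case has an exact answer, a Beta(521, 481) posterior. That lets us check a random-walk Metropolis sampler, one common general-purpose way of approximating posteriors when no exact answer exists. This is a generic illustration, not the technique the article goes on to describe. The function names and settings are hypothetical.

```python
import math
import random


def log_posterior(p, k, n, alpha=1.0, beta=1.0):
    """Unnormalised log posterior of a vote share p after k of n respondents back the candidate.

    Binomial likelihood times a Beta(alpha, beta) prior; Beta(1, 1) is a uniform prior.
    """
    if not 0.0 < p < 1.0:
        return -math.inf  # zero posterior density outside (0, 1)
    return (k + alpha - 1) * math.log(p) + (n - k + beta - 1) * math.log(1 - p)


def metropolis(k, n, steps=50_000, step_size=0.02, burn_in=5_000, seed=0):
    """Random-walk Metropolis sampler for the posterior above; returns post-burn-in samples."""
    rng = random.Random(seed)
    p = 0.5
    current = log_posterior(p, k, n)
    samples = []
    for i in range(steps):
        proposal = p + rng.gauss(0.0, step_size)  # symmetric proposal
        candidate = log_posterior(proposal, k, n)
        # Accept with probability min(1, posterior ratio); 1 - random() lies in (0, 1].
        if math.log(1.0 - rng.random()) < candidate - current:
            p, current = proposal, candidate
        if i >= burn_in:
            samples.append(p)
    return samples


# Invented poll: 520 of 1,000 respondents back candidate A.
k, n = 520, 1000
samples = metropolis(k, n)
mcmc_mean = sum(samples) / len(samples)
exact_mean = (k + 1) / (n + 2)  # mean of the exact Beta(k + 1, n - k + 1) posterior
prob_ahead = sum(s > 0.5 for s in samples) / len(samples)
print(f"posterior mean: MCMC {mcmc_mean:.4f}, exact {exact_mean:.4f}")
print(f"P(vote share > 50%): {prob_ahead:.2f}")
```

Even this one-parameter toy needs tens of thousands of posterior evaluations. Realistic models with many parameters and expensive likelihoods can need far more, which helps explain why Bayesian inference can consume so much computation time.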