Restraining or slowing ageing hallmarks at the cellular level has been proposed as a route to increased organismal lifespan and healthspan. Consequently, there is great interest in anti-ageing drug discovery. However, this currently requires laborious and lengthy longevity analysis. Here, we present a novel screening readout for the expedited discovery of compounds that restrain ageing of cell populations in vitro and extend lifespan in vivo.

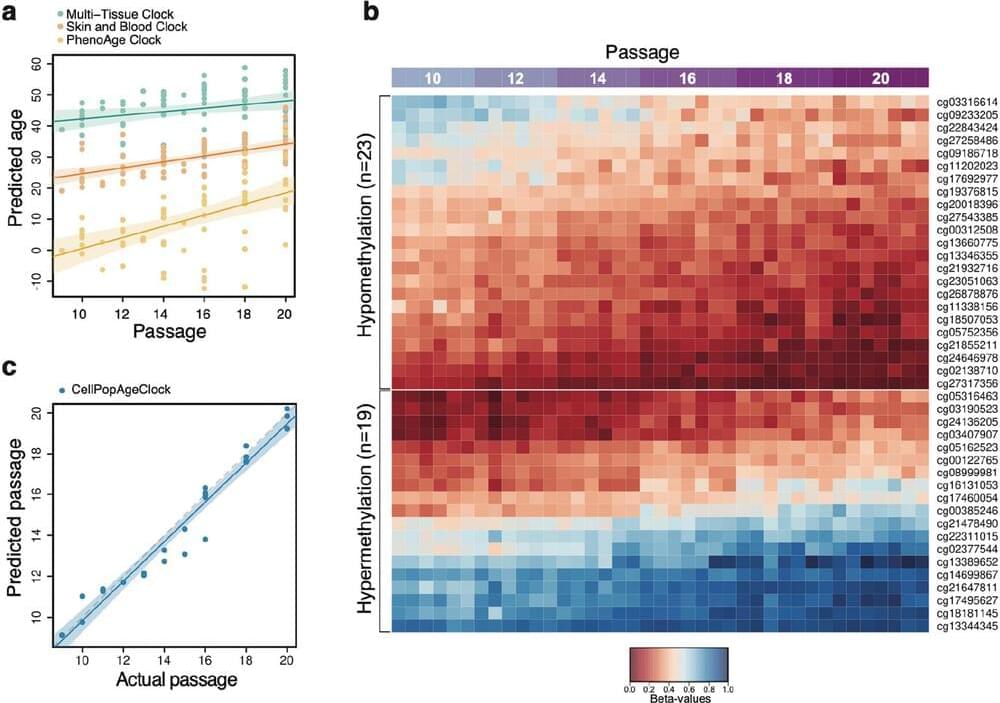

Using Illumina methylation arrays, we monitored DNA methylation changes accompanying long-term passaging of adult primary human cells in culture. This enabled us to develop, test, and validate the CellPopAge Clock, an epigenetic clock and underlying algorithm that is unique among existing epigenetic clocks in being designed to detect anti-ageing compounds in vitro. Additionally, we measured markers of senescence and performed longevity experiments in vivo in Drosophila to further validate our approach to discovering novel anti-ageing compounds. Finally, we benchmarked our epigenetic clock against other available epigenetic clocks to confirm its usefulness and its specialisation for primary cells in culture.

We developed a novel epigenetic clock, the CellPopAge Clock, to accurately monitor the age of a population of adult human primary cells. We find that the CellPopAge Clock can detect decelerated passage-based ageing of human primary cells treated with rapamycin or trametinib, two well-established longevity drugs. We then use the CellPopAge Clock as a screening tool to identify compounds that decelerate ageing of cell populations, uncovering the novel anti-ageing drugs torin2 and dactolisib (BEZ-235). We demonstrate that delayed epigenetic ageing in human primary cells treated with anti-ageing compounds is accompanied by a reduction in senescence and ageing biomarkers. Finally, we extend our screening platform in vivo by taking advantage of a specially formulated holidic medium for increased drug bioavailability in Drosophila. We show that torin2 and dactolisib (BEZ-235) increase longevity in vivo.