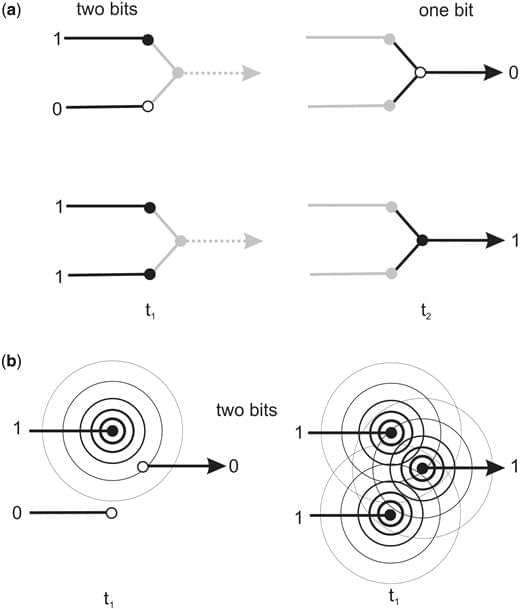

In this sense, the cemi theory incorporates Chalmers’ (Chalmers 1995) ‘double-aspect’ principle that information has both a physical and a phenomenal or experiential aspect. At the particulate level, a molecule of the neurotransmitter glutamate encodes bond energies, angles, etc. but nothing extrinsic to itself. Awareness makes no sense for this kind of matter-encoded information: what can glutamate be aware of except itself? Conversely, at the wave level, information encoded in physical fields is physically unified and can encode extrinsic information, as utilized in TV and radio signals. This EM field-based information will, according to the double-aspect principle, be a suitable substrate for experience. As proposed in my earlier paper (McFadden 2002a), ‘awareness will be a property of any system in which information is integrated into an information field that is complex enough to encode representations of real objects in the outside world (such as a face)’. Nevertheless, awareness is meaningless unless it can communicate, so only fields that have access to a motor system, such as the cemi field, are candidates for any scientific notion of consciousness.

I previously proposed (McFadden 2013b) that complex information acquires its meaning, in the sense of the binding of all the varied aspects of a mental object, in the brain’s EM field. Here, I extend this idea to propose that meaning is an algorithm experienced in its entirety, from problem to solution, as a single percept in the global workspace of the brain’s EM field. This is where distributed information encoded in millions of physically separated neurons comes together. It is where Shakespeare’s words are turned into his poetry. It is also where problems and solutions, such as how to untangle a rope from the wheels of a bicycle, are grasped in their entirety.

There are, of course, many unanswered questions, such as the degree and extent of synchrony required to encode conscious thoughts, the influence of drugs or anaesthetics on the cemi field, or whether cemi fields are causally active in animal brains. Yet the cemi theory provides a new paradigm in which consciousness is rooted in an entirely physical, measurable and artificially malleable structure and is amenable to experimental testing. The cemi field theory thereby delivers a kind of dualism, but it is a scientific dualism built on the distinction between matter and energy, rather than matter and spirit. Consciousness is what algorithms that exist simultaneously in the space of the brain’s EM field feel like.