Summary: A study finds that London taxi drivers, when planning a route, use their initial “offline thinking” phase to prioritize complex and distant junctions rather than considering streets in sequence. This efficient, intuitive strategy draws on their spatial awareness and contrasts with AI route-planning algorithms, which typically work through a route step by step.

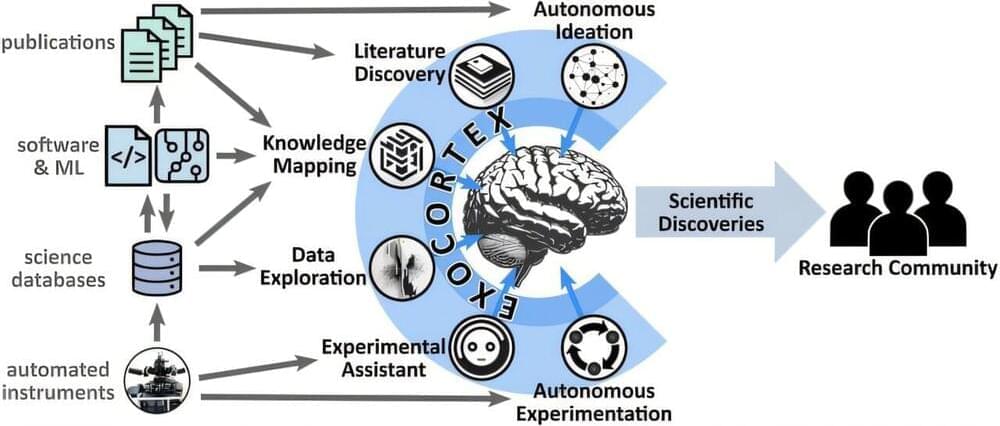

The findings highlight the unique planning abilities of expert human navigators, which are shaped by their deep memory of London’s intricate street network. The researchers suggest that studying the intuition of human experts could improve AI algorithms, especially for tasks involving flexible planning and human-AI collaboration.