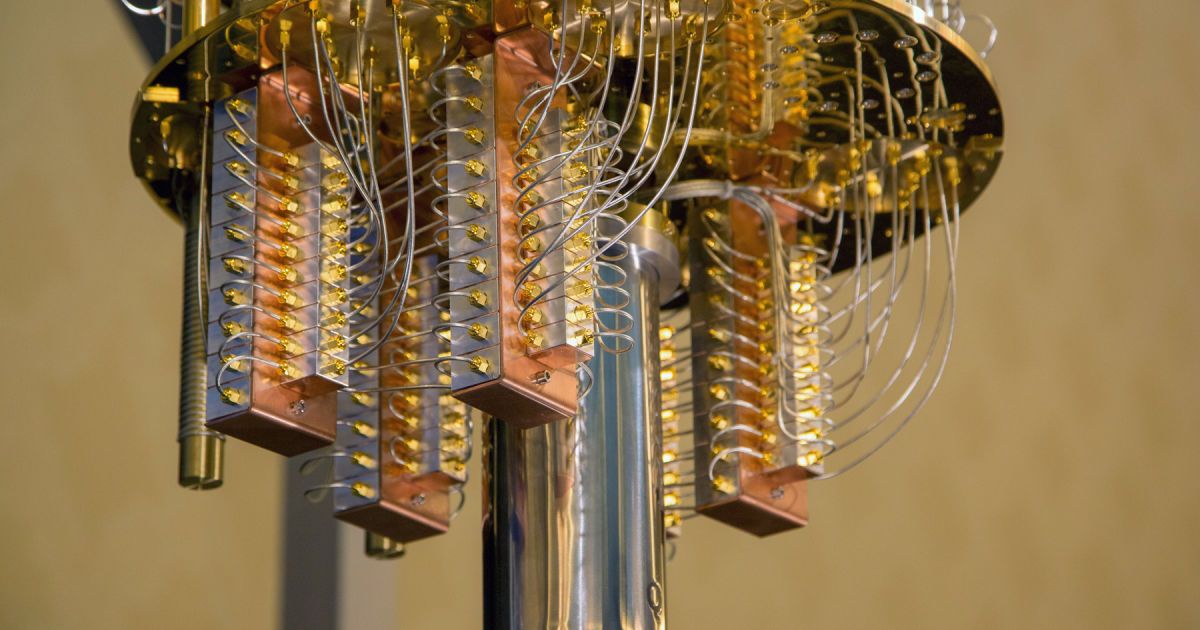

PETER SINGER, AN expert on future warfare at the New America think-tank, is in no doubt. “What we have is a series of technologies that change the game. They’re not science fiction. They raise new questions. What’s possible? What’s proper?” Mr Singer is talking about artificial intelligence, machine learning, robotics and big-data analytics. Together they will produce systems and weapons with varying degrees of autonomy, from operating under human supervision to “thinking” for themselves. The most decisive factor on the battlefield of the future may be the quality of each side’s algorithms. Combat may speed up so much that humans can no longer keep up.

Frank Hoffman, a fellow of the National Defence University who coined the term “hybrid warfare”, believes that these new technologies have the potential to change not just the character of war but possibly even its supposedly immutable nature as a contest of wills. For the first time, the human factors that have defined success in war, “will, fear, decision-making and even the human spark of genius, may be less evident,” he says.

Weapons with a limited degree of autonomy are not new. In 1943 Germany produced a torpedo with an acoustic homing device that helped it find its way to its target. Tomahawk cruise missiles, once fired, can adjust their course using a digital map of Earth’s contours. Anti-missile systems are pre-programmed to decide when to fire and engage an incoming target because the human brain cannot react fast enough.