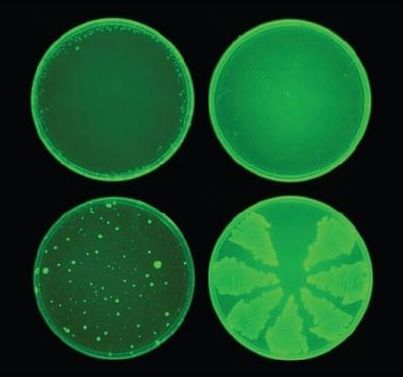

Under the watchful eye of a microscope, busy little blobs scoot around in a field of liquid, moving forward, turning around, sometimes spinning in circles. Drop cellular debris onto the plain and the blobs will herd it into piles. Flick any blob onto its back and it’ll lie there like a flipped-over turtle.

Their behavior is reminiscent of a microscopic flatworm in pursuit of its prey, or even a tiny animal called a water bear—a creature complex enough in its bodily makeup to manage sophisticated behaviors. The resemblance is an illusion: These blobs consist of only two things, skin cells and heart cells from frogs.

Writing today in the Proceedings of the National Academy of Sciences, researchers describe how they’ve engineered so-called xenobots (named for the species of frog, Xenopus laevis, whence their cells came) with the help of evolutionary algorithms. They hope that this new kind of organism, made of contracting cells and passive cells stuck together, and its eerily advanced behavior can help scientists unlock the mysteries of cellular communication.