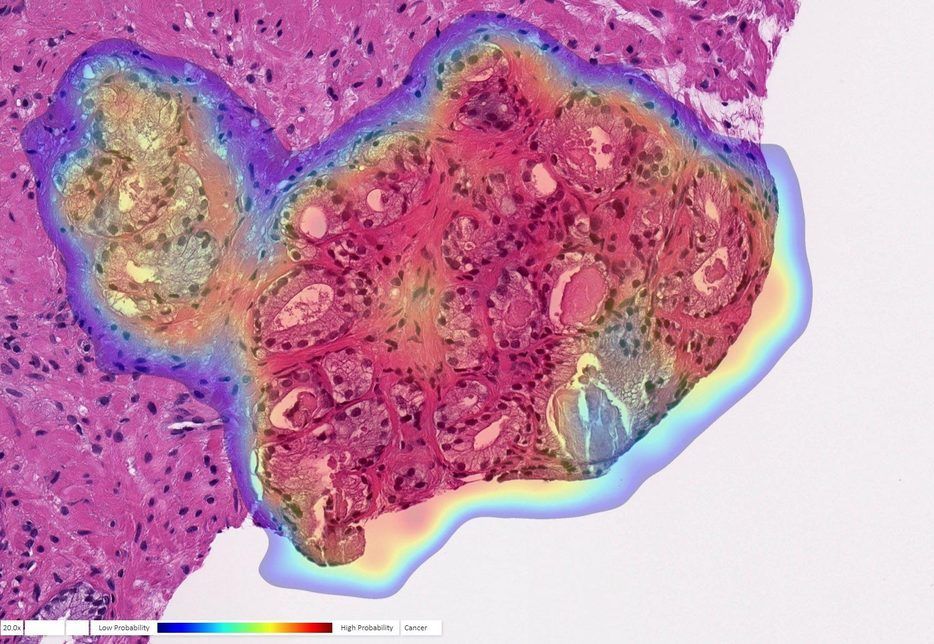

Proteins are essential to the life of cells, carrying out complex tasks and catalyzing chemical reactions. Scientists and engineers have long sought to harness this power by designing artificial proteins that can perform new tasks, such as treating disease, capturing carbon, or harvesting energy. But many of the processes designed to create such proteins are slow and complex, with a high failure rate.

In a breakthrough that could have implications across the healthcare, agriculture, and energy sectors, a team led by researchers in the Pritzker School of Molecular Engineering (PME) at the University of Chicago has developed an artificial intelligence-led process that uses big data to design new proteins.

By developing machine-learning models that can review protein information culled from genome databases, the researchers found relatively simple design rules for building artificial proteins. When the team constructed these artificial proteins in the lab, the proteins performed their chemistry so well that they rivaled those found in nature.