Featureless “cost functions” prevent quantum machine learning algorithms from reconstructing scrambled information.

Is the physical universe independent of us, or is it created by our minds, as suggested by scientist Robert Lanza?

Army researchers have developed a pioneering framework that provides a baseline for the development of collaborative multi-agent systems.

The framework is detailed in the survey paper “Survey of recent multi-agent reinforcement learning algorithms utilizing centralized training,” which is featured in the SPIE Digital Library. Researchers said the work will support research in reinforcement learning approaches for developing collaborative multi-agent systems such as teams of robots that could work side-by-side with future soldiers.

“We propose that the underlying information-sharing mechanism plays a critical role in centralized learning for multi-agent systems, but there is limited study of this phenomenon within the research community,” said Army researcher and computer scientist Dr. Piyush K. Sharma of the U.S. Army Combat Capabilities Development Command, known as DEVCOM, Army Research Laboratory. “We conducted this survey of state-of-the-art reinforcement learning algorithms and their information-sharing paradigms as a basis for asking fundamental questions about centralized learning for multi-agent systems that would improve their ability to work together.”

Two tiny arrays of implanted electrodes relayed information from the brain area that controls the hands and arms to an algorithm, which translated it into letters that appeared on a screen. (Image: Erika Woodrum/HHMI/Nature)

New EPFL research has found that almost half of local Twitter trending topics in Turkey are fake, a scale of manipulation previously unheard of. It also shows for the first time that many trends are created solely by bots due to a vulnerability in Twitter’s Trends algorithm.

Social media has become ubiquitous in our modern, daily lives. It has changed the way that people interact, connecting us in previously unimaginable ways. Yet, where once our social media networks probably consisted of a small circle of friends, most of us are now part of much larger communities that can influence what we read, do, and even think.

One influencing mechanism, for example, is “Twitter Trends.” The platform uses an algorithm to determine hashtag-driven topics that become popular at a given point in time, alerting Twitter users to the top words, phrases, subjects and popular hashtags globally and locally.

The researchers started with a sample taken from the temporal lobe of a human cerebral cortex, measuring just 1 mm³. This was stained for visual clarity, coated in resin to preserve it, and then cut into about 5300 slices, each about 30 nanometers (nm) thick. These were then imaged using a scanning electron microscope at a resolution down to 4 nm. That created 225 million two-dimensional images, which were then stitched back together into one 3D volume.

Machine learning algorithms scanned the sample to identify the different cells and structures within. After a few passes by different automated systems, human eyes “proofread” some of the cells to ensure the algorithms were correctly identifying them.

The end result, which Google calls the H01 dataset, is one of the most comprehensive maps of the human brain ever compiled. It contains 50000 cells and 130 million synapses, as well as smaller structures of the cells such as axons, dendrites, myelin and cilia. But perhaps the most stunning statistic is that the whole thing takes up 1.4 petabytes of data, or more than a million gigabytes.
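
The figures above can be sanity-checked with a little unit arithmetic. The sketch below is illustrative only: it assumes decimal (SI) prefixes, so 1 PB = 10^6 GB and 1 mm = 10^6 nm, and the helper names `stack_thickness_mm` and `petabytes_to_gigabytes` are hypothetical. Taking the rounded numbers at face value, about 5300 slices at about 30 nm each cover only about 0.16 mm of depth, so the 1 mm³ sample must extend further laterally than it does in depth.

```python
# Back-of-envelope checks on the figures quoted for the H01 dataset.
# Assumption: decimal (SI) prefixes, so 1 PB = 10**6 GB and 1 mm = 10**6 nm.

NM_PER_MM = 10**6
GB_PER_PB = 10**6


def stack_thickness_mm(num_slices: int, slice_nm: float) -> float:
    """Total depth covered by a stack of equally thick serial sections, in mm."""
    return num_slices * slice_nm / NM_PER_MM


def petabytes_to_gigabytes(pb: float) -> float:
    """Convert petabytes to gigabytes using decimal prefixes."""
    return pb * GB_PER_PB


# Rounded values as reported: about 5300 slices, about 30 nm each, 1.4 PB total.
depth_mm = stack_thickness_mm(5300, 30)
size_gb = petabytes_to_gigabytes(1.4)

print(f"stack depth: {depth_mm:.3f} mm")
print(f"dataset size: {size_gb:,.0f} GB")
```

Using binary prefixes (1 PiB = 2^20 GiB) instead would change the exact count slightly but would not change the conclusion that the dataset exceeds a million gigabytes.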

With 2700 locations across 10000 U.S. communities, the YMCA is becoming a major hub for healthy living, from vaccinations and diabetes prevention programs to healthy aging and wellness (Siva Balu, VP/Chief Information Officer, The Y of the U.S.A.)

Mr. Siva Balu is Vice President and Chief Information Officer of YMCA of the U.S. (Y-USA), where he is rethinking and reorganizing the organization’s information technology strategy to meet the changing needs of Y-USA and Ys throughout the country.

The YMCA is a leading nonprofit committed to strengthening community by connecting all people to their potential, purpose and each other, with a focus on empowering young people, improving health and well-being, and inspiring action in and across communities. With a presence in 10000 neighborhoods across the nation, it has a real ability to deliver positive change.

Mr. Balu has 20 years of healthcare technology experience in leadership roles at Blue Cross Blue Shield, the nation’s largest health insurer, which provides healthcare coverage to over 107 million members, or 1 in 3 Americans. He most recently led the Enterprise Information Technology team at the Blue Cross Blue Shield Association (BCBSA), a national federation of Blue Cross and Blue Shield companies.

Mr. Balu was responsible for leading all aspects of IT, including architecture, application and product development, big data, business intelligence and data analytics, information security, project management, digital, infrastructure and operations. He created several highly scalable, innovative solutions that meet the needs of members and patients in communities throughout the country, and he led the adoption of new technologies for national and international users.

Deep-learning expert weighs in on getting to AGI, assessing algorithmic intelligence, and autonomous vehicles

Mark Ryan is a Data Science Manager at Intact Insurance and the author of the recently released “Deep Learning with Structured Data.” He holds a master’s degree in Computer Science from the University of Toronto and is interested in chatbots and natural language processing.

I think we should. If it is corrupt or makes mistakes, it will at least be correctable.

LONDON — A study has found that most Europeans would like to see some of their members of parliament replaced by algorithms.

Researchers at IE University’s Center for the Governance of Change asked 2769 people from 11 countries worldwide how they would feel about reducing the number of national parliamentarians in their country and giving those seats to an AI that would have access to their data.

The results, published Thursday, showed that despite AI’s obvious limitations, 51% of Europeans said they were in favor of such a move.

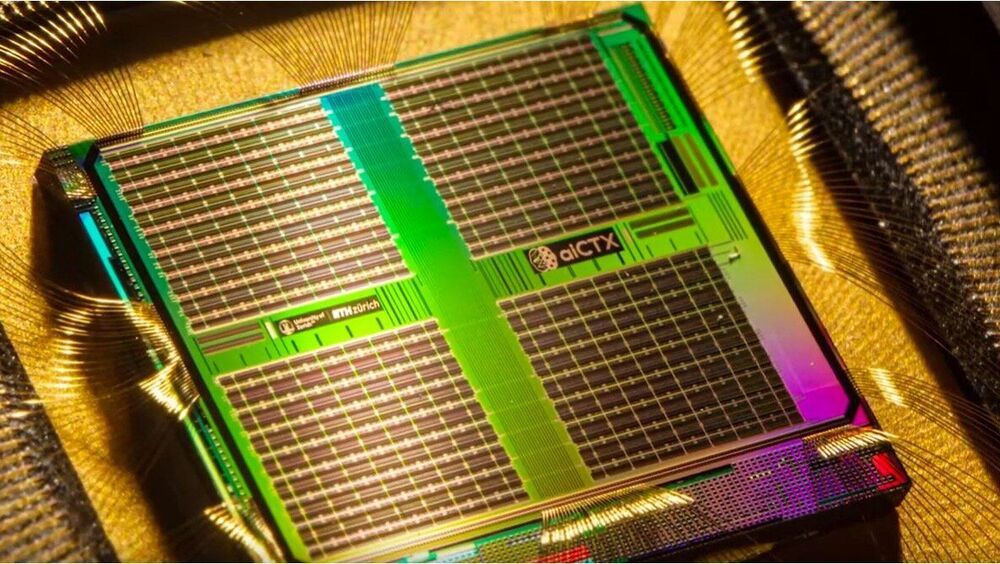

Researchers from Zurich have developed a compact, energy-efficient device made from artificial neurons that is capable of decoding brainwaves. The chip uses data recorded from the brainwaves of epilepsy patients to identify which regions of the brain cause epileptic seizures. This opens up new avenues for treatment.

Current neural network algorithms produce impressive results that help solve a wide range of problems. However, the electronic devices used to run these algorithms still require too much processing power. These artificial intelligence (AI) systems simply cannot compete with an actual brain when it comes to processing sensory information or interacting with the environment in real time.