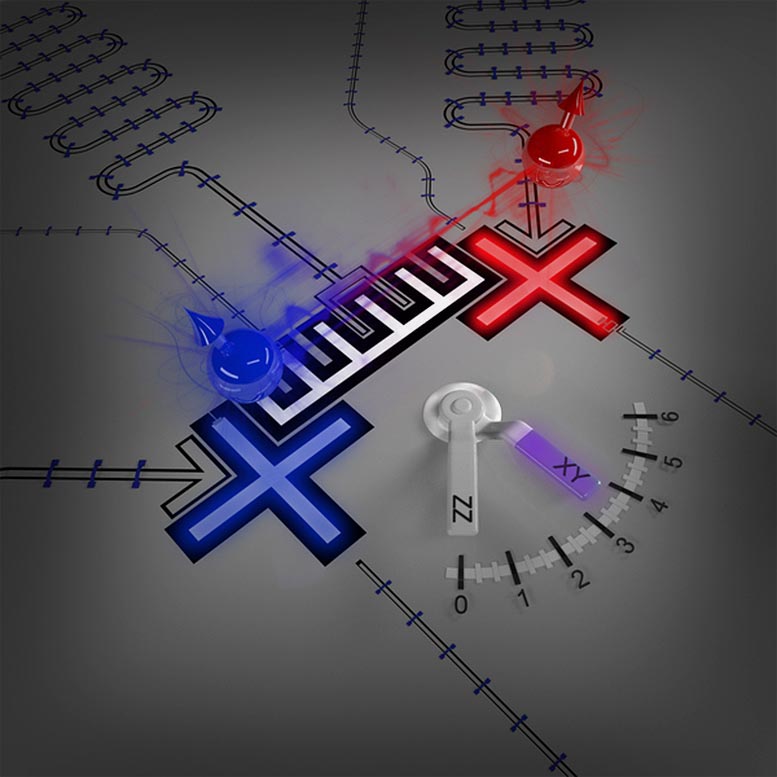

Russian scientists have experimentally demonstrated the existence of a new type of quasiparticle: previously unknown excitations of coupled photon pairs in qubit chains. This discovery could be a step towards disorder-robust quantum metamaterials. The study was published in Physical Review B.

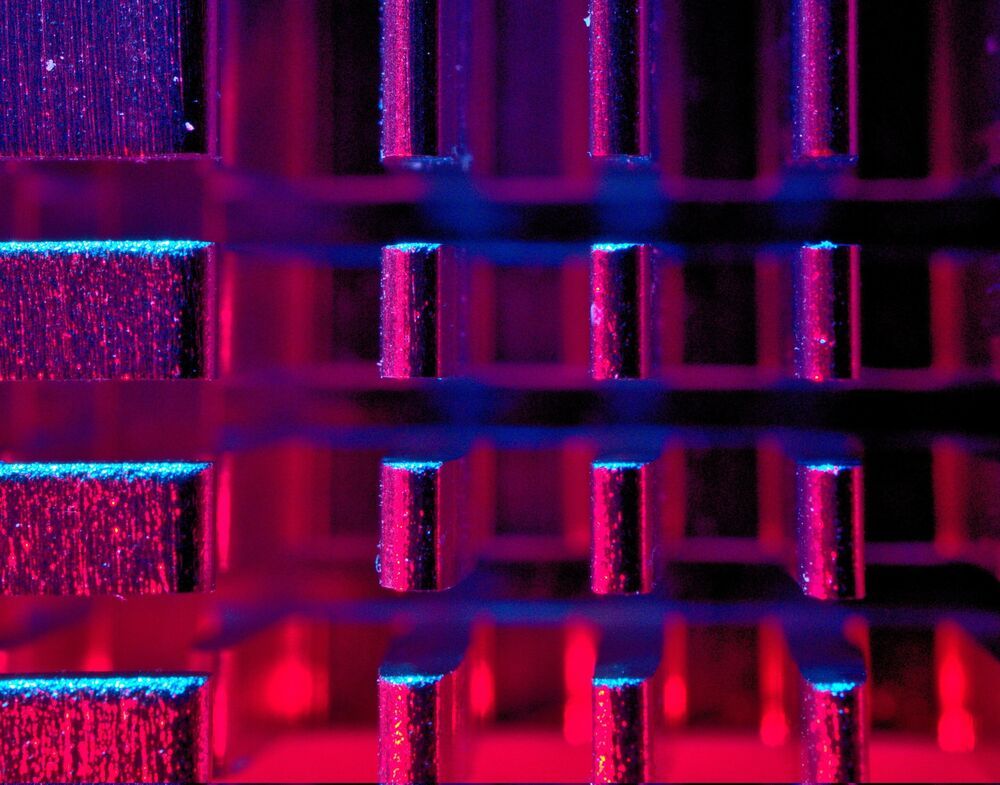

Superconducting qubits are a leading qubit modality, pursued by both industry and academia for quantum computing applications. However, the performance of quantum computers is strongly affected by decoherence, which contributes to a qubit’s extremely short lifetime and causes computational errors. Another major challenge is the low controllability of large qubit arrays.

Metamaterial quantum simulators provide an alternative approach to quantum computing, as they do not require a large amount of control electronics. The idea behind this approach is to create artificial matter out of qubits whose physics obeys the same equations as some real material. Alternatively, the simulator can be programmed to embody matter with properties that have not yet been discovered in nature.