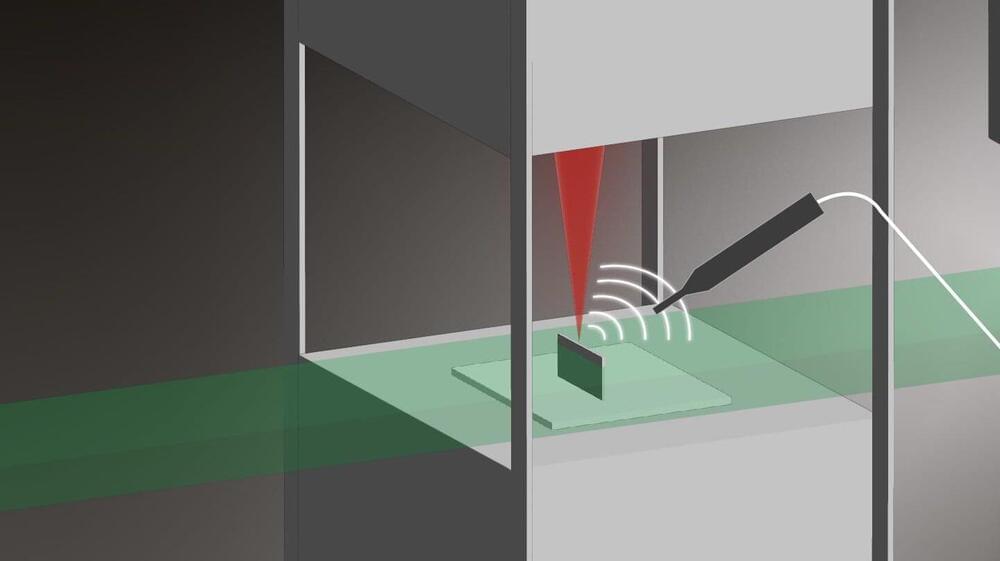

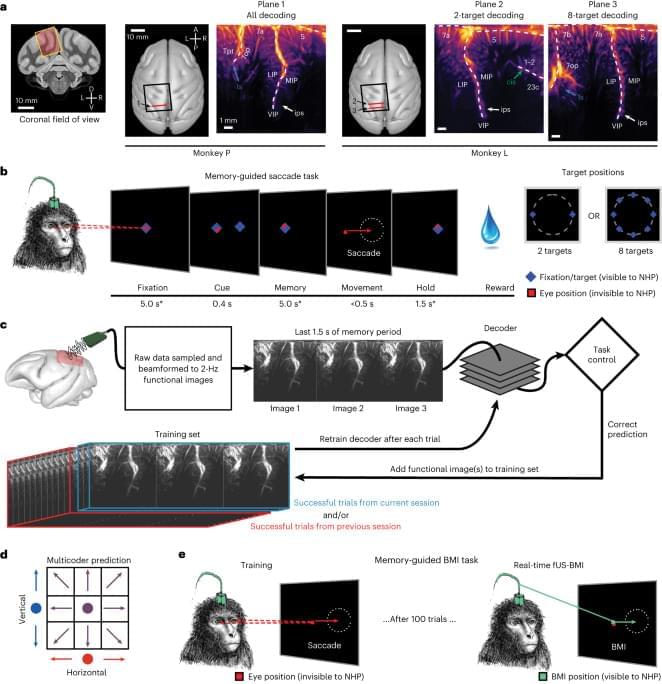

BMIs using intracortical electrodes, such as Utah arrays, are particularly adept at sensing fast-changing (millisecond-scale) neural activity from spatially localized regions (on the order of 1 cm) during behavior or stimulation correlated with activity in such spatially specific regions, for example, M1 for motor tasks and V1 for vision. Intracortical electrodes, however, struggle to track individual neurons over longer periods of time, for example, between subsequent recording sessions15,16. Consequently, decoders are typically retrained every day15. Ultrasound faces a similar problem of identifying the same neural populations across sessions, for example, because the field of view shifts between experimental sessions. In the current study, we demonstrated an alignment method that stabilizes image-based BMIs across more than a month and decodes from the same neurovascular populations with minimal, if any, retraining. This is a critical development: it enables easy alignment of a previous day's models to a new day's data and allows decoding to begin with little to no new training data.

Much effort has focused on ways to recalibrate intracortical BMIs across days without extensive new data18,19,20,21,22,23. Most of these methods require identifying neural manifolds and/or latent dynamical parameters, and collecting new neural and behavioral data to align to these manifolds or parameters. To date, these techniques are tailored to each research group's specific applications and have varying requirements, such as hyperparameter tuning of the model23 or a consistent temporal structure of the data22. They are also susceptible to functional, not only anatomical, changes. For example, 'out-of-manifold' learning or plasticity alters the manifold24 in ways that many alignment techniques struggle to address. Finally, some of these algorithms are computationally expensive and/or difficult to implement for online use22.

In contrast to these manifold-based methods, our decoder alignment algorithm leverages the intrinsic spatial resolution and field of view of fUS neuroimaging to stabilize decoders in a way that is intuitive, repeatable and performant. We used a single fUS frame (∼500 ms) to generate an image of the current session's anatomy and aligned a previous session's field of view to this single image. Notably, the alignment did not require any additional behavioral data. Because it relies only on anatomy, our decoder alignment is robust, can use any off-the-shelf image alignment tool and remains valid as long as the anatomy and the mesoscopic encoding of relevant variables do not change drastically between sessions.
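As an illustration of aligning a previous session's field of view to one current frame, the sketch below uses phase correlation as a stand-in for "any off-the-shelf alignment tool"; the text does not name the tool actually used. It assumes a pure integer-pixel, in-plane translation with wrap-around edges, and the function names `estimate_shift` and `align_to_current` are hypothetical.

```python
import numpy as np


def estimate_shift(reference: np.ndarray, moving: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (row, col) shift that aligns `moving` to `reference`
    by phase correlation, assuming a pure in-plane translation (hypothetical helper)."""
    # The normalized cross-power spectrum keeps only phase, i.e. relative position.
    cross_power = np.fft.fft2(reference) * np.conj(np.fft.fft2(moving))
    cross_power /= np.abs(cross_power) + 1e-12
    # Its inverse FFT peaks at the displacement between the two images.
    corr = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative (wrapped-around) shifts.
    dy, dx = (int(p) - n if p > n // 2 else int(p) for p, n in zip(peak, corr.shape))
    return dy, dx


def align_to_current(previous: np.ndarray, current_frame: np.ndarray):
    """Move a previous session's image (or any pixel-wise map) into the current
    session's field of view, using only a single current frame (hypothetical helper)."""
    shift = estimate_shift(current_frame, previous)
    # np.roll wraps pixels around the edges; a real pipeline would pad or mask instead.
    return np.roll(previous, shift, axis=(0, 1)), shift


rng = np.random.default_rng(0)
previous_anatomy = rng.random((128, 96))  # stand-in for a previous session's Doppler image
current_frame = np.roll(previous_anatomy, (3, -5), axis=(0, 1))  # same anatomy, shifted view
aligned, shift = align_to_current(previous_anatomy, current_frame)
print(shift)  # (3, -5)
```

Only the anatomy enters this step, so no behavioral data are needed; the same estimated shift can then be applied to any pixel-wise quantity from the earlier session.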

It remains an open question how much the precise positioning of the ultrasound transducer during each session matters for decoder performance, especially out-of-plane shifts or rotations. In the current experiments, we used linear decoders that assumed that a given image pixel corresponds to the same brain voxel across all aligned sessions. To minimize disruptions to this pixel–voxel relationship, we performed image alignment within the 2D imaging plane. Because we could image only a single 2D recording plane, we did not correct for any out-of-plane brain shifts between sessions that would have disrupted the pixel–voxel mapping assumption. Future fUS-BMI decoders may benefit from three-dimensional (3D) models of the neurovasculature, for example, registering the 2D field of view to a 3D volume25,26,27 to better maintain a consistent pixel–voxel mapping.
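To make the pixel–voxel assumption concrete, here is a toy sketch of a linear decoder whose weights are indexed by pixel. It assumes the in-plane shift between sessions is already known (for example, from an image registration step), integer-valued and circular; the names `decode` and `align_weights` are hypothetical, and real fUS decoders and data are not modeled here.

```python
import numpy as np


def decode(weights: np.ndarray, frame: np.ndarray, bias: float = 0.0) -> float:
    """Toy linear decoder: a weighted sum over pixels, so weights[i, j] is only
    meaningful if pixel (i, j) images the same brain voxel as during training."""
    return float(np.sum(weights * frame) + bias)


def align_weights(weights: np.ndarray, shift: tuple[int, int]) -> np.ndarray:
    """Move a previous session's pixel-wise weight map by the in-plane (row, col)
    shift measured between sessions (hypothetical helper). np.roll wraps around
    the edges; a real pipeline would more likely pad or mask such pixels."""
    return np.roll(weights, shift, axis=(0, 1))


rng = np.random.default_rng(1)
weights_old = rng.normal(size=(64, 64))  # decoder trained on an earlier session
frame_old = rng.normal(size=(64, 64))    # a frame from that earlier session
shift = (4, -7)                          # in-plane displacement, assumed known
frame_new = np.roll(frame_old, shift, axis=(0, 1))  # same voxels, new pixel positions

y_old = decode(weights_old, frame_old)
y_naive = decode(weights_old, frame_new)                          # mapping broken
y_aligned = decode(align_weights(weights_old, shift), frame_new)  # mapping restored
```

Moving the weight map restores the original output without retraining because each weight again multiplies the same voxel's signal. An out-of-plane shift, by contrast, changes which voxels are imaged at all, so no in-plane shift of this kind can undo it.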