Rufo Guerreschi.

https://www.linkedin.com/in/rufoguerreschi

Coalition for a Baruch Plan for AI

https://www.cbpai.org/

0:00 Intro.

0:21 Rufo Guerreschi.

0:28 Contents.

0:41 Part 1: Why we have a governance problem.

1:18 From e-democracy to cybersecurity.

2:42 Snowden showed that international standards were needed.

3:55 Taking the needs of intelligence agencies into account.

4:24 ChatGPT was a wake-up moment for privacy.

5:08 Living in Geneva to interface with states.

5:57 Decision making is high up in government.

6:26 Coalition for a Baruch Plan for AI

7:12 Parallels to organizations to manage nuclear safety.

8:11 Hidden coordination between intelligence agencies.

8:57 Intergovernmental treaties are not tight.

10:19 The original Baruch Plan in 1946

11:28 Why the original Baruch Plan did not succeed.

12:27 We almost had a different international structure.

12:54 A global monopoly on violence.

14:04 Could expand to other weapons.

14:39 AI is a second opportunity for global governance.

15:19 After Soviet tests, there was no secret to keep.

16:22 Proliferation risk of AI tech is much greater?

17:44 Scale and timeline of AI risk.

19:04 Capabilities of security agencies.

20:02 Internal capabilities of leading AI labs.

20:58 Governments care about impactful technologies.

22:06 Government compute, risk, other capabilities.

23:05 Are domestic labs outside their jurisdiction?

23:41 What are the timelines where change is required?

24:54 Scientists, Musk, Amodei.

26:24 Recursive self-improvement and loss of control.

27:22 A grand gamble, the rosy perspective of CEOs.

28:20 CEOs can’t really say anything else.

28:59 Altman, Trump, SoftBank pursuing superintelligence.

30:01 Superintelligence is clearly defined by Nick Bostrom.

30:52 Explain to people what “superintelligence” means.

31:32 Jobs created by Stargate project?

32:14 Will centralize power.

33:33 Sharing of the benefits needs to be ensured.

34:26 We are running out of time.

35:27 Conditional treaty idea.

36:34 Part 2: We can do this without a global dictatorship.

36:44 Dictatorship concerns are very reasonable.

37:19 Global power is already highly concentrated.

38:13 We are already in a surveillance world.

39:18 Affects influential people especially.

40:13 Surveillance is largely unaccountable.

41:35 Why did this machinery of surveillance evolve?

42:34 Shadow activities.

43:37 Choice of safety vs liberty (privacy)

44:26 How can this dichotomy be rephrased?

45:23 Revisit supply chains and lawful access.

46:37 Why the government broke all security at all levels.

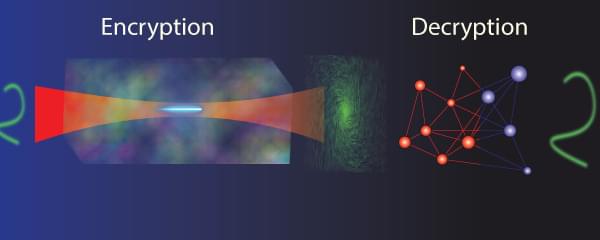

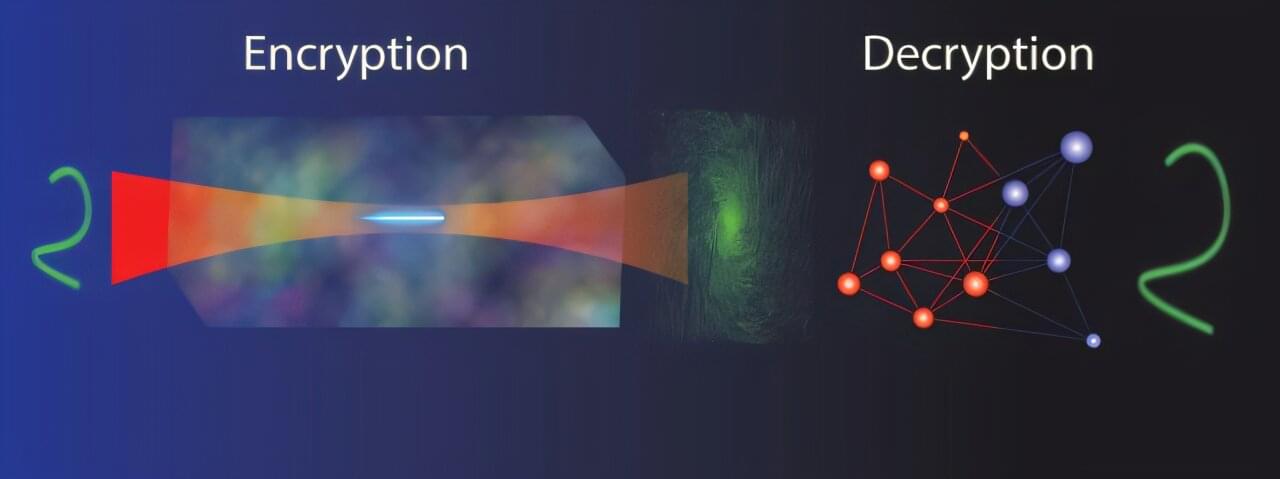

47:17 The encryption wars and export controls.

48:16 Front door mechanism replaced by back door.

49:21 The world we could live in.

50:03 What would responding to requests look like?

50:50 Apple may be leaving “bug doors” intentionally.

52:23 Apple under same constraints as government.

52:51 There are backdoors everywhere.

53:45 China and the US need to both trust AI tech.

55:10 Technical debt of past unsolved problems.

55:53 Actually a governance debt (socio-technical)

56:38 Provably safe or guaranteed safe AI

57:19 Requirement: Governance plus lawful access.

58:46 Tor, Signal, etc. are often wishful thinking.

59:26 Can restructure incentives.

59:51 Restrict proliferation without dragnet?

1:00:36 Physical plus focused surveillance.

1:02:21 Dragnet surveillance since the telegraph.

1:03:07 We have to build a digital dog.

1:04:14 The dream of cyber libertarians.

1:04:54 Is the government out to get you?

1:05:55 Targeted surveillance is more important.

1:06:57 A proper warrant process leveraging citizens.

1:08:43 Just like procedures for elections.

1:09:41 Use democratic system during chip fabrication.

1:10:49 How democracy can help with technical challenges.

1:11:31 Current world: anarchy between countries.

1:12:25 Only those with the most guns and money rule.

1:13:19 Everyone needing to spend a lot on military.

1:14:04 AI also engages states in a race.

1:15:16 Anarchy is not a given: US example.

1:16:05 The forming of the United States.

1:17:24 This federacy model could apply to AI

1:18:03 Same idea was even proposed by Sam Altman.

1:18:54 How can we maximize the chances of success?

1:19:46 Part 3: How to actually form international treaties.

1:20:09 Calling for a world government scares people.

1:21:17 Genuine risk of global dictatorship.

1:21:45 We need a world /federal/ democratic government.

1:23:02 Why people are not outspoken.

1:24:12 Isn’t it hard to get everyone on one page?

1:25:20 Moving from anarchy to a social contract.

1:26:11 Many states have very little sovereignty.

1:26:53 Different religions didn’t prevent common ground.

1:28:16 China and US political systems similar.

1:30:14 Coming together, values could be better.

1:31:47 Critical mass of states.

1:32:19 The Philadelphia convention example.

1:32:44 Start with, say, seven states.

1:33:48 Date of the US constitutional convention.

1:34:42 US and China both invited but only together.

1:35:43 Funding will make a big difference.

1:38:36 Lobbying the US and China.

1:38:49 Conclusion.

1:39:33 Outro