It seems like every day we’re warned about a new, AI-related threat that could ultimately bring about the end of humanity. According to author and Oxford professor Nick Bostrom, those existential risks aren’t so black and white, and an individual’s ability to influence those risks might surprise you.

Image Credit: TED

Bostrom defines an existential risk as one that threatens the extinction of Earth-originating life or the permanent and drastic destruction of our future development, but he also notes that no single methodology applies to all the different existential risks (as elaborated more technically in this Future of Humanity Institute study). Rather, he considers it an interdisciplinary endeavor.

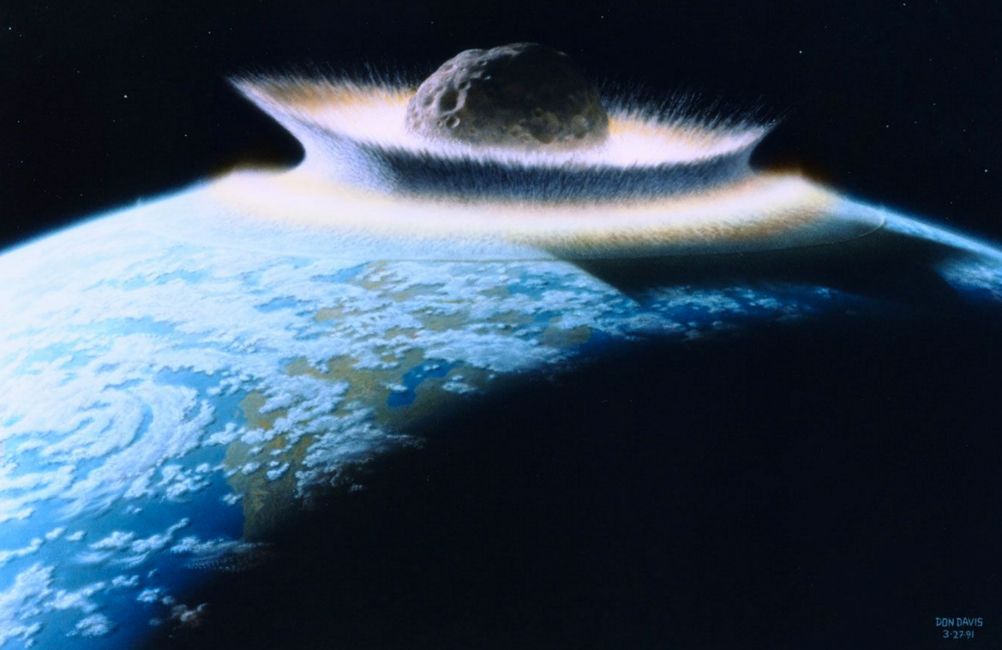

“If you’re wondering about asteroids, we have telescopes we can study them with, we can look at past crater impacts and derive hard statistical data on that,” he said. “We find that the risk of asteroids is extremely small and likewise for a few of the other risks that arise from nature. But other really big existential risks are not in any direct way susceptible to this kind of rigorous quantification.”

In Bostrom’s eyes, the most significant risks we face arise from human activity, particularly the potentially dangerous technological discoveries that await us in the future. Though he believes there’s no way to quantify the possibility of humanity being destroyed by a super-intelligent machine, he considers human judgment the more important variable. To improve the assessment of existential risk, Bostrom said we should think carefully about how these judgments are produced and whether the biases that affect them can be avoided.

“If your task is to hammer a nail into a board, reality will tell you if you’re doing it right or not. It doesn’t really matter if you’re a Communist or a Nazi or whatever crazy ideologies you have, you’ll learn quite quickly if you’re hammering the nail in wrong,” Bostrom said. “If you’re wrong about what the major threats are to humanity over the next century, there is not a reality check to tell you if you’re right or wrong. Any weak bias you might have might distort your belief.”

Noting that humanity doesn’t really have any policy designed to steer a particular course into the future, Bostrom said many existential risks arise from global coordination failures. While he believes society might one day evolve into a unified global government, the question of when this uniting occurs will hinge on individual contributions.

“Working toward global peace may not be the best project, just because it’s very difficult to make a big difference there if you’re a single individual or a small organization. Perhaps your resources would be better put to use if they were focused on some problem that is much more neglected, such as the control problem for artificial intelligence,” Bostrom said. “(For example) do the technical research to figure out how to ensure that, if we got the ability to create superintelligence, the outcome would be safe and beneficial. That’s where an extra million dollars in funding or one extra very talented person could make a noticeable difference… far more than doing general research on existential risks.”

Looking to the future, Bostrom feels there is an opportunity to show that serious research can change global awareness of existential risks and bring them into a wider conversation. While that research doesn’t assume the human condition is fixed, there is a growing ecosystem of people who are genuinely trying to figure out how to save the future, he said. To illustrate the limits of how much influence any one person can have in reducing existential risk, Bostrom noted that many people in history have believed they were Napoleon, yet there was actually only one Napoleon.

“You don’t have to try to do it yourself… it’s usually more efficient to each do whatever we specialize in. For most people, the most efficient way to contribute to eliminating existential risk would be to identify the most efficient organizations working on this and then support those,” Bostrom said. “Given the values on the line, in terms of how many happy lives could exist in humanity’s future, even a very small probability of impact on that would probably be worth pursuing.”