Is working to pioneer the full scope of everything that exists a duty? I have been contemplating aspects of that question for some years now. Here I move in the direction of articulating its nature and making the case by drawing out correlations with life extension and Immanuel Kant’s thoughts in The Metaphysical Elements of Ethics.

“IV. What are the Ends which are also Duties? They are: A. Our own perfection, B. Happiness of others.”

Kant's notion of the “categorical imperative” is that of a universally applicable, non-contradictory, absolute necessity that anyone can grasp through pure practical reason, without having to experience it or be taught it.

He says that “ethics may also be defined as the system of the ends of the pure practical reason.”

Perfection is doing what’s necessary, virtuous, moral, ethical and so forth, and doing it well, but it’s more of a direction to move in than a destination to be at.

He says it is adding to the happiness of others that is necessary, not our own happiness. But if pain, poverty and the like befall us, it is our duty to remedy them. We do this not for the sake of our happiness, but to keep our moral agency functioning properly in pursuit of our own perfection and the happiness of others.

“That this beneficence is a duty results from this: that since our self-love cannot be separated from the need to be loved by others (to obtain help from them in case of necessity), we therefore make ourselves an end for others; and this maxim can never be obligatory except by having the specific character of a universal law, and consequently by means of a will that we should also make others our ends. Hence the happiness of others is an end that is also a duty.”

It is the pursuit of one's own perfection that already covers personal happiness. If we took our own happiness on as an end, it would be unstructured, superfluous and generally unconcerned with imperative ends. That time could instead go toward a more fulfilling and consequential kind of satisfaction: the sense of uprightness and accomplishment, and the humanity-scale progress and security, that come from contributing to and belonging to a happier collective while pursuing perfection. As people like Viktor Frankl and Abraham Maslow have told us, the fulfillment derived from contributing to the progress of humanity takes a person to a level beyond happiness and is even capable of eliminating suffering. Kant says:

“For he who is to feel himself happy in the mere consciousness of his uprightness already possesses that perfection which in the previous section was defined as that end which is also duty.”

To make others happy is not so much to go out of one's way to shower them with greatness as it is to make sure that you aren't the cause of a deficit in it. It's just as easy to keep the peace. Any joy we impart beyond that is a bonus. A needlessly rude neighbor, for instance, is neglecting their duty because they are throwing off people's focus, rhythm, productivity or whatever it may be.

“Moral well-being of others […] is our duty to promote, but only a negative duty […] it is my duty not to give him occasion of stumbling.”

Kant says you can't simply consider reasoned priorities that urgently need to get done and then forget about them, because a pure practical rational end demands action. It is unethical to bring such ends to mind and not move toward them by acting. Doing so would mean we are not free, because a free person internally compels themselves to get done what they know is indispensable.

Most of the industrialized world is happy to be free to work for enjoyable things: games to play, skills to hone, prestige to build, vacations to go on, and so forth. We are not truly free, though, when we are unable to respond to these duties of self-perfection and the happiness of others.

“The man, for example, who is of sufficiently firm resolution and strong mind not to give up an enjoyment which he has resolved on, however much loss is shown as resulting therefrom, and who yet desists from his purpose unhesitatingly, though very reluctantly, when he finds that it would cause him to neglect an official duty or a sick father; this man proves his freedom in the highest degree by this very thing, that he cannot resist the voice of duty.”

If people cannot trade in some jet ski vacations, 5% of their dart league time and 25% of their television time to engage the necessity of the survival and advancement of humanity, for the sake of removing absurdity and waste from the core of our existence, then they are slaves to dart league and television and are neglecting duty. Those things have shackled them, taken control of their minds and are holding them back against their will. They need to find the source of those obstacles and remove them.

“all duty is necessitation or constraint”

It is a sine qua non, indispensable, for us to know what's going on and to constrain ourselves from spending too much of our time on things that distract us from figuring it out. We can't do it right if we don't know what it is we need to be doing right.

Since the world's flow of energy is generally preoccupied with these trends, hobbies and grunt labors, it can be hard to reason this stuff through. That leads a lot of people to take shortcuts and let others think for them. Sometimes we're just mentally lazy. It's dangerous to close our eyes and let others lead us, yet when we are challenged to look and think things through, we tend to get defensive. “Thinking is stupid, don't think a lot. Just say what pops into your head, that's obviously best. If I have to exchange more than two sentences about a profound topic then I'm going to insult you with as much underhanded passive aggression as I can muster.”

If people cannot see, or are not wary enough to notice, the obstacles they are stumbling over or stuck on, it then falls to those around them to counteract that. We do this not through force or demand, but by enabling them to overcome those obstacles in whatever ways we can. It's a categorical imperative because if everybody stumbled and nobody cleared and lit paths, progress would be wasted, overall happiness would suffer, and it would be harder for anyone to pursue perfection in such a world. The Sumerians wouldn't have shown writing to the cultures around them, fire making and agriculture wouldn't have been passed down, and the steam engine wouldn't have kicked off the industrial revolution.

“Now I may be forced by others to actions which are directed to an end as means, but I cannot be forced to have an end; I can only make something an end to myself.”

As Kant explains, and as many others tell us as well, you must let people make duty their own end; it doesn't work well enough when it comes from things like laws or customs.

People need policies, not commands, that promote awareness of their need to figure out what is going on, so they can know what ultimately to do. People at the city and state levels can vote in policies supplying X amount of supporting infrastructure without having to issue commands. Ethical pressure on the FCC and on broadcasting directors can push to get enough information about it on air. Kids' books, libraries, monuments, songs and slogans, scholarships, movies, banners: there are all kinds of spaces where ethics can inspire influential promotional action that helps people realize their duty.

“To every duty corresponds a right of action”

That's an interesting concept to consider, and I find that I agree: every person has a right to act on duty. Not only is it ethical to make people happy, everyone has a right to be able to make people happy. A person would be denied a substantial part of their right to engage their duty to perfection if language were kept from them or all their earnings were taxed away. No one deserves to be rendered a “Raphael without hands,” as they say.

What, then, is the general, fundamental thing preventing everyone's perfection? What is the name of the limb that all of us Raphaels are missing? If you did arrive at perfection, how would you know? It couldn't be known. The world isn't in the right position for that yet. To achieve perfection, an understanding of the nature of existence is needed. What is going on in the full scope of reality needs to be known. That's our missing limb: we don't know what is going on. Our vision of the playing field and the game is missing. Figuring out what is going on in the full scope of reality is our most important duty; it is the path to perfection. If we didn't work to know what is going on, what to do could never be known. If we don't know, and don't try to know, what to do, only lives of absurdity and waste are possible. Neglect that renders life an absurd waste is anything but the pursuit of perfection, and who would consciously choose or promote that?

Kant quotes a line by Albrecht von Haller that makes a good summary of much of what he is saying: “With all his failings, man is still / better than angels void of will.”

I would say that for all their troubles, freedom fighters are still better off than the slave by choice, with no voice and no will. It's unbecoming of an animal with the superpower of intellectual sentience to forgo its use. Our responsibilities in life aren't something to sleep through.

“Virtue then is the moral strength of a man's will in his obedience to duty […] strength is requisite, and the degree of this strength can be estimated only by the magnitude of the hindrances which man creates for himself, by his inclinations. [We valiantly fight those internal and external monsters that keep us from duty] wherefore this moral strength as fortitude constitutes the greatest and only true martial glory of man; it is also called the true wisdom, namely, the practical, because it makes the ultimate end of the existence of man on earth its own end. Its possession alone makes man free, healthy, rich, a king, etc., nor can either chance or fate deprive him of this, since he possesses himself, and the virtuous cannot lose his virtue.”

That's right: being completely virtuous, moral and ethical isn't what makes us great, because it is likely that none of us are or can be. It is taking the time to recognize what's right, and committing ourselves to move in its direction, that makes us great martial activists, free and wise Kings and Queens fighting for the glory inherent in the ends of the human condition. Nothing can take away your work and struggle for the morally and ethically virtuous, categorically imperative ends of life. You will either take your place as a burning star in the great pathways of causes across the skies of history (and like all stars you will cast a tremendous swath of light across the universe in all directions, whether or not anybody sees you from a given point), or you will be one of those lucky enough to be there when the world's collective virtue helps lay the first train tracks to the future. You might ride that path of stars toward the full realization of it all with a pocket full of intergalactic nickels and a backpack full of dreams. Either way, you've done your job, and that's all you can do. All the success possible to you will be yours when you heed duty.

All in all, it seems that everyone is inherently endowed with the right to live in a world where everyone tries as hard as they can to wield the tremendous and wise power of setting personal, non-binding and tentative societal policies. Such policies enable, but do not force, the removal of any impediments that may hold a person back from pursuing perfection, or hold anyone else back from being happy, so that we all have the opportunity to fill out our fullest, richest volume of potential.

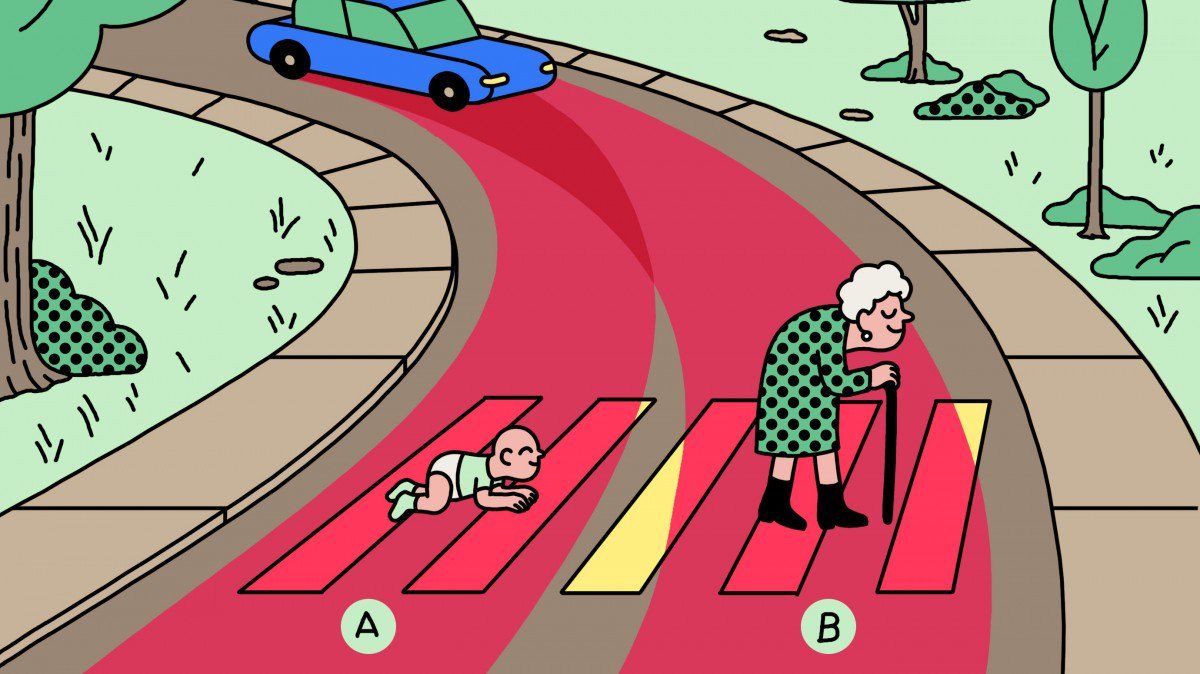

Duty to perfection involves knowing what is going on so we can know what to do. It is an absolute necessity because, as long as what is going on remains unknown, we have no idea whether there are crucial things to be done. This fundamental necessity can be brought to our attention through pure practical reasoning. To know what is going on, the full scope of existence needs to be pioneered, and that cannot be done without everyone pitching in to secure an indefinite amount of time to make it happen. Hence indefinite life extension and the pioneering of the full scope of existence are categorical imperatives, and therefore ethical duties.