A problem that has long been a focal point of research on the famous Game of Life has finally been solved.

Introduction and objective: Video games are crucial to the entertainment industry; nonetheless, they can be difficult to access for people with severe motor disabilities. Brain-computer interface (BCI) systems have the potential to help these individuals by allowing them to control video games using their brain signals. Furthermore, multiplayer BCI-based video games may provide valuable insights into how competitiveness or motivation affects the control of these interfaces. Despite recent advances in the use of code-modulated visual evoked potentials (c-VEPs) as control signals for high-performance BCIs, to the best of our knowledge, no studies have developed a BCI-driven video game using c-VEPs, even though c-VEPs could enhance the user experience as an alternative control method. Thus, the main goal of this work was to design, develop, and evaluate a version of the well-known ‘Connect 4’ video game using a c-VEP-based BCI, allowing 2 users to compete by aligning 4 same-colored coins vertically, horizontally, or diagonally.

Methods: The proposed application consists of a multiplayer video game controlled by a real-time BCI system that processes 2 electroencephalograms (EEGs) sequentially. To detect user intention, the columns in which a coin can be placed were encoded with shifted versions of a pseudorandom binary code, following a traditional circular shifting c-VEP paradigm. To analyze the usability of our application, the experimental protocol comprised an evaluation session with 22 healthy users. First, each user performed individual tasks. Afterward, users were paired and the application was used in competitive mode. These tasks were used to assess the accuracy and speed of selection. In addition, qualitative data on satisfaction and usability were collected through questionnaires.
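The circular shifting idea can be illustrated with a minimal sketch. It is not the authors' implementation. The 63-bit m-sequence, the 7-column board, the 9-bit lag step, and all function names are assumptions made for illustration. A real c-VEP decoder correlates spatially filtered EEG with shifted response templates, whereas this sketch correlates binary codes directly.

```python
def m_sequence():
    """Return a 63-bit maximal-length sequence from a 6-bit LFSR.

    Assumed for illustration; recurrence s[n] = s[n-5] XOR s[n-6].
    """
    state = [1, 0, 0, 0, 0, 0]
    bits = []
    for _ in range(63):
        bits.append(state[-1])
        feedback = state[-1] ^ state[-2]
        state = [feedback] + state[:-1]
    return bits


def circular_shift(code, lag):
    """Rotate the code to the right by `lag` bits."""
    lag %= len(code)
    split = len(code) - lag
    return code[split:] + code[:split]


def column_templates(code, n_columns=7):
    """Assign each column the same code, delayed by a different lag."""
    lag_step = len(code) // n_columns  # 9 bits for 63 bits and 7 columns
    return [circular_shift(code, col * lag_step) for col in range(n_columns)]


def decode_column(response, templates):
    """Pick the column whose template correlates best with the response."""
    def correlation(a, b):
        # Map bits {0, 1} to {-1, +1} before correlating.
        return sum((2 * x - 1) * (2 * y - 1) for x, y in zip(a, b))

    scores = [correlation(response, template) for template in templates]
    return scores.index(max(scores))


code = m_sequence()
templates = column_templates(code)

# Simulate a user attending column 3, with a few corrupted samples.
observed = templates[3][:]
for i in range(5):
    observed[i] ^= 1
print(decode_column(observed, templates))
```

Because an m-sequence correlates strongly with itself only at zero lag, a single code can label every column, and the decoder only has to estimate the lag.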

Results: The average accuracy achieved was 93.74% ± 1.71%, with 5.25 seconds per selection. The questionnaires showed that users perceived a minimal workload. Likewise, high satisfaction values were obtained, highlighting that the application was intuitive and responded quickly and smoothly.

Lex Fridman Podcast full episode: https://www.youtube.com/watch?v=CGiDqhSdLHk

Please support this podcast by checking out our sponsors:
- NetSuite: http://nets…



We’re almost certainly the first technological civilization on Earth. But what if we’re not? We are. Although how sure are we, really? Enter the Silurian hypothesis, which asks whether pre-human industrial civilizations might have existed.



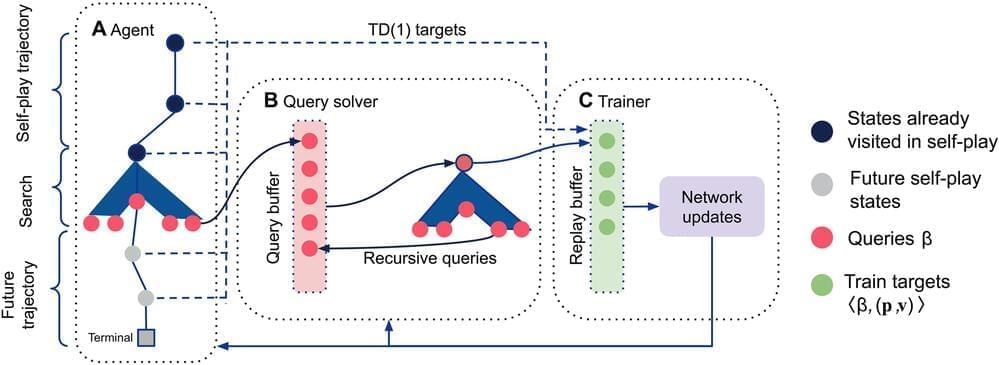

A team of AI researchers from EquiLibre Technologies, Sony AI, Amii and Midjourney, working with Google’s DeepMind project, has developed an AI system called Student of Games (SoG) that is capable of both beating humans at a variety of games and learning to play new ones. In their paper published in the journal Science Advances, the group describes the new system and its capabilities.

Over the past half-century, computer scientists and engineers have developed the idea of machine learning and artificial intelligence, in which human-generated data is used to train computer systems. The technology has applications in a variety of scenarios, one of which is playing board and/or parlor games.

Teaching a computer to play a board game and then improving its capabilities to the degree that it can beat humans has become a milestone of sorts, demonstrating how far artificial intelligence has developed. In this new study, the research team has taken another step toward artificial general intelligence—in which a computer can carry out tasks deemed superhuman.

Backlash over using AI to redub video game lines.

Naruto’s latest fighting game faces criticism for some questionable voiceover lines, leading to accusations of AI manipulation.

Naruto X Boruto: Ultimate Ninja Storm Connections is the newest brawler based on the hugely popular anime series (which itself adapts the hugely popular manga). Rather than purely adapting one part of the anime, however, Naruto X Boruto skims through almost the entire saga and adapts events already covered in previous games. But when comparing the new game’s dub to those previous entries, fans have been left confused.