No straps, no sockets: MIT team created a true bionic knee and successfully tested it on humans.

A new bionic knee allows amputees to walk faster, climb stairs more easily, and adroitly avoid obstacles, researchers reported in the journal Science.

The new prosthesis is directly integrated with the person’s muscle and bone tissue, enabling greater stability and providing more control over its movement, researchers said.

Two people equipped with the prosthetic said the limb felt more like a part of their own body, the study says.

By Heidi Hausse & Peden Jones/The Conversation

To think about an artificial limb is to think about a person.

I was born without lower arms and legs, so I’ve been around prosthetics of all shapes and sizes for as long as I can remember.

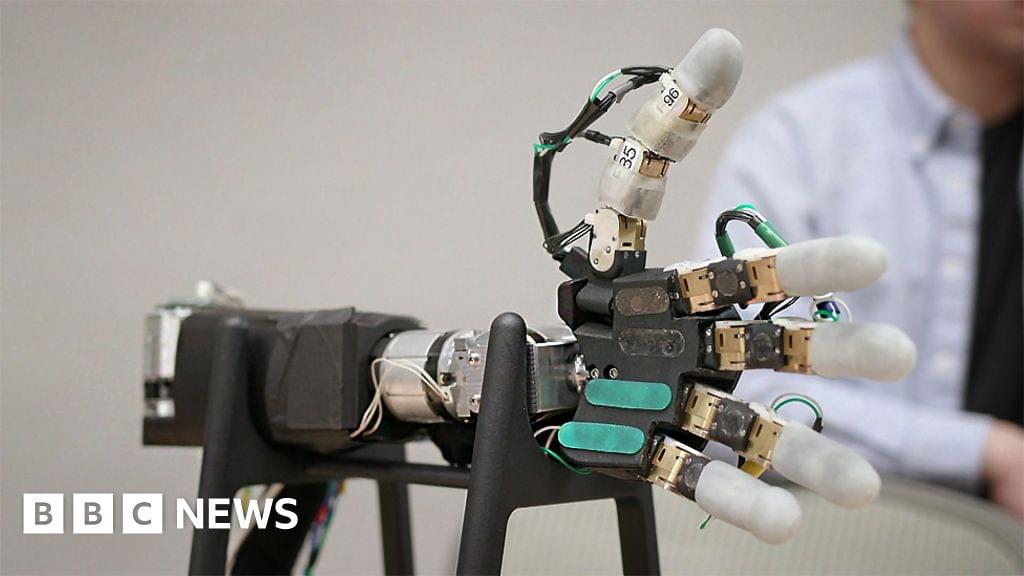

I’ve actively avoided those designed for upper arms for most of my adult life, so have never used a bionic hand before.

But when I visited a company in California, which is seeking to take the technology to the next level, I was intrigued enough to try one out — and the results were, frankly, mind-bending.

Prosthetic limbs have come a long way since the early days when they were fashioned out of wood, tin and leather.

Modern-day replacement arms and legs are made of silicone and carbon fibre, and increasingly they are bionic, meaning they have various electronically controlled moving parts to make them more useful to the user. (Feb 2024)

BBC Click reporter Paul Carter tries out a high-tech prosthetic promising a ‘full range of human motion’

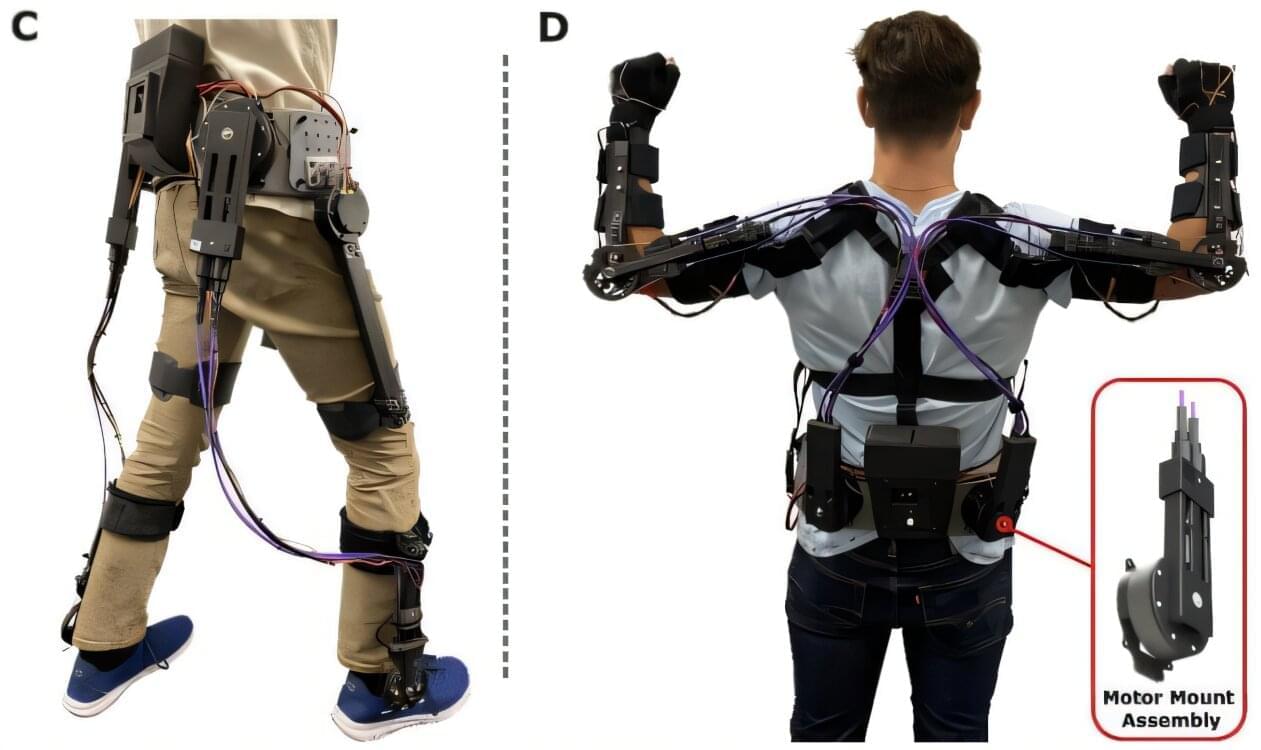

Imagine a future in which people with disabilities can walk on their own, thanks to robotic legs. A new project from Northern Arizona University is accelerating that future with an open-source robotic exoskeleton.

Right now, developing these complex electromechanical systems is expensive and time-consuming, which likely stops a lot of research before it ever starts. But that may soon change: Years of research from NAU associate professor Zach Lerner’s Biomechatronics Lab have led to the first comprehensive open-source exoskeleton framework, made freely available to anyone worldwide. It will help overcome several huge obstacles for potential exoskeleton developers and researchers.

An effective exoskeleton must be biomechanically beneficial to the person wearing it, which means that developing them requires extensive trial, error and adaptation to specific use cases.

Are human cyborgs the future? You won’t believe how close we are to merging humans with machines! This video uncovers groundbreaking advancements in cyborg technology, from bionic limbs and brain-computer interfaces to biological robots like anthrobots and exoskeletons. Discover how these innovations are reshaping healthcare, military, and even space exploration.

Learn about real-world examples, like Neil Harbisson, the colorblind cyborg artist, and the latest developments in brain-on-a-chip technology, combining human cells with artificial intelligence. Explore how cyborg soldiers could revolutionize the battlefield and how genetic engineering might complement robotic enhancements.

The future of human augmentation is here. Could we be on the verge of transforming humanity itself? Dive in to find out how science fiction is quickly becoming reality.

How do human cyborgs work? What are the latest AI breakthroughs in cyborg technology? How are cyborgs being used today? Could humans evolve into hybrid beings? This video answers all your questions. Don’t miss it!

Early brain development is a biological black box. While scientists have devised multiple ways to record electrical signals in adult brains, these techniques don’t work for embryos.

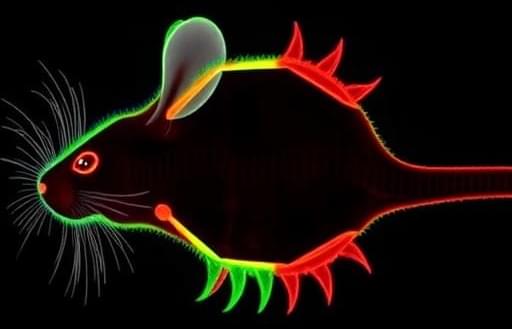

A team at Harvard has now managed to peek into the box—at least when it comes to amphibians and rodents. They developed an electrical array using a flexible, tofu-like material that seamlessly embeds into the early developing brain. As the brain grows, the implant stretches and shifts, continuously recording individual neurons without harming the embryo.

“There is just no ability currently to measure neural activity during early neural development. Our technology will really enable an uncharted area,” said study author Jia Liu in a press release.

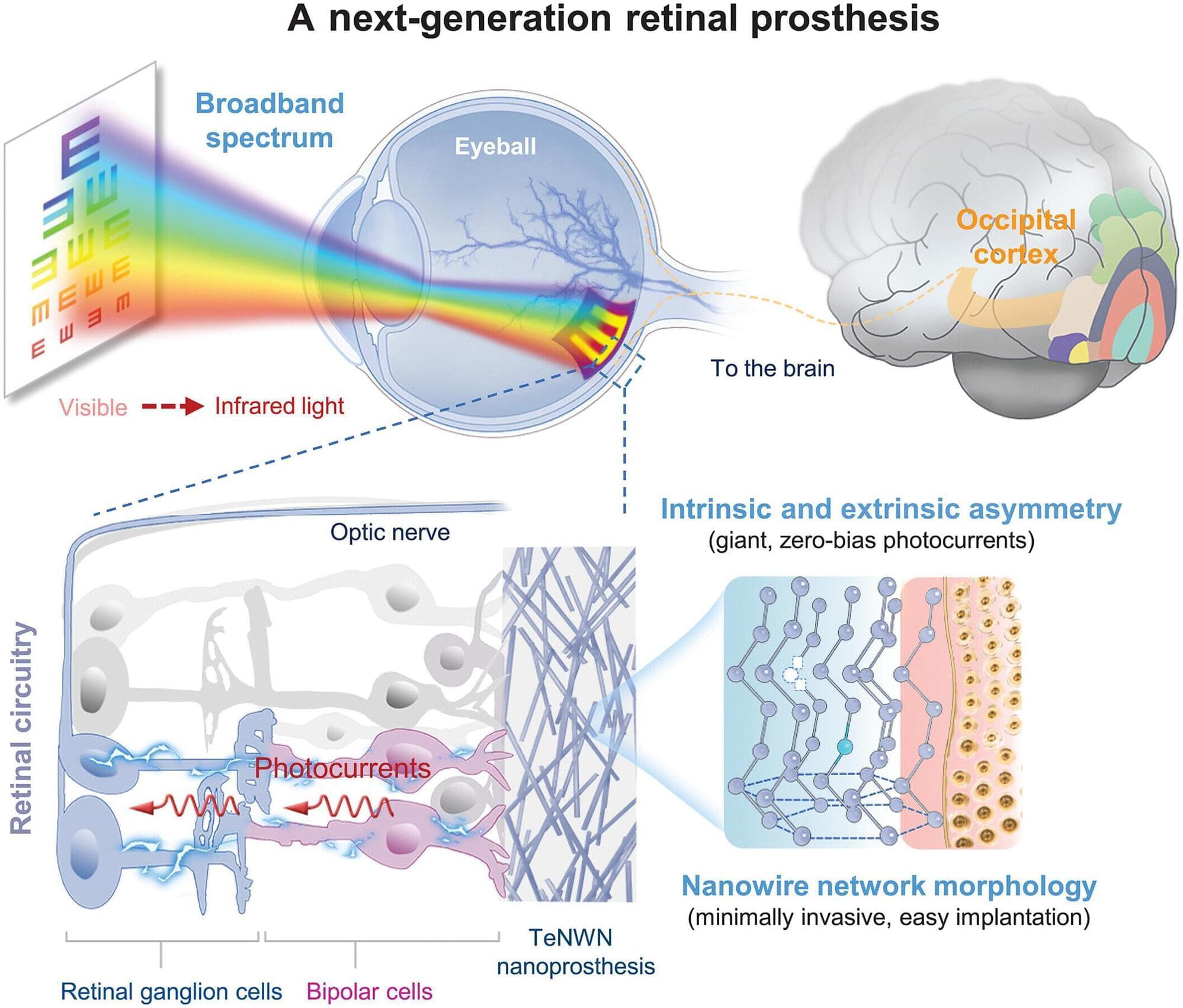

A team from Fudan University, the Shanghai Institute of Technical Physics, the Beijing University of Posts and Telecommunications and Shaoxin Laboratory, all in China, has developed a retinal prosthesis woven from metal nanowires that partially restored vision in blind mice.

In their paper published in the journal Science, the group describes how they created tellurium nanowires and interlaced them to create a retinal prosthesis. Eduardo Fernández, with University Miguel Hernández, in Spain, has published a Perspective piece in the same journal issue outlining the work done by the team on this new effort.

Finding a way to cure blindness has been a major goal for scientists for many years, and such efforts have paid off for some types of blindness, such as those caused by cataracts. Other types of blindness associated with damage to the retina, however, have proven too difficult to overcome in most cases. For this research, the team in China tried a new approach to treating such types of blindness by building a mesh out of a semiconductor and affixing it to the back of the eye, where it could send signals to the optic nerve.

A groundbreaking advancement in the field of vision restoration has recently emerged from the intersection of nanotechnology and biomedical engineering. Researchers have developed a novel retinal prosthesis constructed from tellurium nanowires, which has demonstrated remarkable efficacy in restoring vision to blind animal models. This innovative approach not only aims to restore basic visual function but also enhances the eye’s capability to detect near-infrared light, a development that holds promising implications for future ocular therapies.

The retina, a thin layer of tissue at the back of the eye, plays a crucial role in converting light into the electrical signals sent to the brain. In degenerative conditions affecting the retina, such as retinitis pigmentosa or age-related macular degeneration, this process is severely disrupted, ultimately leading to blindness. Traditional treatments have struggled with limitations such as electrical interference and limited long-term benefit. However, the introduction of a retinal prosthesis made from tellurium offers a fresh perspective on restoring vision.

Tellurium is a unique element known for its semiconductor properties, making it an excellent choice for developing nanostructured devices. The researchers carefully engineered tellurium nanowires and then integrated them into a three-dimensional lattice framework. This novel architecture facilitates easy implantation into the retina while enabling efficient conversion of both visible and near-infrared light into electrical impulses. By adopting this approach, the researchers ensured that the prosthesis would function effectively in various lighting conditions, a significant consideration for practical application in real-world scenarios.