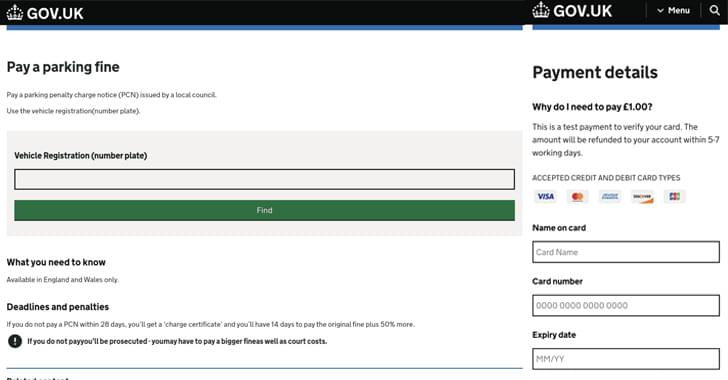

A US bank is warning customers of a security “intrusion” that may have compromised Mastercard account numbers and other financial data.

Maryland-based Eagle Bank says it has received a notice from Mastercard stating that an unnamed US merchant allowed unauthorized access to account information between August 15th, 2023, and May 25th, 2024.

The bank revealed the breach in a filing with the Massachusetts state government.