ToddyCat exploits ESET’s CVE-2024-11859 flaw with TCESB malware, bypassing security tools via DLL hijacking.

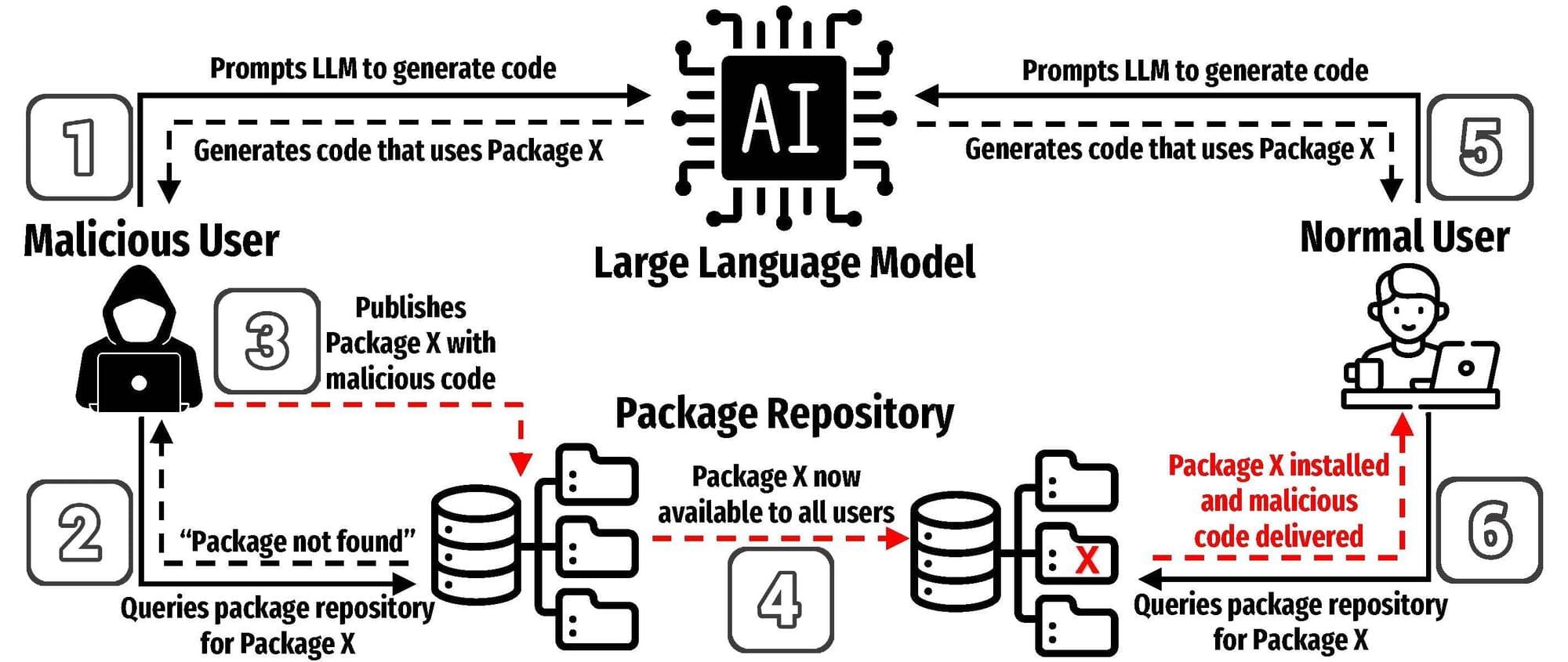

UTSA researchers recently completed one of the most comprehensive studies to date on the risks of using AI models to develop software. In a new paper, they demonstrate how a specific type of error could pose a serious threat to programmers who use AI to help write code.

Joe Spracklen, a UTSA doctoral student in computer science, led the study on how large language models (LLMs) frequently generate insecure code.

His team’s paper, published on the arXiv preprint server, has also been accepted for publication at the USENIX Security Symposium 2025, a cybersecurity and privacy conference.

A maximum-severity remote code execution (RCE) vulnerability has been discovered that affects all versions of Apache Parquet up to and including 1.15.0.

The problem stems from the deserialization of untrusted data that could allow attackers with specially crafted Parquet files to gain control of target systems, exfiltrate or modify data, disrupt services, or introduce dangerous payloads such as ransomware.

The vulnerability is tracked as CVE-2025-30065 and has a CVSS v4 score of 10.0. The flaw was fixed with the release of Apache Parquet version 1.15.1.
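
For teams auditing their dependencies, the affected range described above (every release up to and including 1.15.0, fixed in 1.15.1) can be expressed as a simple version comparison. The following is a minimal sketch, not an official detection tool: the function names `parse_version` and `is_affected` are hypothetical, and it assumes plain dotted numeric version strings with no suffixes such as `-SNAPSHOT`.

```python
# Illustrative helper (hypothetical names): flags Apache Parquet versions
# that fall in the affected range reported for CVE-2025-30065.
# Assumption: versions are plain dotted integers such as "1.15.0".

LAST_AFFECTED = (1, 15, 0)  # last affected release; 1.15.1 carries the fix


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '1.15.0' into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def is_affected(version: str) -> bool:
    """Return True if the version is at or below the last affected release."""
    parsed = parse_version(version)
    # Pad both tuples to the same length so that '1.15' compares like '1.15.0'.
    width = max(len(parsed), len(LAST_AFFECTED))
    padded = parsed + (0,) * (width - len(parsed))
    last = LAST_AFFECTED + (0,) * (width - len(LAST_AFFECTED))
    return padded <= last


if __name__ == "__main__":
    for candidate in ("1.14.3", "1.15.0", "1.15.1"):
        status = "affected" if is_affected(candidate) else "not affected"
        print(f"{candidate}: {status}")
```

A check like this only covers the version number. It does not tell you whether an application actually reads Parquet files from untrusted sources.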

The Hunters International Ransomware-as-a-Service (RaaS) operation is shutting down and rebranding, with plans to switch to data theft and extortion-only attacks.

As threat intelligence firm Group-IB revealed this week, the cybercrime group remained active despite announcing on November 17, 2024, that it was shutting down due to declining profitability and increased government scrutiny.

Hunters International then launched a new extortion-only operation, known as “World Leaks,” on January 1, 2025.