His vision is definitely achievable.

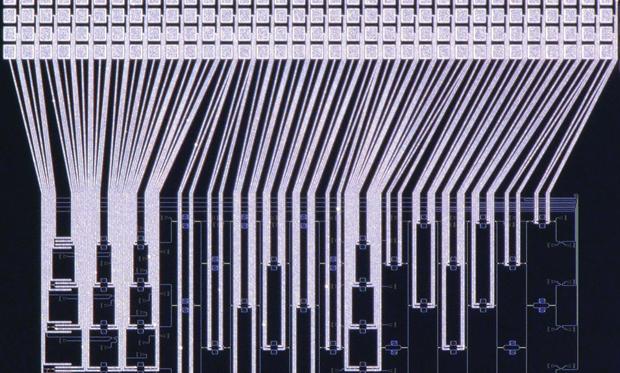

The future of airport transfer—in a pod.

World-renowned futurist Dr. James Canton envisions hotel experiences that include supersonic travel and DNA-driven spa treatments. So what can we expect in the next decade? Canton, a former Apple Computer executive, author and social scientist, worked with Hotels.com to present the Hotels of the Future Study at a recent conference in San Francisco. In the study, he describes hotels with everything from RoboButlers and virtual reality entertainment to restaurants based on gourmet genomics and neurotechnology that makes sleep more refreshing. Canton, who has advised three White House administrations and over 100 companies, believes these megatrends will shape the future of the hotel experience, and that the RoboButler is the change we will most likely see first. He also notes that plans are already underway for a supersonic hyperloop route from Los Angeles to New York City.