Peter Shor, the mathematician and poet who discovered Shor’s algorithm, answers questions about quantum computers and other mysteries.


Brain-machine interfaces, once the stuff of science fiction novels, are coming to a computer near you. The only question is: how soon? Although the technology is still in its infancy, it is already helping people with spinal cord injuries. Our authors examine its potential to be the ultimate game changer for a range of neurodegenerative diseases, as well as for our understanding of behavior, learning, and memory.

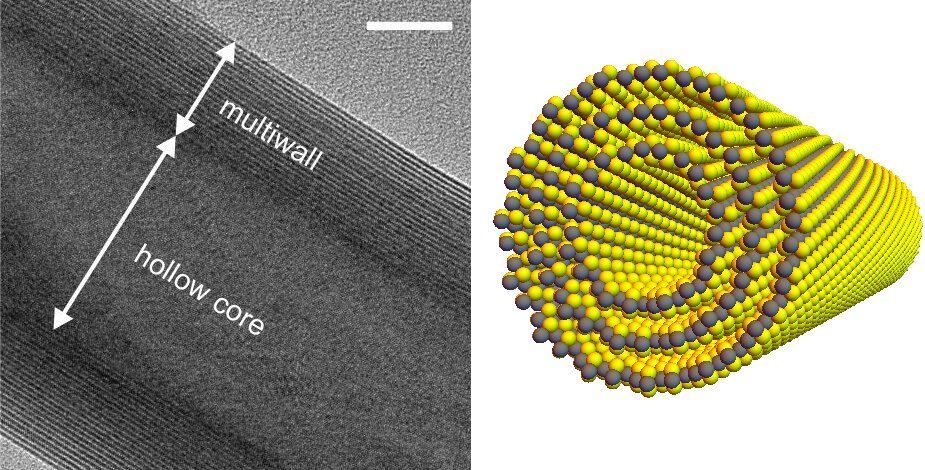

Physicists have discovered a new kind of nanotube that generates an electric current when exposed to light. Likely applications include optical sensors and infrared imaging chips, which could be useful in fields such as automated transport and astronomy. In the future, if the effect can be amplified and the technology scaled up, it could lead to high-efficiency solar power devices.

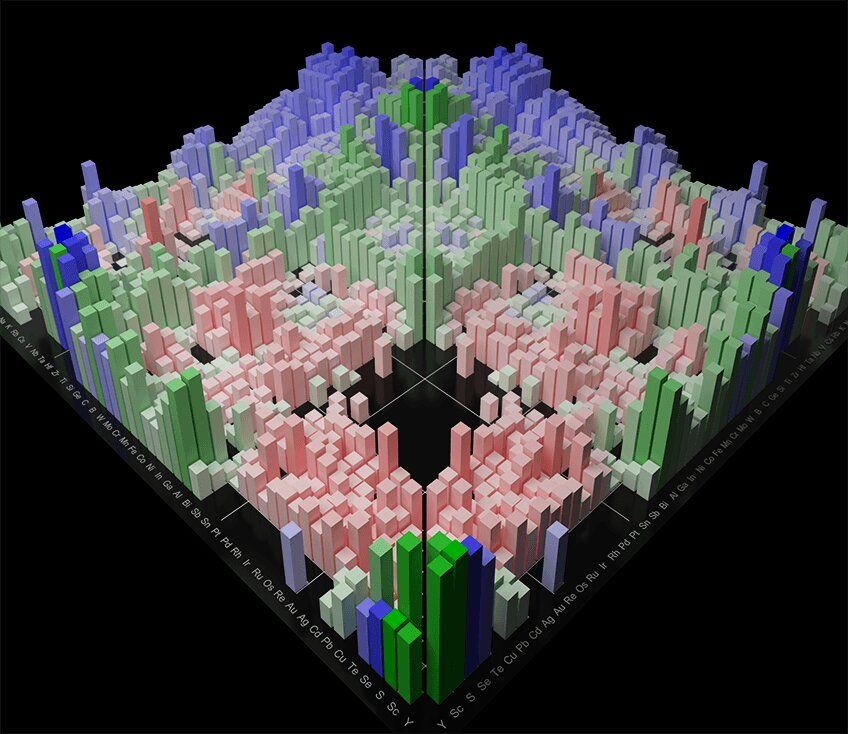

Andriy Zakutayev knows the odds of a scientist stumbling across a new nitride mineral are about the same as a ship happening upon a previously undiscovered landmass.

“If you find any nitride in nature, it’s probably in a meteorite,” said Zakutayev, a scientist at the U.S. Department of Energy’s (DOE’s) National Renewable Energy Laboratory (NREL).

Formed when metallic elements combine with nitrogen, nitrides can have unique properties, with potential applications ranging from semiconductors to industrial coatings. One nitride semiconductor served as the cornerstone of a Nobel Prize-winning technology for light-emitting diodes (LEDs). But before nitrides can be put to use, they must first be discovered, and now researchers have a map to guide them.

Ever wonder why some fortunate people eat chips, don’t exercise, and still don’t get clogged arteries? It could be because they’ve got lucky genes.

Now Alphabet (Google’s parent company) is bankrolling a startup that plans to spread these fortunate DNA variations through “one-time” injections of the gene-editing tool CRISPR.

Heart doctors involved in the effort say the DNA-tweaking injections could “confer lifelong protection” against heart disease.

A leading sci-fi writer takes stock of China’s global rise.

The success of Liu Cixin’s Three-Body trilogy has been credited with establishing sci-fi, once marginalized in China, as a mainstream taste. Liu believes that this trend signals a deeper shift in the Chinese mind-set: technological advances have spurred a new excitement about the possibilities of cosmic exploration. The trilogy commands a huge following among aerospace engineers and cosmologists; one scientist even wrote an explanatory guide, “The Physics of Three Body.” Some years ago, China’s aerospace agency asked Liu, whose first career was as a computer engineer in the hydropower industry, to speak to technicians and engineers about how “sci-fi thinking” could be harnessed to produce more imaginative approaches to scientific problems.

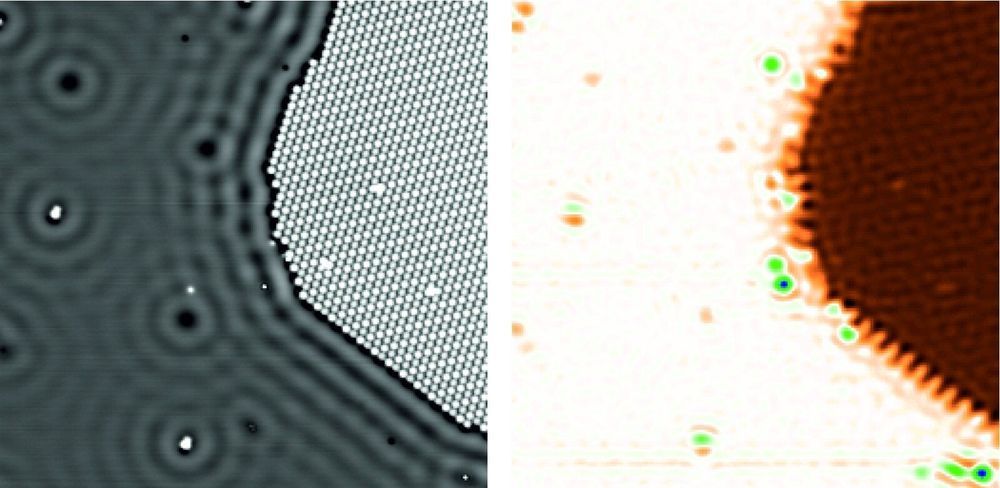

A team of researchers from Jülich, in cooperation with the University of Magdeburg, has developed a new method to measure the electric potentials of a sample with atomic precision. Until now, it was virtually impossible with conventional methods to quantitatively record the electric potentials in the immediate vicinity of individual molecules or atoms. The new scanning quantum dot microscopy method, recently presented in the journal Nature Materials by scientists from Forschungszentrum Jülich together with partners from two other institutions, could open up new opportunities for chip manufacturing or for characterizing biomolecules such as DNA.

The positively charged atomic nuclei and negatively charged electrons that make up all matter produce electric potential fields that superpose and partially cancel each other, even over very short distances. Conventional methods cannot quantitatively measure these highly localized fields, which are responsible for many material properties and functions at the nanoscale. Almost all established methods capable of imaging such potentials are based on measuring the forces caused by electric charges. Yet these forces are difficult to distinguish from other forces that act at the nanoscale, which prevents quantitative measurements.

Four years ago, however, scientists from Forschungszentrum Jülich developed a method based on a completely different principle. Scanning quantum dot microscopy involves attaching a single organic molecule, the quantum dot, to the tip of an atomic force microscope. This molecule then serves as a probe. “The molecule is so small that we can attach individual electrons from the tip of the atomic force microscope to the molecule in a controlled manner,” explains Dr. Christian Wagner, head of the Controlled Mechanical Manipulation of Molecules group at Jülich’s Peter Grünberg Institute (PGI-3).

To date, more than 110,000 users have run more than 7 million experiments on the public IBM Q Experience devices and have published more than 145 third-party research papers based on those experiments. The IBM Q Network has grown to 45 organizations all over the world, including Fortune 500 companies, research labs, academic institutions, and startups. This goal of helping industries and individuals get “quantum ready” with real quantum hardware is what makes IBM Q stand out.

SF: What are the main technological hurdles that still need to be resolved before quantum computing goes mainstream?

JW: Today’s approximate or noisy quantum computers have a coherence time of about 100 microseconds. That’s the time in which an experiment can be run on a quantum processor before errors take over. Error mitigation and error correction will need to be resolved before we have a fault-tolerant quantum computer.