Whatever you are doing, whether it is driving a car, going for a jog, or even, at your laziest, eating chips and watching TV on the couch, an entire suite of molecular machinery inside each of your cells is hard at work. That machinery, far too small to see with the naked eye or even with many microscopes, produces energy for the cell, manufactures its proteins, makes copies of its DNA, and does much more.

One of the most complex of those pieces of machinery is the nuclear pore complex (NPC). The NPC, which is made of more than 1,000 individual proteins, is an incredibly discriminating gatekeeper for the cell’s nucleus, the membrane-bound region inside a cell that holds its genetic material. Anything going into or out of the nucleus has to pass through the NPC on its way.

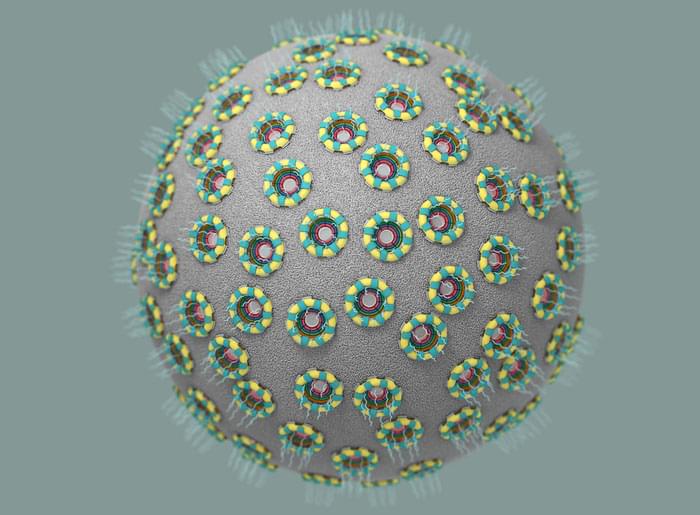

Nuclear pores stud the surface of the cell’s nucleus, controlling what flows in and out of it. (Image: Valerie Altounian)