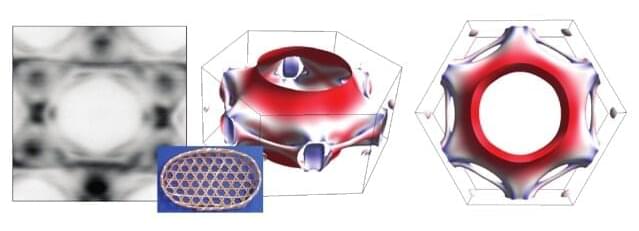

Researchers at the University at Albany’s RNA Institute have demonstrated a new approach to DNA nanostructure assembly that does not require magnesium. The method improves the biostability of the structures, making them more useful and reliable in a range of applications. The work appears in the journal Small this month.

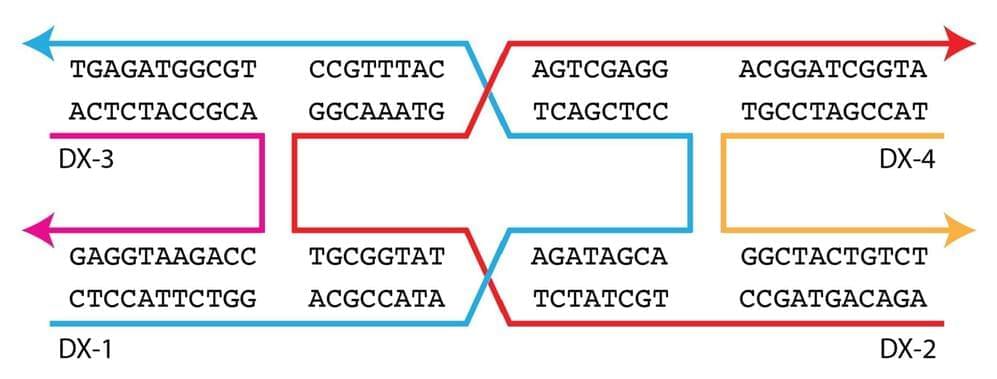

When we think of DNA, the first association that comes to mind is likely genetics: the double helix within cells that houses an organism’s blueprint for growth and reproduction. DNA nanostructures are a rapidly evolving area of DNA research. These synthetic molecules are made up of the same building blocks as the DNA found in living cells, and they are being engineered to solve critical challenges in applications ranging from medical diagnostics and drug delivery to materials science and data storage.

“In this work, we assembled DNA nanostructures without using magnesium, which is typically used in this process but comes with challenges that ultimately reduce the utility of the nanostructures that are produced,” said Arun Richard Chandrasekaran, corresponding author of the study and senior research scientist at the RNA Institute.