Google argued that its new uber-powerful quantum computer is so fast that it may have tapped a parallel universe.

A new hypothesis suggests that the very fabric of space-time may act as a dynamic reservoir for quantum information. If it holds, it would address the long-standing Black Hole Information Paradox and could reshape our understanding of quantum gravity, according to a research team that includes scientists from the pioneering quantum computing firm Terra Quantum and from Leiden University.

Published in Entropy, the Quantum Memory Matrix (QMM) hypothesis offers a mathematical framework to reconcile quantum mechanics and general relativity while preserving the fundamental principle of information conservation.

The study proposes that space-time, quantized at the Planck scale — a realm where the physics of quantum mechanics and general relativity converge — stores information from quantum interactions in “quantum imprints.” These imprints encode details of quantum states and their evolution, potentially enabling information retrieval during black hole evaporation through mechanisms like Hawking radiation. This directly addresses the Black Hole Information Paradox, which highlights the conflict between quantum mechanics — suggesting information cannot be destroyed — and classical black hole descriptions, where information appears to vanish once the black hole evaporates.

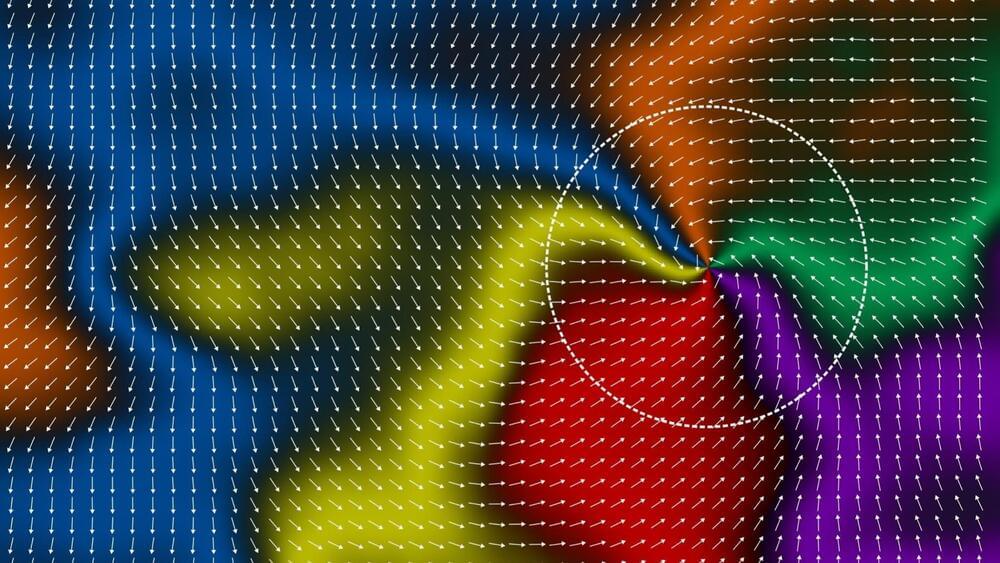

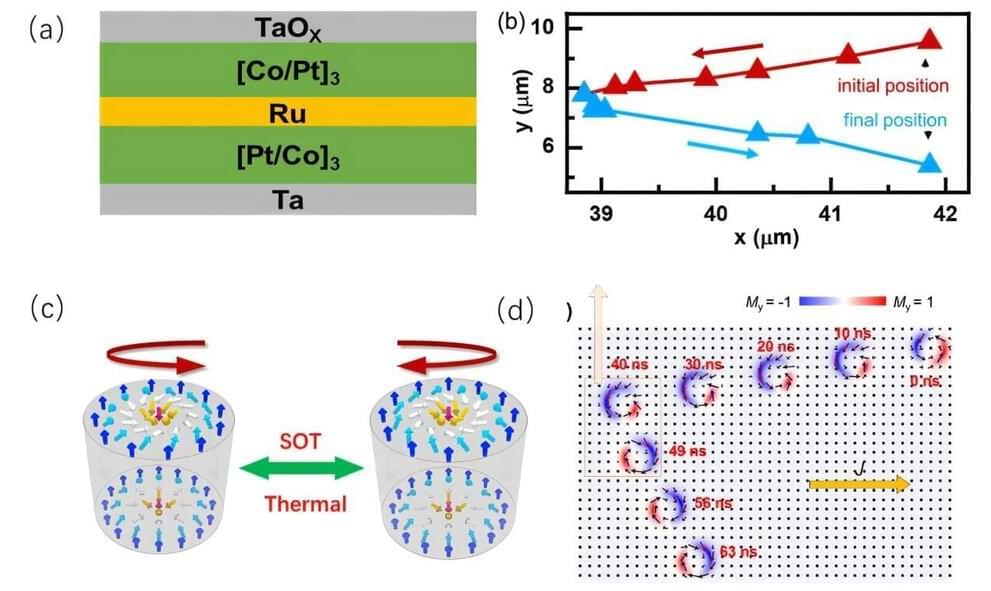

Three distinct topological degrees of freedom are used to define all topological spin textures based on out-of-plane and in-plane spin configurations: the topological charge, representing the number of times the magnetization vector m wraps around the unit sphere; the vorticity, which quantifies the angular integration of the magnetic moment along the circumferential direction of a domain wall; and the helicity, defining the swirling direction of in-plane magnetization.
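For readers who want the three quantities in symbols, the block below is a sketch using the convention common in the skyrmion literature; it is not taken from the study described here. The symbols $\theta$, $\Phi$, $v$ (vorticity), $\gamma$ (helicity) and $Q$ (topological charge) are notational assumptions.

```latex
% Unit magnetization written in spherical angles; (r, \varphi) are polar
% coordinates in the film plane. v is the vorticity, \gamma the helicity.
\mathbf{m}(r,\varphi) = \bigl(\sin\theta(r)\cos\Phi(\varphi),\ \sin\theta(r)\sin\Phi(\varphi),\ \cos\theta(r)\bigr),
\qquad \Phi(\varphi) = v\,\varphi + \gamma

% Topological charge: the number of times m wraps the unit sphere.
Q = \frac{1}{4\pi}\int \mathbf{m}\cdot\bigl(\partial_x\mathbf{m}\times\partial_y\mathbf{m}\bigr)\,dx\,dy
```

In this convention, $\gamma = 0$ or $\pi$ corresponds to a Néel-type skyrmion and $\gamma = \pm\pi/2$ to a Bloch-type one, so switching helicity means changing $\gamma$ while $Q$ and $v$ stay fixed.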

Electrical manipulation of these three degrees of freedom has garnered significant attention due to their potential applications in future spintronic devices. Among these, the helicity of a magnetic skyrmion—a critical topological property—is typically determined by the Dzyaloshinskii-Moriya interaction (DMI). However, controlling skyrmion helicity remains a formidable challenge.

A team of scientists led by Professor Yan Zhou from The Chinese University of Hong Kong, Shenzhen, and Professor Senfu Zhang from Lanzhou University successfully demonstrated a controllable helicity switching of skyrmions using spin-orbit torque, enhanced by thermal effects.

Just a few months after the previous record was set, a start-up called Quantinuum has announced that it has entangled the largest number of logical qubits – this will be key to quantum computers that can correct their own errors.

Google on Monday announced Willow, its latest, greatest quantum computing chip. The speed and reliability claims Google made about the chip were newsworthy in themselves, but what really caught the tech industry’s attention was an even wilder claim tucked into the blog post announcing it.

Google Quantum AI founder Hartmut Neven wrote in his blog post that this chip was so mind-bogglingly fast that it must have borrowed computational power from other universes.

Ergo, the chip’s performance indicates that parallel universes exist and “we live in a multiverse.”

Quantum computers differ fundamentally from classical ones. Instead of using bits (0s and 1s), they employ “qubits,” which can exist in multiple states simultaneously due to quantum phenomena like superposition and entanglement.
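To make superposition and entanglement concrete, here is a minimal sketch in plain Python that tracks state amplitudes by hand. It is an illustration only, not how any real quantum computer or SDK is programmed; the helper names `apply_gate` and `probabilities` are hypothetical.

```python
import math

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    return [sum(gate[r][c] * state[c] for c in range(2)) for r in range(2)]

def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(amplitude) ** 2 for amplitude in state]

s = 1 / math.sqrt(2)

# Hadamard gate: turns the definite state |0> into an equal superposition.
H = [[s, s],
     [s, -s]]

zero = [1.0, 0.0]            # the classical-like state |0>
plus = apply_gate(H, zero)   # (|0> + |1>) / sqrt(2)
single_probs = probabilities(plus)

# A two-qubit Bell state (|00> + |11>) / sqrt(2), written out directly.
# Amplitudes are ordered |00>, |01>, |10>, |11>.
bell = [s, 0.0, 0.0, s]
bell_probs = probabilities(bell)

print("one qubit after H:", single_probs)
print("Bell state:       ", bell_probs)
```

A measurement of the single qubit gives 0 or 1 with equal probability. In the Bell state only the outcomes 00 and 11 have non-zero probability, so the two qubits always agree: that correlation is what entanglement refers to.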

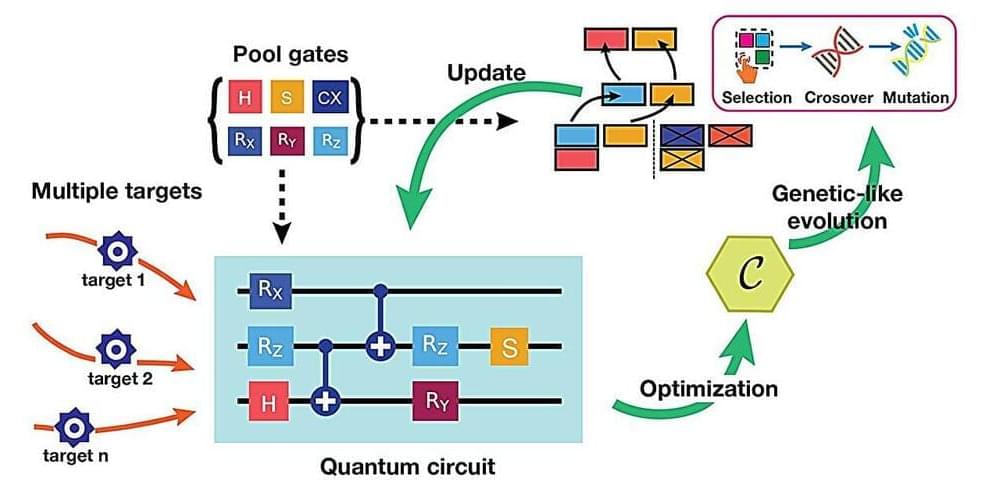

For a quantum computer to simulate dynamic processes or process data, among other essential tasks, it must translate complex input data into “quantum data” that it can understand. This process is known as quantum compilation.

Essentially, quantum compilation “programs” the quantum computer by converting a particular goal into an executable sequence. Just as the GPS app converts your desired destination into a sequence of actionable steps you can follow, quantum compilation translates a high-level goal into a precise sequence of quantum operations that the quantum computer can execute.
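One small piece of that translation can be sketched as a rewrite pass: a toy compiler that expands a high-level SWAP into three CNOT gates, a standard identity. The gate names, the tuple format and the functions `compile_circuit` and `run_on_bits` are assumptions made for this illustration; real quantum compilers, including the approach in the research described here, do far more.

```python
def compile_circuit(ops):
    """Rewrite high-level ops into a toy native gate set {"X", "CNOT"}."""
    native = []
    for name, *qubits in ops:
        if name == "SWAP":
            a, b = qubits
            # Standard identity: SWAP(a, b) equals three alternating CNOTs.
            native += [("CNOT", a, b), ("CNOT", b, a), ("CNOT", a, b)]
        elif name in ("X", "CNOT"):
            native.append((name, *qubits))
        else:
            raise ValueError(f"no rewrite rule for {name}")
    return native

def run_on_bits(ops, bits):
    """Apply the ops to classical basis states only (enough for a sanity check)."""
    bits = list(bits)
    for name, *qubits in ops:
        if name == "X":
            bits[qubits[0]] ^= 1
        elif name == "CNOT":
            control, target = qubits
            bits[target] ^= bits[control]
        elif name == "SWAP":
            a, b = qubits
            bits[a], bits[b] = bits[b], bits[a]
    return bits

# High-level "goal": flip qubit 0, then move its value to qubit 1.
program = [("X", 0), ("SWAP", 0, 1)]
compiled = compile_circuit(program)

print(compiled)
print(run_on_bits(program, [0, 0]), run_on_bits(compiled, [0, 0]))
```

The check runs both versions on classical inputs and compares the results. That confirms the rewrite on basis states only; it is a sanity check, not a full proof of equivalence for arbitrary quantum states.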

The semiconductor industry’s long-held imperative, Moore’s Law, which holds that transistor densities on a chip should double roughly every two years, is getting more and more difficult to maintain. The ability to shrink transistors, and the interconnects between them, is hitting some basic physical limitations. In particular, when copper interconnects are scaled down, their resistivity skyrockets, which decreases how much information they can carry and increases their energy draw.

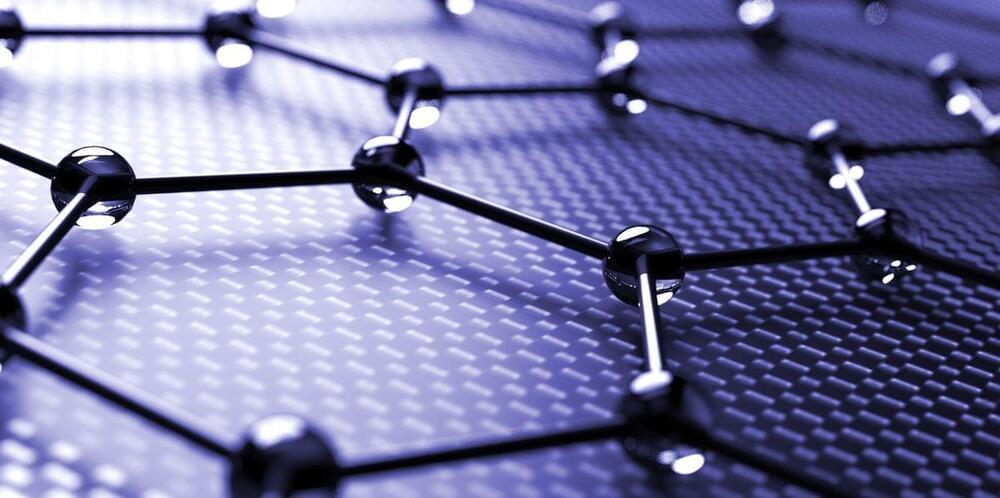

The industry has been looking for alternative interconnect materials to prolong the march of Moore’s Law a bit longer. Graphene is a very attractive option in many ways: The sheet-thin carbon material offers excellent electrical and thermal conductivity, and is stronger than diamond.

However, researchers have struggled to incorporate graphene into mainstream computing applications for two main reasons. First, depositing graphene requires high temperatures that are incompatible with traditional CMOS manufacturing. And second, the charge carrier density of undoped, macroscopic graphene sheets is relatively low.

Making smaller transistors, and the interconnections between them, is getting nearly impossible. Copper interconnects get more resistive as they are scaled down, making them worse and slower at carrying information. Startup Destination 2D thinks graphene is the solution. The company has a novel technique for growing graphene that is CMOS compatible, promising a 100x improvement in current density over copper.

Researchers have developed a new, fast, and rewritable method for DNA computing that promises smaller, more powerful computers.

This method mimics the sequential and simultaneous gene expression in living organisms and incorporates programmable DNA circuits with logic gates. The improved process places DNA on a solid glass surface, enhancing efficiency and reducing the need for manual transfers, culminating in a 90-minute reaction time in a single tube.

Advancements in DNA-Based Computation.